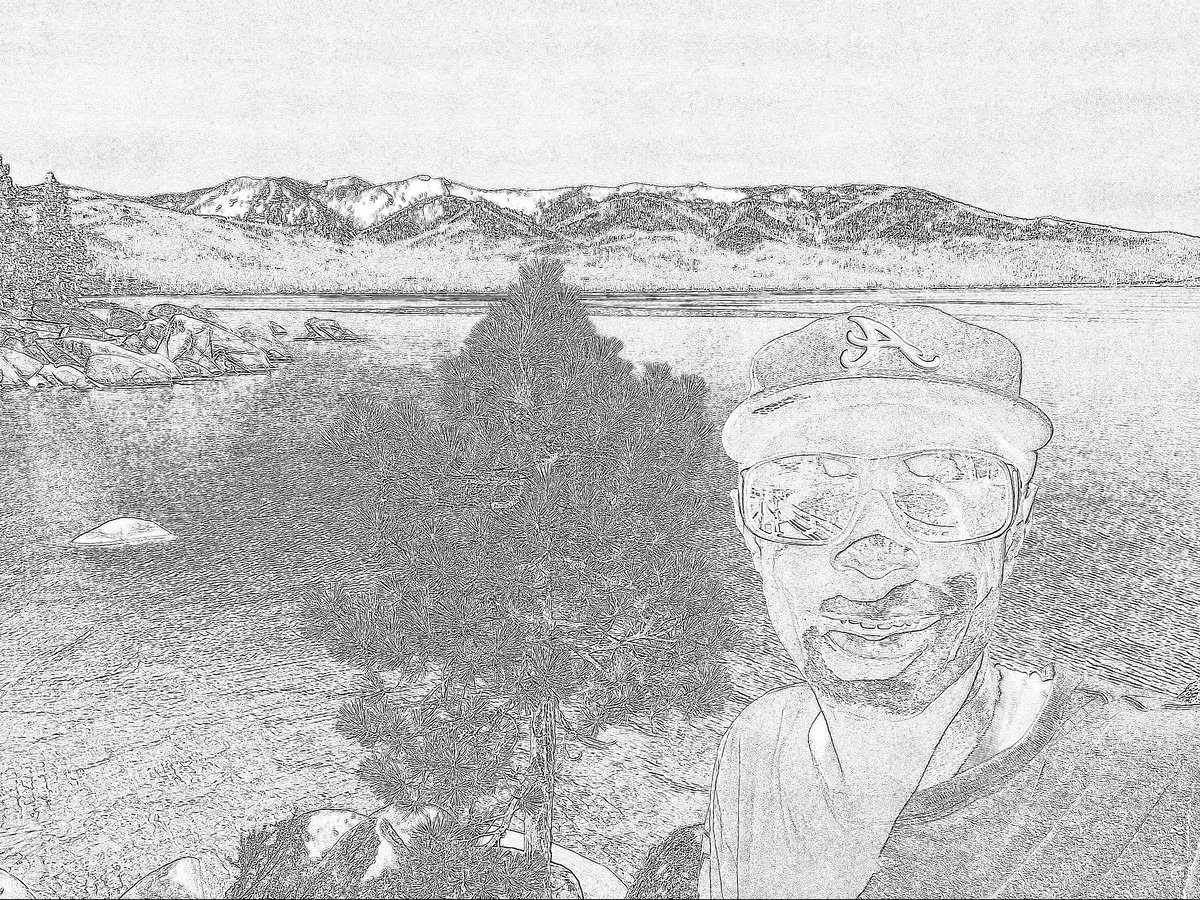

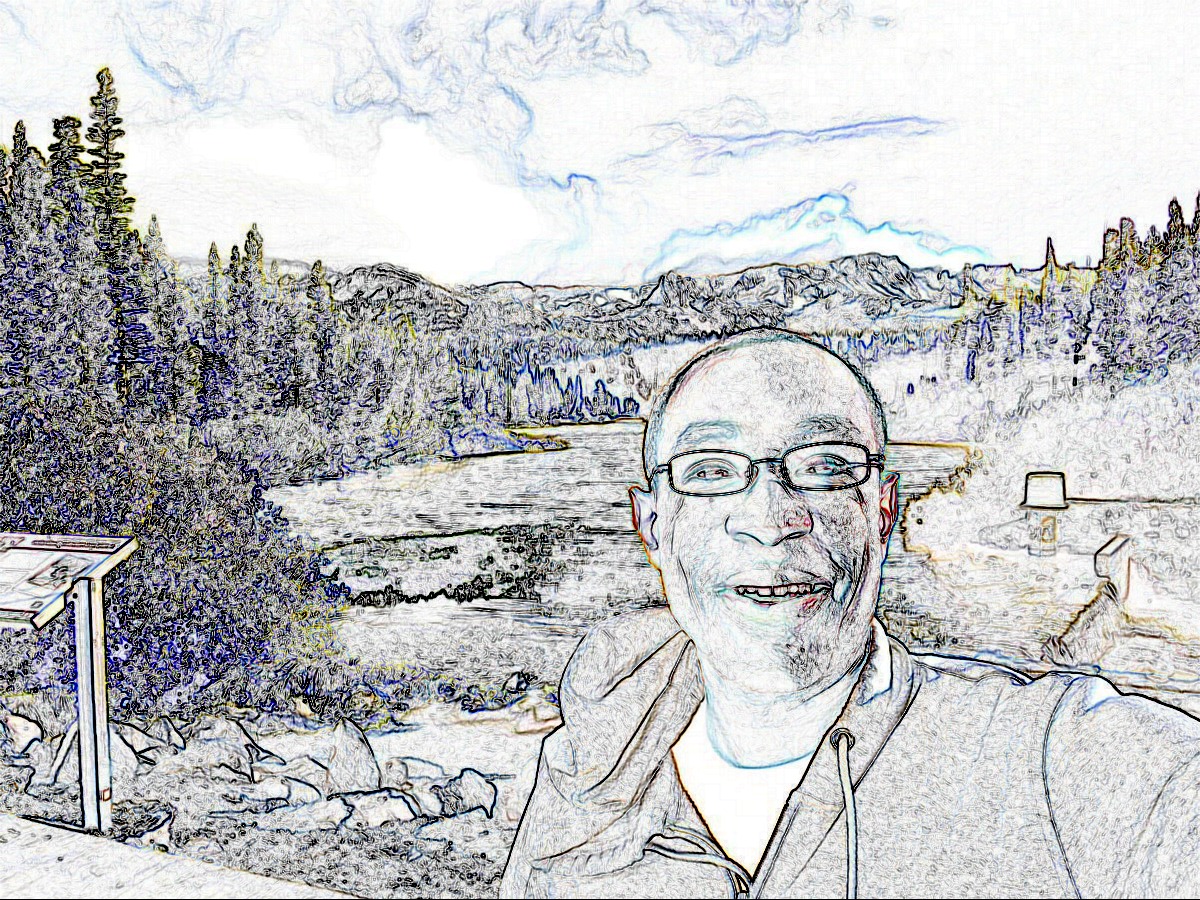

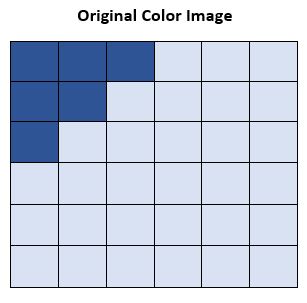

In this post, I will explain how the Canny Edge Detector works. The Canny Edge Detector is a popular edge detection algorithm developed by John F. Canny in 1986. The goal of the Canny Edge Detector is to:

- Minimize Error: Edges that are detected by the algorithm as edges should be real edges and not noise.

- Good Localization: Minimize the distance between detected edge pixels and real edge pixels.

- Minimal Responses to Single Edges: In other words, areas of the image that are not marked as edges should not be edges.

How the Canny Edge Detector Works

The Canny Edge Detector Process is as follows:

- Gaussian Filter: Smooth the input image with a Gaussian filter to remove noise (using a discrete Gaussian kernel).

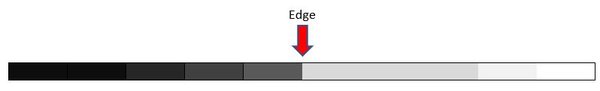

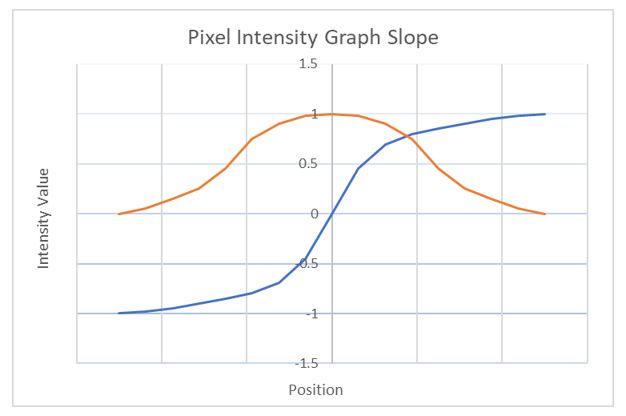

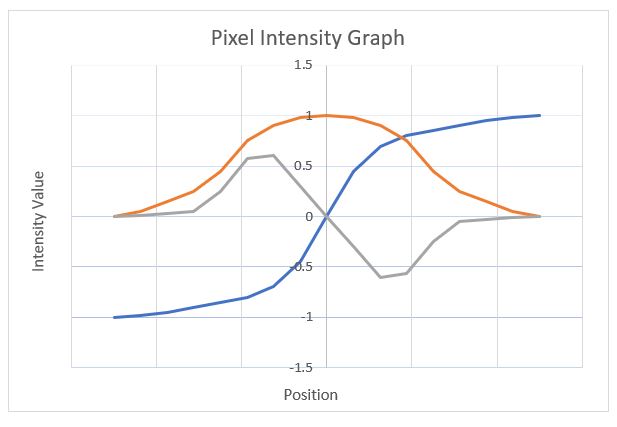

- Calculate Intensity Gradients: Identify the areas in the image with the strongest intensity gradients (using a Sobel, Prewitt, or Roberts kernel).

- Non-maximum Suppression: Apply non-maximum suppression to thin out the edges. We want to remove unwanted pixels that might not be part of an edge.

- Thresholding with Hysteresis: Hysteresis or double thresholding involves:

- Accepting pixels as edges if the intensity gradient value exceeds an upper threshold.

- Rejecting pixels as edges if the intensity gradient value is below a lower threshold.

- If a pixel is between the two thresholds, accept it only if it is adjacent to a pixel that is above the upper threshold.

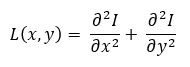

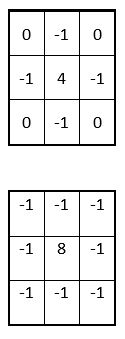

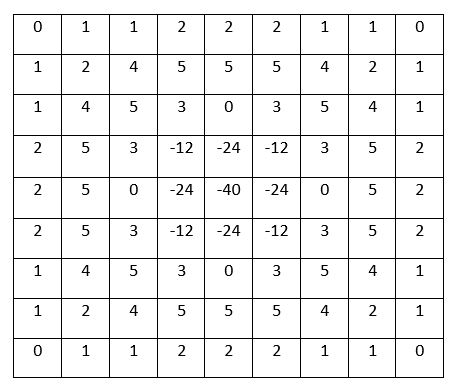

Mathematical Formulation of the Canny Edge Detector

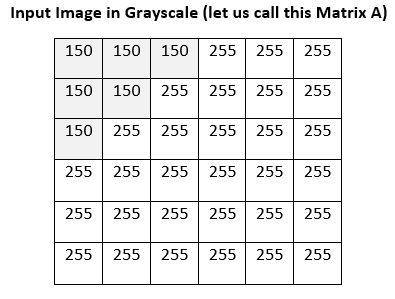

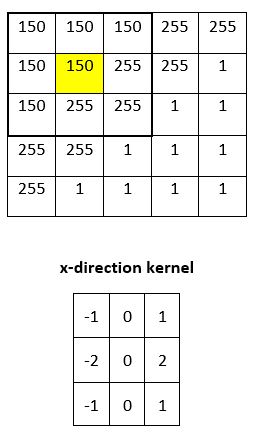

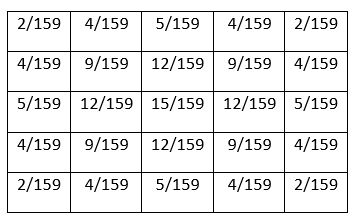

More formally, in step 1 of the Canny Edge Detector, we smooth an image by convolving the image with a Gaussian kernel. An example calculation showing the convolving mathematical operation is shown in the Sobel Operator discussion. Below is an example 5×5 Gaussian kernel that can be used.

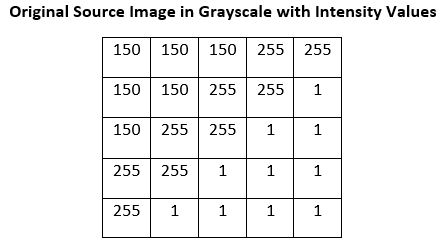

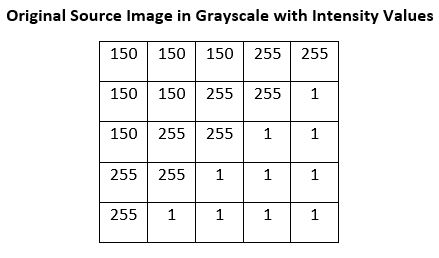

We must go through each 5×5 region in the image and apply the convolving operation between a 5×5 portion of the input image (with the pixel of interest as the center cell, or anchor) and the 5×5 kernel above. The result is then summed to give us the new intensity value for that pixel.

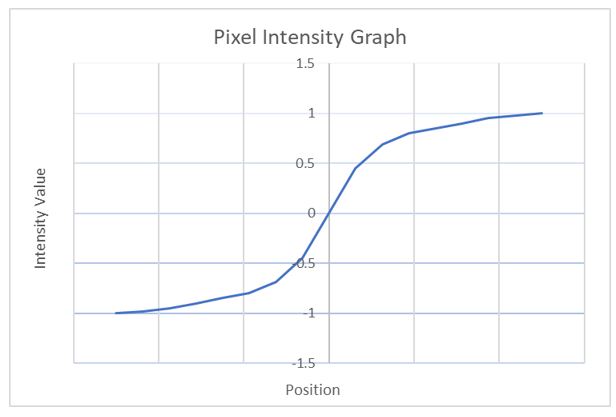

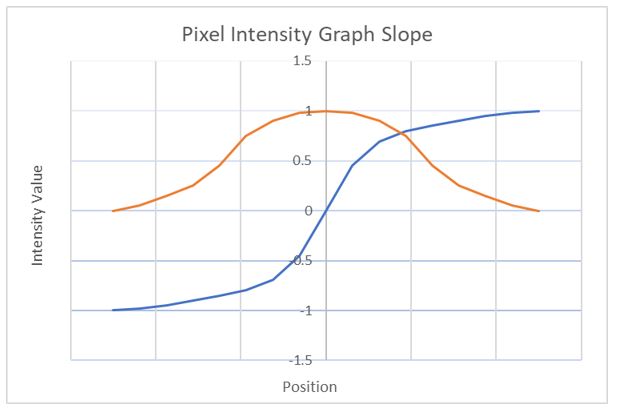

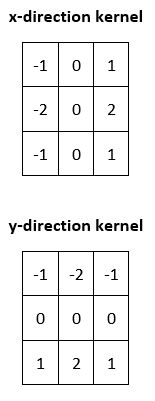

After smoothing the image using the Gaussian kernel, we then calculate the intensity gradients. A common method is to use the Sobel Operator.

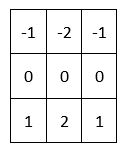

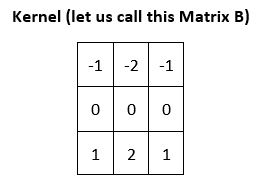

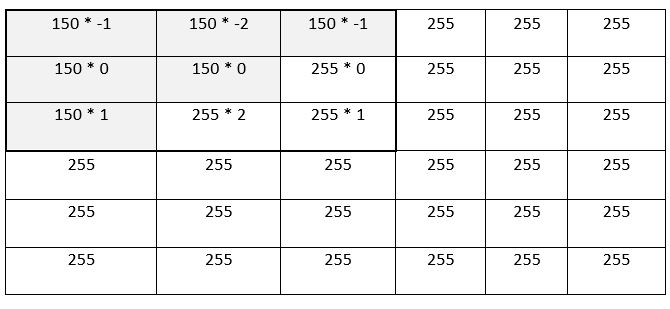

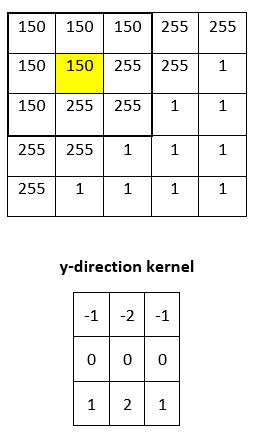

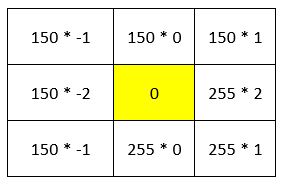

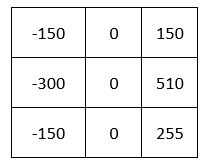

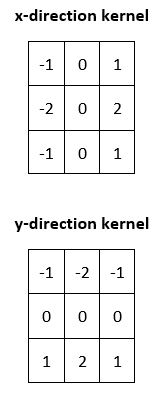

Here are the two kernels used in the Sobel algorithm:

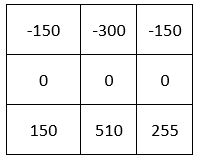

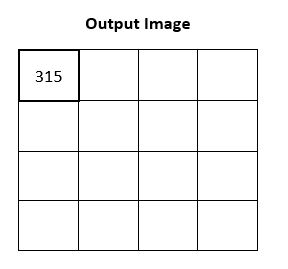

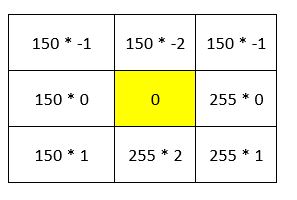

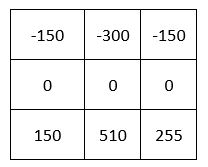

The gradient approximations at pixel (x,y) given a 3×3 portion of the source image Ii are calculated as follows:

Gx = x-direction kernel * (3x3 portion of image A with (x,y) as the center cell)

Gy = y-direction kernel * (3x3 portion of image A with (x,y) as the center cell)

* above is not normal matrix multiplication. * denotes the convolution operation.

We then combine the values above to calculate the magnitude of the gradient:

magnitude(G) = square_root(Gx2 + Gy2)

The direction of the gradient Ɵ is:

Ɵ = atan(Gy / Gx)

where atan is the arctangent operator.

Once we have the gradient magnitude and direction, we perform non-maximum suppression by scanning the entire image to get rid of pixels that might not be part of an edge. Non-maximum suppression works by finding pixels that are local maxima in the direction of the gradient (gradient direction is perpendicular to edges).

If, for example, we have three pixels that are next to each other: pixels a, b, and then c. Pixel b is larger in intensity than both a and c where pixels a and c are in the gradient direction of b. Therefore, pixel b is marked as an edge. Otherwise, if pixel b was not a local maximum, it would be set to 0 (i.e. black), meaning it would not be an edge pixel.

a ——> b <edge> ——> c

Non-maximum suppression is not perfect because some edges might actually be noise and not real edges. To solve this, Canny Edge Detector goes one step further and applies thresholding to remove the weakest edges and keep the strongest ones. Edge pixels that are borderline weak or strong are only considered strong if they are connected to strong edge pixels.

Canny Edge Detector Code

This tutorial has the Python code for the Canny Edge Detector.

Conclusion

In this discussion, we covered the Canny Edge Detector. The Canny Edge Detector is just one of many edge detection algorithms.

The most common edge detection algorithms fall into the following categories:

- Gradient Operators

- Roberts Cross Operator

- Sobel Operator

- Prewitt Operator

- Canny Edge Detector

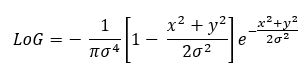

- Laplacian of Gaussian

- Haralick Operator

Which edge detection algorithm you choose depends on what you are trying to achieve with your application.

Keep building!