In this tutorial, we will simulate and control a mobile robot in Gazebo. I will show you how to set up and control a mecanum wheel robot using ROS 2 Control and Gazebo.

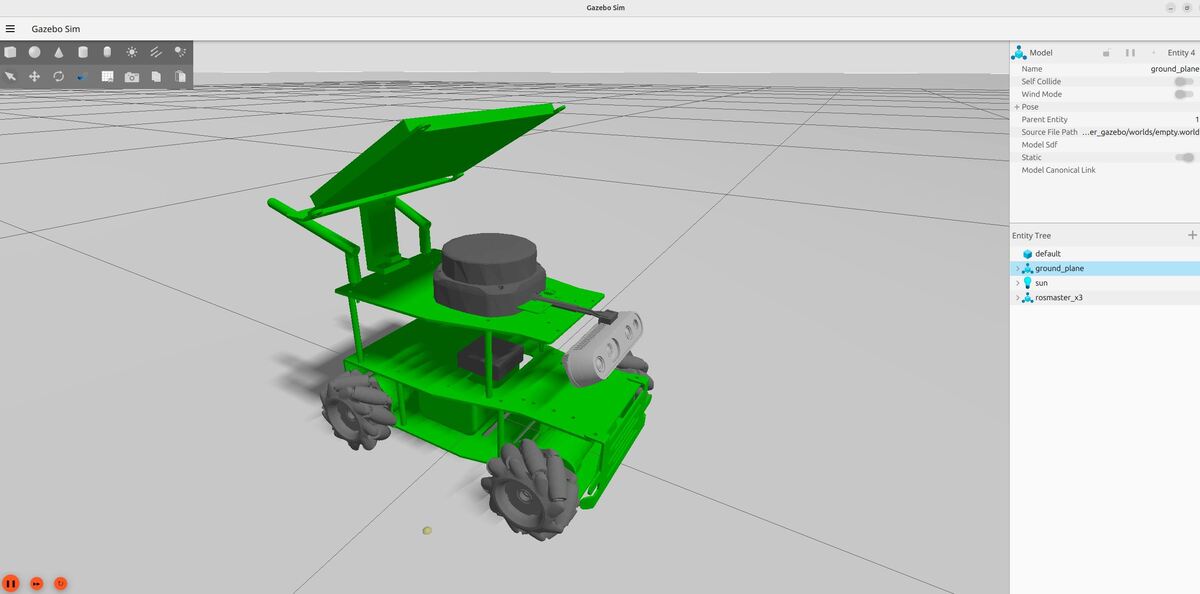

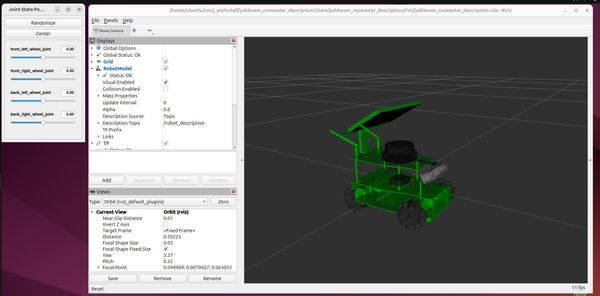

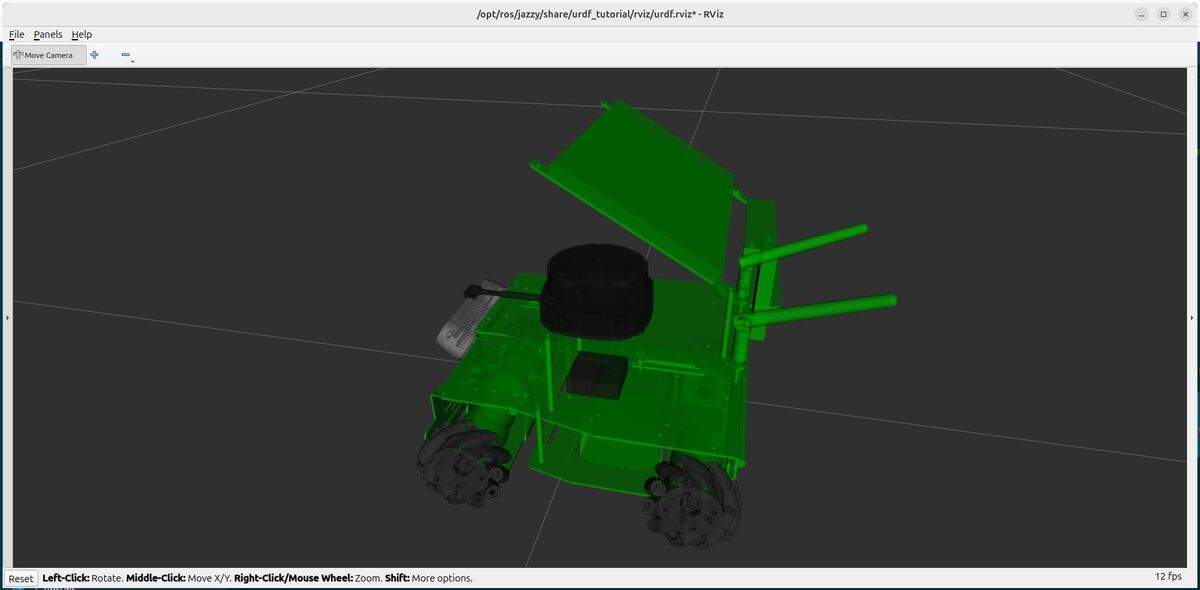

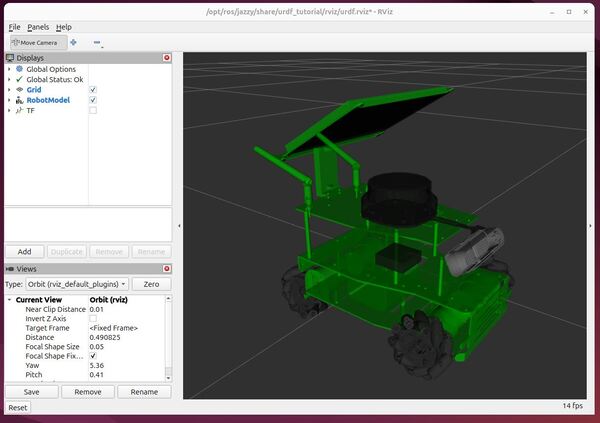

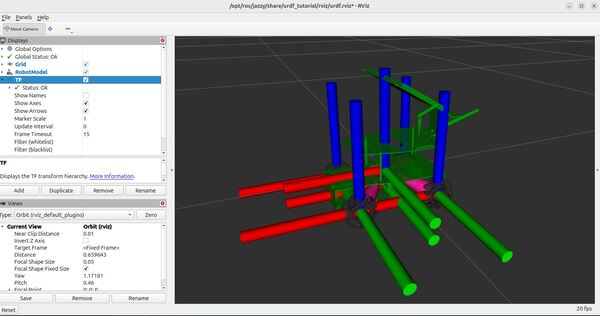

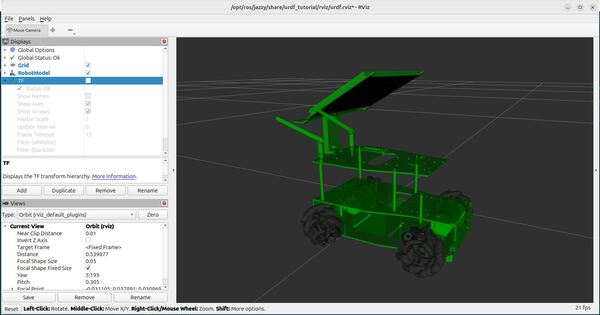

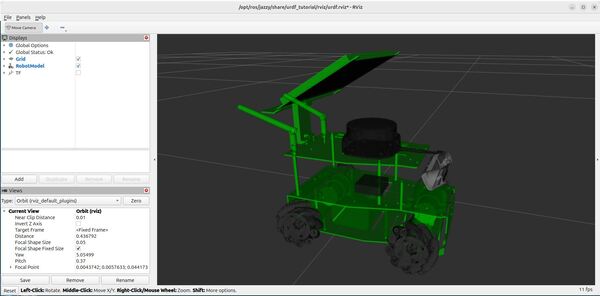

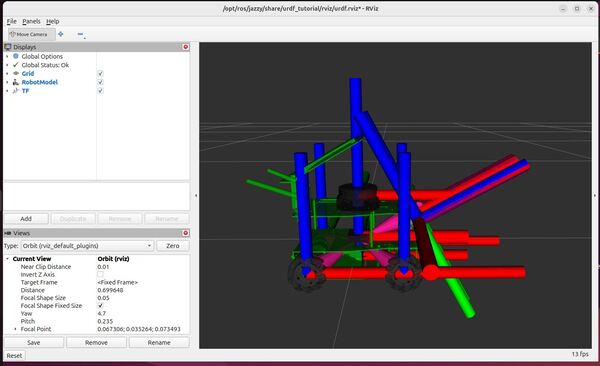

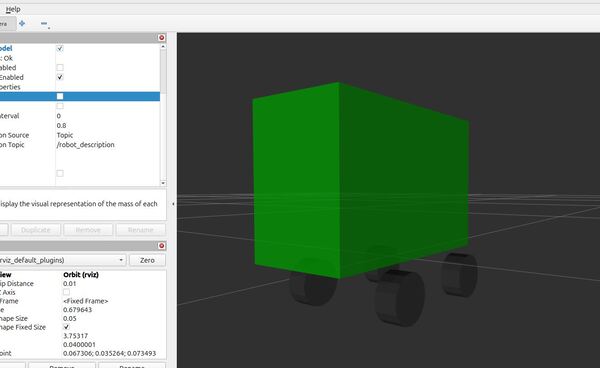

By the end of this tutorial, you will be able to build this:

If you prefer to learn by video, you can follow the entire tutorial here:

Prerequisites

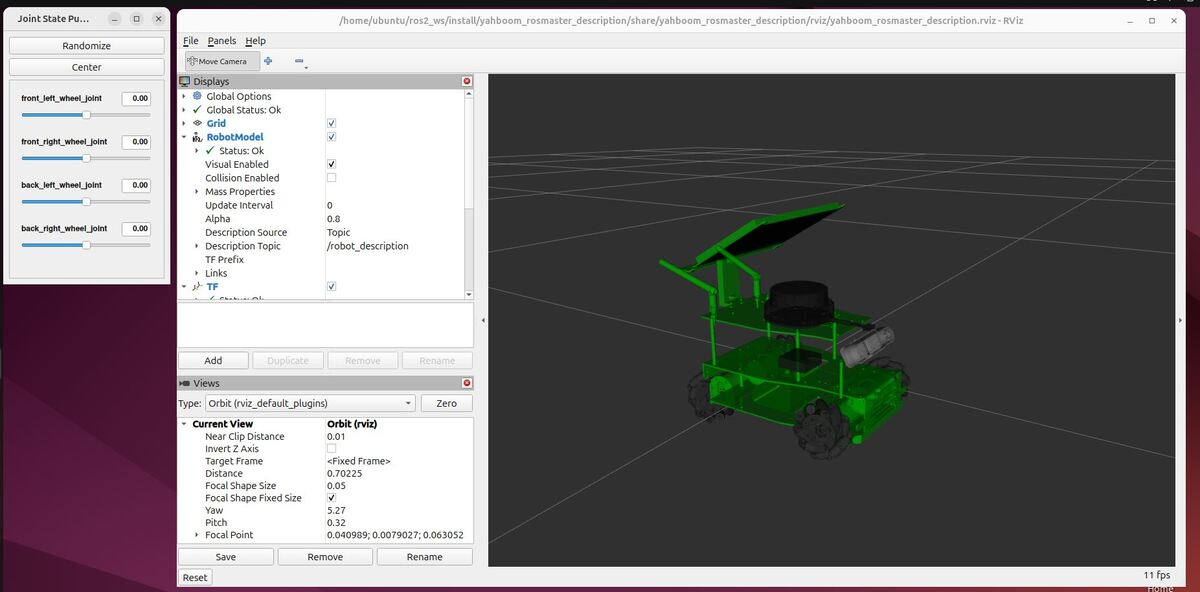

- You have completed this tutorial: Create and Visualize a Mobile Robot with URDF – ROS 2 Jazzy.

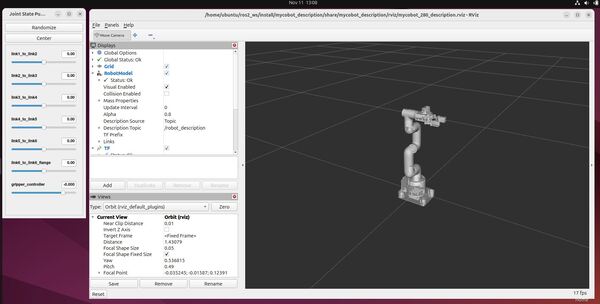

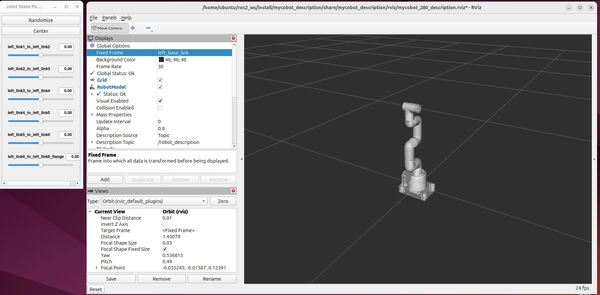

- You have completed this tutorial: How to Simulate a Robotic Arm in Gazebo – ROS 2 Jazzy (recommended but not required if you understand how Gazebo and ROS 2 Control work at a high level)

- I am assuming you are using Visual Studio Code, but you can use any code editor.

All my code for this project is located here on GitHub.

Create Packages

Navigate to your workspace, and create the following packages. You can replace the maintainer-name and maintainer email with your own information.

cd ~/ros2_ws/src/yahboom_rosmaster/ros2 pkg create --build-type ament_cmake \

--license BSD-3-Clause \

--maintainer-name ubuntu \

--maintainer-email automaticaddison@todo.com \

yahboom_rosmaster_bringupros2 pkg create --build-type ament_cmake \

--license BSD-3-Clause \

--maintainer-name ubuntu \

--maintainer-email automaticaddison@todo.com \

yahboom_rosmaster_gazeboros2 pkg create --build-type ament_cmake \

--license BSD-3-Clause \

--maintainer-name ubuntu \

--maintainer-email automaticaddison@todo.com \

yahboom_rosmaster_system_testsUpdate the package.xml files for all packages, including the metapackage. Be sure to add a good description line for each.

You can also update the metapackage with the new packages you just created.

cd yahboom_rosmaster

gedit package.xml

<?xml version="1.0"?>

<?xml-model href="http://download.ros.org/schema/package_format3.xsd" schematypens="http://www.w3.org/2001/XMLSchema"?>

<package format="3">

<name>yahboom_rosmaster</name>

<version>0.0.0</version>

<description>ROSMASTER series robots by Yahboom (metapackage).</description>

<maintainer email="automaticaddison@todo.todo">ubuntu</maintainer>

<license>BSD-3-Clause</license>

<buildtool_depend>ament_cmake</buildtool_depend>

<exec_depend>yahboom_rosmaster_bringup</exec_depend>

<exec_depend>yahboom_rosmaster_description</exec_depend>

<exec_depend>yahboom_rosmaster_gazebo</exec_depend>

<exec_depend>yahboom_rosmaster_system_tests</exec_depend>

<test_depend>ament_lint_auto</test_depend>

<test_depend>ament_lint_common</test_depend>

<export>

<build_type>ament_cmake</build_type>

</export>

</package>

Edit package.xml

Now let’s make sure some key packages are installed to handle the integration between ROS 2 and Gazebo. One important package is ros_gz. Here is the GitHub repository, and here are the official installation instructions. Let’s walk through this together.

Open a terminal window, and go to your package.xml folder inside the yahboom_rosmaster_gazebo package.

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_gazeboOpen the package.xml file.

Make sure it has this block:

<depend>controller_manager</depend>

<depend>gz_ros2_control</depend>

<depend>python3-numpy</depend>

<depend>rclcpp</depend>

<depend>ros_gz</depend>

<depend>ros_gz_bridge</depend>

<depend>ros_gz_image</depend>

<depend>ros_gz_sim</depend>

<depend>ros2_control</depend>

<depend>ros2_controllers</depend>

<depend>trajectory_msgs</depend>

<depend>xacro</depend>

<exec_depend>gz_ros2_control_demos</exec_depend>

<exec_depend>rqt_robot_steering</exec_depend>

<exec_depend>rviz_imu_plugin</exec_depend>

Edit CMakeLists.txt

Now open the CMakeLists.txt file, and add this block:

find_package(ament_cmake REQUIRED)

find_package(controller_manager REQUIRED)

find_package(gz_ros2_control REQUIRED)

find_package(rclcpp REQUIRED)

find_package(ros_gz REQUIRED)

find_package(ros_gz_bridge REQUIRED)

find_package(ros_gz_image REQUIRED)

find_package(ros_gz_sim REQUIRED)

find_package(ros2_control REQUIRED)

find_package(ros2_controllers REQUIRED)

find_package(trajectory_msgs REQUIRED)

find_package(xacro REQUIRED)

Build the Workspace

Now let’s build our workspace.

cd ~/ros2_ws/rosdep install -i --from-path src --rosdistro $ROS_DISTRO -ycolcon build && source ~/.bashrcOpen a terminal window, and type:

echo "alias build='cd ~/ros2_ws/ && colcon build && source ~/.bashrc'" >> ~/.bashrcNow going forward, any time you want to build you workspace, just type:

buildTest Your Installation

Let’s test to see if Gazebo and ROS 2 Control are working properly:

ros2 launch gz_ros2_control_demos diff_drive_example.launch.pyTo move the robot from the terminal window, you can type:

ros2 topic pub /diff_drive_base_controller/cmd_vel geometry_msgs/msg/TwistStamped "{header: {stamp: now, frame_id: 'base_link'}, twist: {linear: {x: 0.2, y: 0.0, z: 0.0}, angular: {x: 0.0, y: 0.0, z: 0.5}}}" --rate 5To stop the robot:

CTRL + C

ros2 topic pub /diff_drive_base_controller/cmd_vel geometry_msgs/msg/TwistStamped "{header: {stamp: now, frame_id: 'base_link'}, twist: {linear: {x: 0.0, y: 0.0, z: 0.0}, angular: {x: 0.0, y: 0.0, z: 0.0}}}" --rate 5Create World Files

Now we will create an environment for our simulated robot.

Open a terminal window, and type:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_gazebo/Create a worlds folder and other folders we will need later:

mkdir -p worlds launch modelsNow let’s create our world. The first world we will create is an empty world.

cd worldsgedit empty.worldAdd this code.

Save the file, and close it.

Now launch your Gazebo world:

cd ~/ros2_ws/src/mycobot_ros2/mycobot_gazebo/worlds/gz sim empty.worldPress CTRL + C to close everything.

Now let’s create a world called pick_and_place_demo.world.

gedit pick_and_place_demo.worldAdd this code.

Now let’s create a world called house.world.

gedit house.worldAdd this code.

Save the file, and close it.

Now let’s create a world called cafe.world.

gedit cafe.worldAdd this code.

Save the file, and close it.

Add all these models to your models folder.

Create a Mecanum Drive Controller

We need to create a controller for a mobile robot with mecanum omnidirectional wheels since none exists in the official ros2_controllers package. The official instructions for creating a new controller are on this page. We will adapt these instructions to your use case.

Create a Package

cd ~/ros2_ws/src/yahboom_rosmaster/ros2 pkg create --build-type ament_cmake \

--license BSD-3-Clause \

--maintainer-name ubuntu \

--maintainer-email automaticaddison@todo.com \

mecanum_drive_controllerNow let’s prepare our source files. Move into the new package directory and create the necessary folders:

cd mecanum_drive_controllermkdir -p test configPrepare Files

Let’s create the header files:

touch include/mecanum_drive_controller/mecanum_drive_controller.hpp

touch include/mecanum_drive_controller/odometry.hpp

touch include/mecanum_drive_controller/speed_limiter.hpp

touch include/mecanum_drive_controller/visibility_control.hNext, create the source files we need to implement:

touch src/mecanum_drive_controller.cpp

touch src/mecanum_drive_controller_parameter.yaml

touch src/odometry.cpp

touch src/speed_limiter.cppCreate the test files we need to create:

mkdir -p test/config/

touch test/config/test_mecanum_drive_controller.yaml

touch test/test_mecanum_drive_controller.cpp

touch test/test_load_mecanum_drive_controller.cppCreate other files we need to implement:

touch mecanum_drive_plugin.xmlAdd Declarations into the Header File

Open mecanum_drive_controller.hpp inside the include/mecanum_drive_controller folder.

Add this code.

Save the file, and close it.

Open odometry.hpp inside the include/mecanum_drive_controller folder.

Add this code.

Save the file, and close it.

Open speed_limiter.hpp inside the include/mecanum_drive_controller folder.

Add this code.

Save the file, and close it.

Open visibility_control.h inside the include/mecanum_drive_controller folder.

Add this code.

Save the file, and close it.

Mecanum Wheel Forward and Inverse Kinematics

Before we write our source code, let’s dive into the mathematics that make omnidirectional movement possible. This foundation will be important when we implement our controller in the next section.

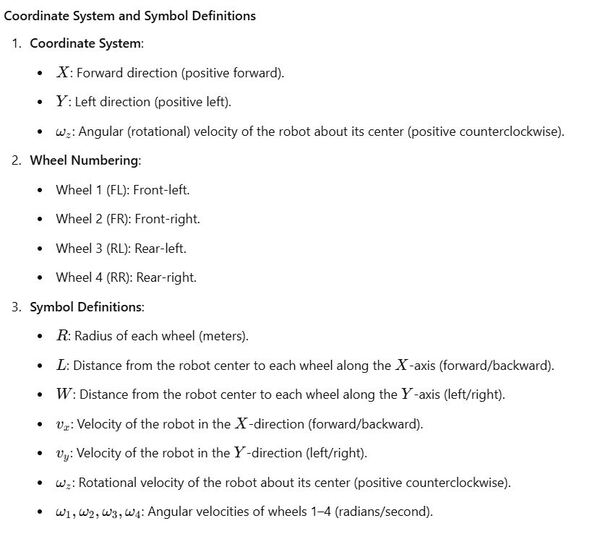

The coordinate system for our robot follows ROS 2 conventions. The base frame’s X-axis points forward, Y-axis points left, and rotations follow the right-hand rule with positive counterclockwise rotation. We number our wheels starting from the front-left as Wheel 1 (FL), moving clockwise to Wheel 2 (FR), Wheel 3 (RL), and Wheel 4 (RR).

Understanding Wheel Rotation Direction

Before diving into the kinematics equations, we need to understand how wheel rotations are defined in our system.

Each wheel’s rotation axis aligns with its positive y-axis, which runs parallel to the robot base’s y-axis. You can see this in the mecanum_wheel.urdf.xacro file. A positive wheel velocity value indicates counterclockwise rotation when viewed down this y-axis (imagine looking at the wheel from its side with the y-axis pointing towards you).

This convention has practical implications. For example, when commanding the robot to move forward, each wheel rotates counterclockwise around its y-axis, creating a positive velocity value. Think of the wheel’s 2D plane (formed by its positive z and x axes) rotating counterclockwise around the y-axis. This coordinated motion drives the robot base forward.

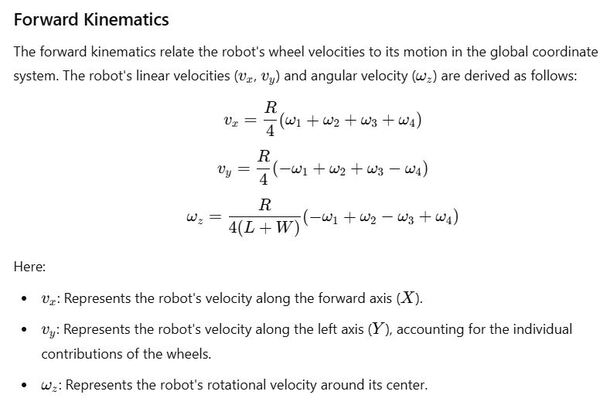

Forward Kinematics: From Wheel Speeds to Robot Motion

The forward kinematics equations tell us how individual wheel velocities combine to create the robot’s overall motion. These equations are particularly useful when we need to estimate the robot’s actual movement based on wheel encoder feedback.

Looking at the equations, we can see how each wheel contributes to the robot’s linear velocities (vx and vy) and angular velocity (ωz). The wheel radius R scales the contribution of each wheel’s angular velocity (ω1 through ω4).

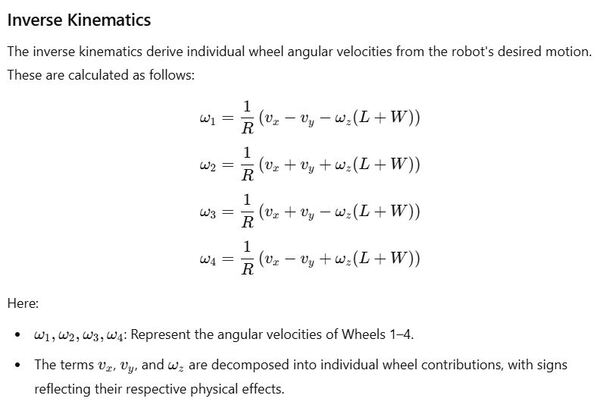

Inverse Kinematics for Motion Control

In our ROS 2 controller, we’ll primarily use inverse kinematics. These equations help us calculate the required wheel velocities to achieve desired robot motion. When we receive velocity commands (typically from the /cmd_vel topic), we’ll use these equations to determine appropriate wheel speeds.

The inverse kinematics equations consider:

- The robot’s desired linear velocities (vx and vy)

- The desired angular velocity (ωz)

- The robot’s physical dimensions (L and W)

- The wheel radius (R)

In our mecanum drive controller we transform velocity commands into actual wheel motions. We also handle odometry feedback using the forward kinematics equations to provide accurate motion estimation of the full robot.

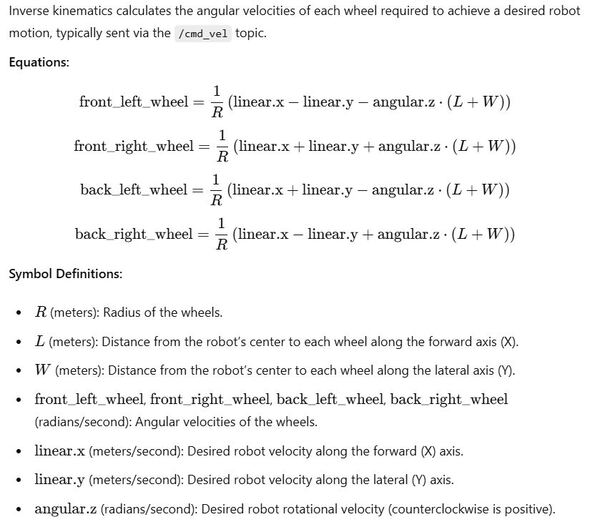

Mecanum Wheel Kinematics in ROS 2: Translating Equations into Code

Now that we understand the mathematics behind forward and inverse kinematics, let’s map these equations to ROS 2 concepts. This step bridges the gap between theory and implementation, ensuring a smooth transition into developing a functional mecanum drive controller.

Forward Kinematics in ROS 2

These equations estimate the robot’s motion based on wheel encoder feedback, providing odometry as linear and angular velocities in meters per second and radians per second.

Inverse Kinematics in ROS 2

These equations allow us to translate velocity commands into individual wheel speeds.

Create Source Files

Now let’s create the source files.

cd ~/ros2_ws/src/yahboom_rosmaster/mecanum_drive_controller/Open speed_limiter.cpp inside the src folder.

Add this code.

Save the file, and close it.

Open mecanum_drive_controller_parameter.yaml inside the src folder.

Add this code.

Save the file, and close it.

Open odometry.cpp inside the src folder.

Add this code.

Save the file, and close it.

Open mecanum_drive_controller.cpp inside the src folder.

Add this code.

Save the file, and close it.

Write Export Definition for Pluginlib

Now we need to create the pluginlib XML file in the package root.

cd ~/ros2_ws/src/yahboom_rosmaster/mecanum_drive_controller/Open mecanum_drive_plugin.xml

Add this code.

Save the file, and close it.

Write a Test to Check if the Controller Can Be Found and Loaded

Now we are going to write tests to confirm the controller can be found and loaded.

cd ~/ros2_ws/src/yahboom_rosmaster/mecanum_drive_controller/testOpen test_mecanum_drive_controller.yaml.

Add this code.

Save the file, and close it.

We are not going to use this yaml file. I just put it there as a reference to list all the available parameters for this controller.

Now let’s write the code to test if the mecanum drive controller can be loaded into the ROS 2 Control framework.

Open test_load_mecanum_drive_controller.cpp.

Add this code.

Save the file, and close it.

Add Dependencies into package.xml File

Now we need to update our package dependencies.

cd ~/ros2_ws/src/yahboom_rosmaster/mecanum_drive_controller/Open the package.xml file.

Add this code.

Save the file, and close it.

Add Compile Directives into the CMakeLists.txt File

Now we need to update our CMakeLists.txt file.

cd ~/ros2_ws/src/yahboom_rosmaster/mecanum_drive_controller/Open the CMakeLists.txt file.

Add this code.

Save the file, and close it.

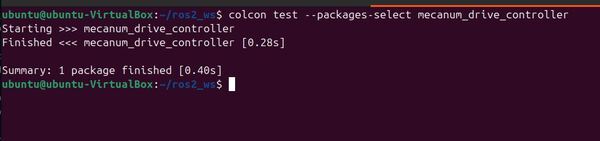

Compile and Test the Controller

Let’s build our package to make sure everything is set up correctly:

cd ~/ros2_wsrosdep install --from-paths src --ignore-src -r -ycolcon build --packages-select mecanum_drive_controllersource ~/.bashrcFinally, let’s run the tests to ensure our controller can be found and loaded:

colcon test --packages-select mecanum_drive_controller

OR (for detailed output)

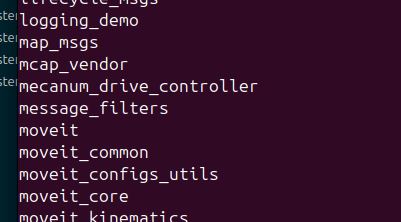

colcon test --packages-select mecanum_drive_controller --event-handlers console_direct+You can also see your new controller if you type:

ros2 pkg list

Connect Gazebo to ROS 2 Control

Create a YAML Configuration File for the Controller Manager

After setting up our simulation environment in Gazebo, we need to tell ROS 2 Control how to manage our robot’s movements in this virtual world. Let’s create a configuration file that defines how our robot’s wheels should be controlled.

Open a terminal window, and type:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_description/mkdir -p config/rosmaster_x3/Create a new file inside the config/rosmaster_x3 directory called:

ros2_controllers.yamlAdd this code:

# controller_manager provides the necessary infrastructure to manage multiple controllers

# efficiently and robustly using ROS 2 Control.

controller_manager:

ros__parameters:

update_rate: 50 # Hz

# Declare the controllers

joint_state_broadcaster:

type: joint_state_broadcaster/JointStateBroadcaster

mecanum_drive_controller:

type: mecanum_drive_controller/MecanumDriveController

# Parameters for the mecanum drive controller

mecanum_drive_controller:

ros__parameters:

# Joint names

front_left_joint_name: front_left_wheel_joint

front_right_joint_name: front_right_wheel_joint

back_left_joint_name: back_left_wheel_joint

back_right_joint_name: back_right_wheel_joint

# Robot physical parameters

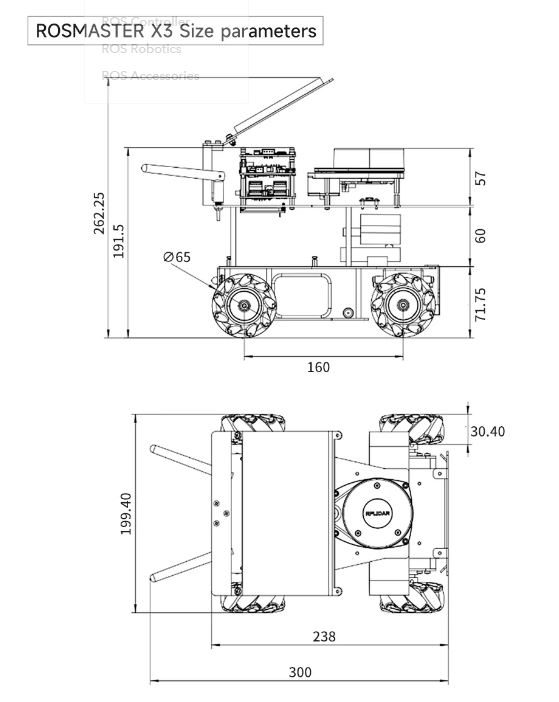

wheel_base: 0.16

wheel_separation: 0.169

wheel_radius: 0.0325

wheel_separation_multiplier: 1.0

front_left_wheel_radius_multiplier: 1.0

front_right_wheel_radius_multiplier: 1.0

back_left_wheel_radius_multiplier: 1.0

back_right_wheel_radius_multiplier: 1.0

# TF configuration

tf_frame_prefix_enable: false

tf_frame_prefix: ""

odom_frame_id: odom

base_frame_id: base_footprint

# Odometry parameters

pose_covariance_diagonal: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

twist_covariance_diagonal: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

position_feedback: true

open_loop: true

enable_odom_tf: true

# Command handling

cmd_vel_timeout: 0.5

publish_limited_velocity: false

velocity_rolling_window_size: 10

publish_rate: 50.0

# Velocity limits

linear.x.has_velocity_limits: false

linear.x.has_acceleration_limits: false

linear.x.has_jerk_limits: false

linear.x.max_velocity: 0.0

linear.x.min_velocity: 0.0

linear.x.max_acceleration: 0.0

linear.x.max_jerk: 0.0

linear.x.min_jerk: 0.0

linear.y.has_velocity_limits: false

linear.y.has_acceleration_limits: false

linear.y.has_jerk_limits: false

linear.y.max_velocity: 0.0

linear.y.min_velocity: 0.0

linear.y.max_acceleration: 0.0

linear.y.max_jerk: 0.0

linear.y.min_jerk: 0.0

angular.z.has_velocity_limits: false

angular.z.has_acceleration_limits: false

angular.z.has_jerk_limits: false

angular.z.max_velocity: 0.0

angular.z.min_velocity: 0.0

angular.z.max_acceleration: 0.0

angular.z.min_acceleration: 0.0

angular.z.max_jerk: 0.0

angular.z.min_jerk: 0.0

Save the file, and close it.

Create a new file inside the config/rosmaster_x3 directory called:

ros2_controllers_template.yamlAdd this code, and save the file.

This file will help us substitute a prefix should we desire.

Update CMakeLists.txt with the new config folder.

Update yahboom_rosmaster_desciption/launch/robot_state_publisher.py with the relevant part to handle the finding and replacing.

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_description/launchSave the file, and close it.

Create the XACRO/URDF Files

Next, we need to add three essential XACRO/URDF files that bridge Gazebo simulation with ROS 2 Control. These files work alongside your controller configuration YAML file to create a complete control system.

gazebo_sim_ros2_control.urdf.xacro

Open a terminal window.

Type the following command:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_description/urdf/mkdir controlCreate the file named:

gazebo_sim_ros2_control.urdf.xacroAdd this code.

Save the file, and close it.

rosmaster_x3_ros2_control.urdf.xacro:

Open a terminal window.

Type the following command:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_description/urdf/controlCreate the file named:

rosmaster_x3_ros2_control.urdf.xacroAdd this code.

Save the file, and close it.

velocity_control_plugin.urdf.xacro

One more file to add. Add this one. This plugin is used to send velocity commands to the robot.

<?xml version="1.0"?>

<robot xmlns:xacro="http://www.ros.org/wiki/xacro">

<xacro:macro name="load_velocity_control_plugin" params="use_gazebo">

<xacro:if value="${use_gazebo}">

<gazebo>

<plugin

filename="gz-sim-velocity-control-system"

name="gz::sim::systems::VelocityControl">

<topic>mecanum_drive_controller/cmd_vel</topic>

<initial_linear>0 0 0</initial_linear>

<initial_angular>0 0 0</initial_angular>

</plugin>

</gazebo>

</xacro:if>

</xacro:macro>

</robot>

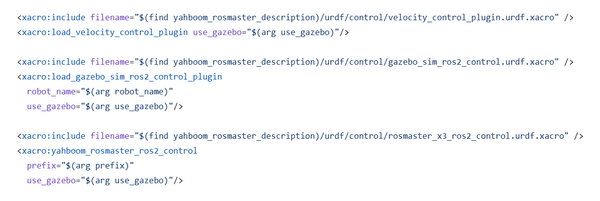

Include the Files in rosmaster_x3.urdf.xacro

Next we need to include these files inside rosmaster_x3.urdf.xacro.

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_description/urdf/robots/Open the rosmaster_x3_urdf.xacro file.

Add this code at the end before the </robot> tag:

<xacro:include filename="$(find yahboom_rosmaster_description)/urdf/control/gazebo_sim_ros2_control.urdf.xacro" />

<xacro:load_gazebo_sim_ros2_control_plugin

robot_name="$(arg robot_name)"

use_gazebo="$(arg use_gazebo)"/>

<xacro:include filename="$(find yahboom_rosmaster_description)/urdf/control/rosmaster_x3_ros2_control.urdf.xacro" />

<xacro:yahboom_rosmaster_ros2_control

prefix="$(arg prefix)"

use_gazebo="$(arg use_gazebo)"/>

Save the file, and close it.

Create the Launch Files

Now let’s create the launch files.

load_ros2_controllers.launch.py

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_bringup/mkdir launchcd launchAdd this code called load_ros2_controllers.launch.py:

#!/usr/bin/env python3

"""

Launch ROS 2 controllers for the mecanum wheel robot.

This script creates a launch description that starts the necessary controllers

for operating the mecanum wheel robot in a specific sequence.

Launched Controllers:

1. Joint State Broadcaster: Publishes joint states to /joint_states

2. Mecanum Drive Controller: Controls the robot's mecanum drive movements via ~/cmd_vel

:author: Addison Sears-Collins

:date: November 20, 2024

"""

from launch import LaunchDescription

from launch.actions import ExecuteProcess, RegisterEventHandler, TimerAction

from launch.event_handlers import OnProcessExit

def generate_launch_description():

"""Generate a launch description for sequentially starting robot controllers.

The function creates a launch sequence that ensures controllers are started

in the correct order.

Returns:

LaunchDescription: Launch description containing sequenced controller starts

"""

# Start mecanum drive controller

start_mecanum_drive_controller_cmd = ExecuteProcess(

cmd=['ros2', 'control', 'load_controller', '--set-state', 'active',

'mecanum_drive_controller'],

output='screen'

)

# Start joint state broadcaster

start_joint_state_broadcaster_cmd = ExecuteProcess(

cmd=['ros2', 'control', 'load_controller', '--set-state', 'active',

'joint_state_broadcaster'],

output='screen'

)

# Add delay to joint state broadcaster

delayed_start = TimerAction(

period=20.0,

actions=[start_joint_state_broadcaster_cmd]

)

# Register event handler for sequencing

load_joint_state_broadcaster_cmd = RegisterEventHandler(

event_handler=OnProcessExit(

target_action=start_joint_state_broadcaster_cmd,

on_exit=[start_mecanum_drive_controller_cmd]))

# Create and populate the launch description

ld = LaunchDescription()

# Add the actions to the launch description in sequence

ld.add_action(delayed_start)

ld.add_action(load_joint_state_broadcaster_cmd)

return ld

Save the file, and close it.

Update CMakeLists.txt for the yahboom_rosmaster_bringup package to include the new launch folder. Make sure the block below, looks like this:

# Copy necessary files to designated locations in the project

install (

DIRECTORY launch

DESTINATION share/${PROJECT_NAME}

)

Create YAML File for ROS 2 Bridge

We now need to create a YAML file that bridges between ROS 2 and Gazebo topics. This file will specify how sensor data (camera info, point clouds, IMU data, and LIDAR data) should be translated between the Gazebo simulation environment and the ROS 2 ecosystem. The configuration ensures that simulated sensor data can be used by ROS 2 nodes for perception and planning tasks.

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_gazebo/mkdir configUpdate CMakeLists.txt with the new config folder.

# Copy necessary files to designated locations in the project

install (

DIRECTORY config

DESTINATION share/${PROJECT_NAME}

)

Create a file called ros_gz_bridge.yaml inside the config folder.

Add this code.

# RGBD Camera topics

- ros_topic_name: "cam_1/color/camera_info"

gz_topic_name: "cam_1/camera_info"

ros_type_name: "sensor_msgs/msg/CameraInfo"

gz_type_name: "gz.msgs.CameraInfo"

direction: GZ_TO_ROS

lazy: true # Determines whether connections are created immediately at startup (when false) or only when data is actually requested by a subscriber (when true), helping to conserve system resources at the cost of potential initial delays in data flow.

- ros_topic_name: "cam_1/depth/color/points"

gz_topic_name: "cam_1/points"

ros_type_name: "sensor_msgs/msg/PointCloud2"

gz_type_name: "gz.msgs.PointCloudPacked"

direction: GZ_TO_ROS

lazy: true

# LIDAR configuration

- ros_topic_name: "scan"

gz_topic_name: "scan"

ros_type_name: "sensor_msgs/msg/LaserScan"

gz_type_name: "gz.msgs.LaserScan"

direction: GZ_TO_ROS

lazy: false

# IMU configuration

- ros_topic_name: "imu/data"

gz_topic_name: "imu/data"

ros_type_name: "sensor_msgs/msg/Imu"

gz_type_name: "gz.msgs.IMU"

direction: GZ_TO_ROS

lazy: false

# Sending velocity commands from ROS 2 to Gazebo

- ros_topic_name: "mecanum_drive_controller/cmd_vel"

gz_topic_name: "mecanum_drive_controller/cmd_vel"

ros_type_name: "geometry_msgs/msg/TwistStamped"

gz_type_name: "gz.msgs.Twist"

direction: ROS_TO_GZ

lazy: false

Save the file, and close it.

Create an RViz configuration file.

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_gazebo/mkdir rvizAdd this file to the rviz folder: yahboom_rosmaster_gazebo_sim.rviz.

Update CMakeLists.txt

Go to the CMakeLists.txt.

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_gazebo/Make sure it has this block of code:

# Copy necessary files to designated locations in the project

install (

DIRECTORY launch models worlds rviz

DESTINATION share/${PROJECT_NAME}

)

gedit CMakeLists.txtSave the file, and close it.

yahboom_rosmaster.gazebo.launch.py

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_gazebo/launchCreate a new file called yahboom_rosmaster.gazebo.launch.py.

Add this code.

Save the file, and close it.

Create a Bash Script for Quick Launching

Create a bash script to launch multiple launch files:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_bringup/mkdir scriptsAdd a bash file called: rosmaster_x3_gazebo.sh.Add this code.

Save the file, and close it.

Make it executable.

sudo chmod +x rosmaster_x3_gazebo.shI will add an alias for quick launch:

echo "alias x3='bash ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_bringup/scripts/rosmaster_x3_gazebo.sh'" >> ~/.bashrc && source ~/.bashrcMake sure to update CMakeLists.txt for the yahboom_rosmaster_bringup folder.

Build the Workspace

Now let’s build the package. Open a terminal window, and type:

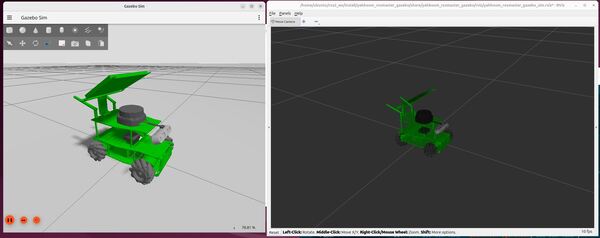

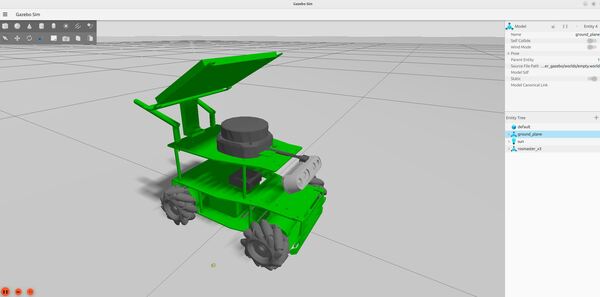

buildLaunch Everything and Move the Robot

Now let’s launch everything.

Open a terminal window, and type the following command:

x3or

bash ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_bringup/scripts/rosmaster_x3_gazebo.shTo change the default camera view in Gazebo, we will need to use the /gui/move_to/pose service (found via gz service -l):

gz service -s /gui/move_to/pose --reqtype gz.msgs.GUICamera --reptype gz.msgs.Boolean --timeout 2000 --req "pose: {position: {x: 0.0, y: -2.0, z: 2.0} orientation: {x: -0.2706, y: 0.2706, z: 0.6533, w: 0.6533}}"I added this command directly to the bash startup script.

Be patient. It can take up to 60 seconds to load everything.

To move the robot, type this:

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{header: {stamp: {sec: $(date +%s), nanosec: 0}, frame_id: ''}, twist: {linear: {x: 0.0, y: 0.1, z: 0.0}, angular: {x: 0.0, y: 0.0, z: 0.0}}}"

Press CTRL + C.

Now stop the robot:

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{header: {stamp: {sec: $(date +%s), nanosec: 0}, frame_id: ''}, twist: {linear: {x: 0.0, y: 0.0, z: 0.0}, angular: {x: 0.0, y: 0.0, z: 0.0}}}"

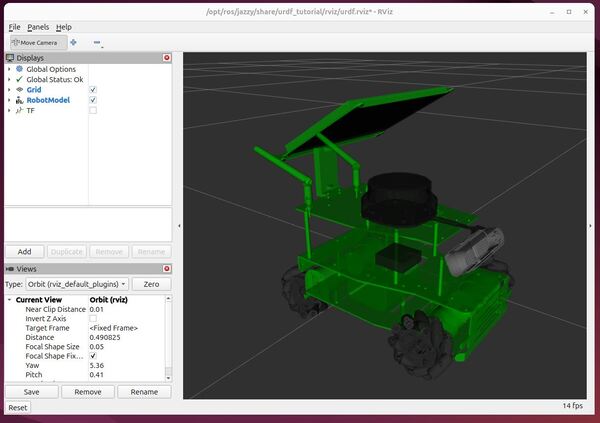

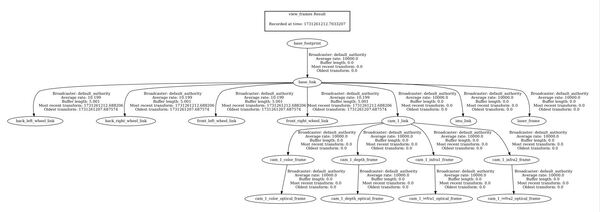

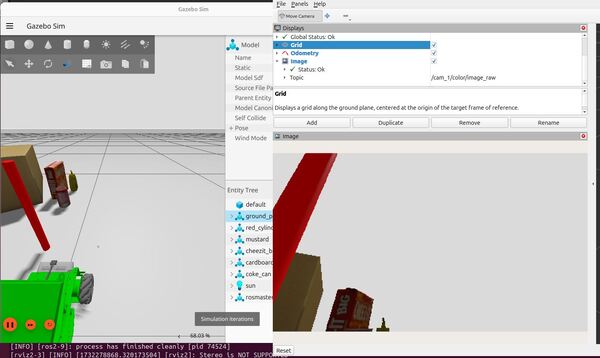

Here is the output using the empty.world file.

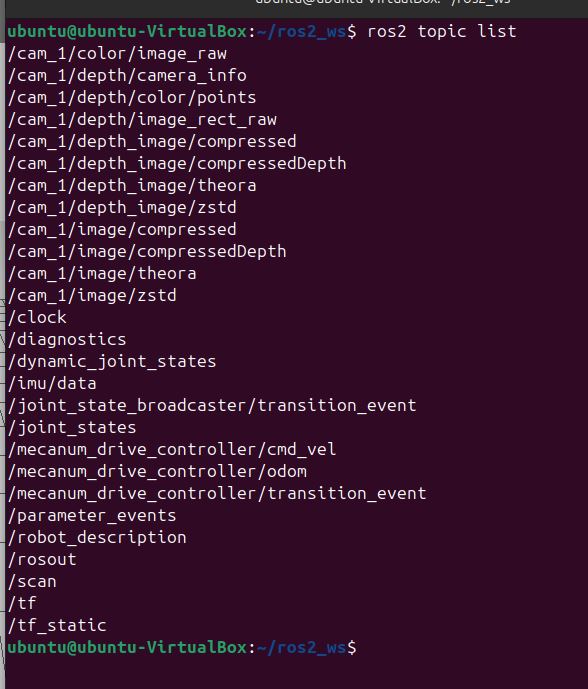

To see a list of active topics, type:

ros2 topic list

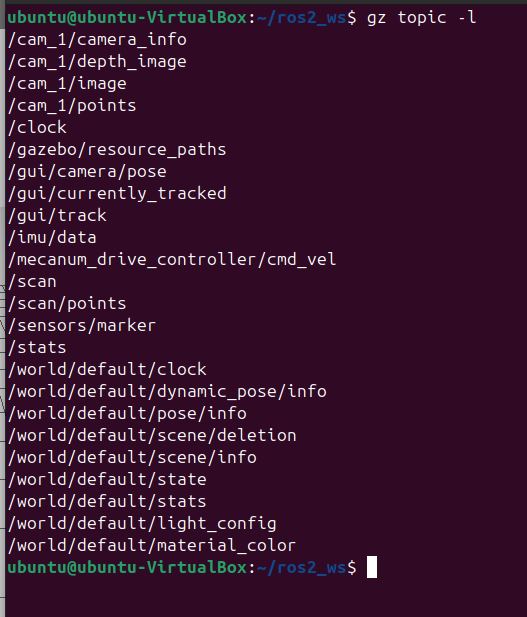

To see a list of active topics, type:

gz topic -l

To see the options, you can type:

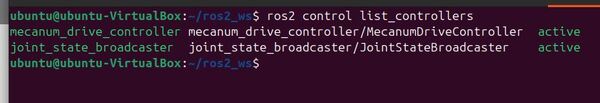

gz topic --helpSee the active controllers:

ros2 control list_controllers

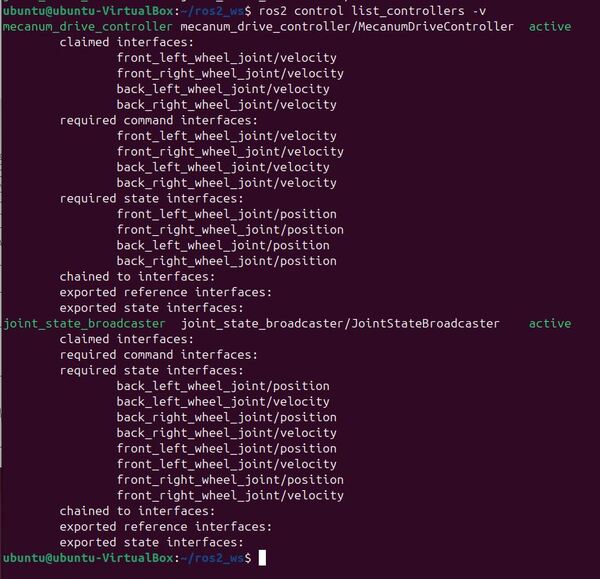

To get more information, type:

ros2 control list_controllers -v

Let’s see the information coming across some of the ROS 2 topics.

ros2 topic echo /mecanum_drive_controller/cmd_vel displays the velocity commands sent to the robot, telling it how fast and in which direction to move.

ros2 topic echo /joint_states shows the current positions (in radians) and velocities (in radians per second) of the robot’s wheels.

ros2 topic echo/mecanum_drive_controller/odom provides information about the robot’s estimated position, orientation, linear, and angular velocity based on wheel rotation data.

ros2 topic echo /imu/data outputs readings from the robot’s inertial measurement unit (IMU), which includes data about angular velocity and linear acceleration.

ros2 topic echo /scan displays data from the robot’s laser scanner or LiDAR, providing information about the distances to obstacles in the environment.

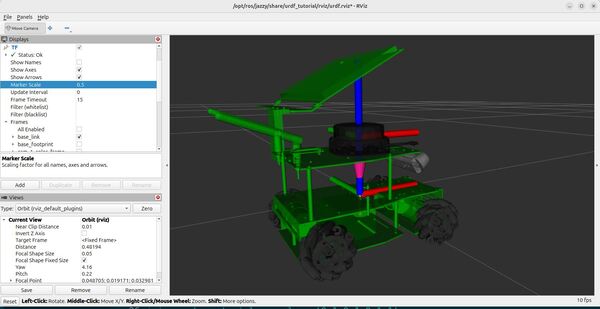

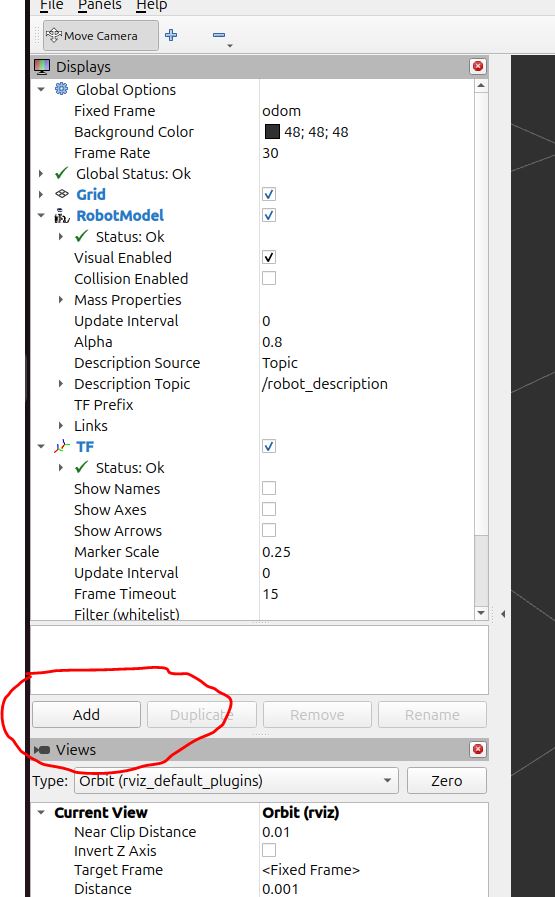

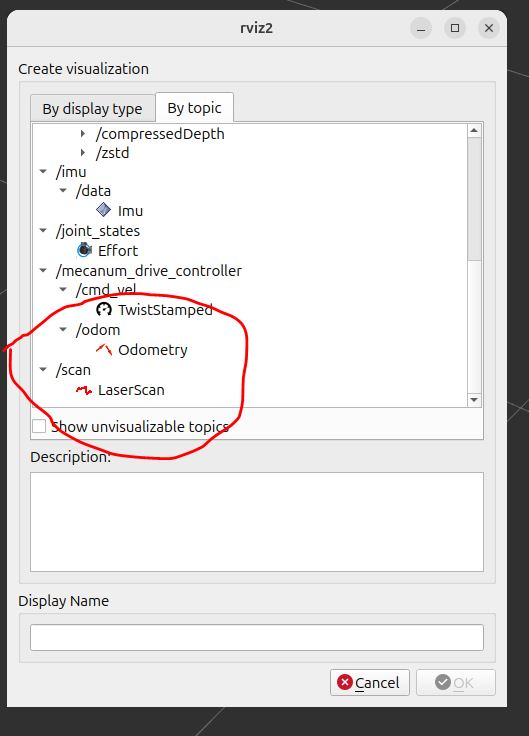

You can see the /scan data, if you go to RViz, and click the Add button in the Displays panel on the left.

Click the “By topic” tab, and scroll down to LaserScan. Click on that, and click OK to add it.

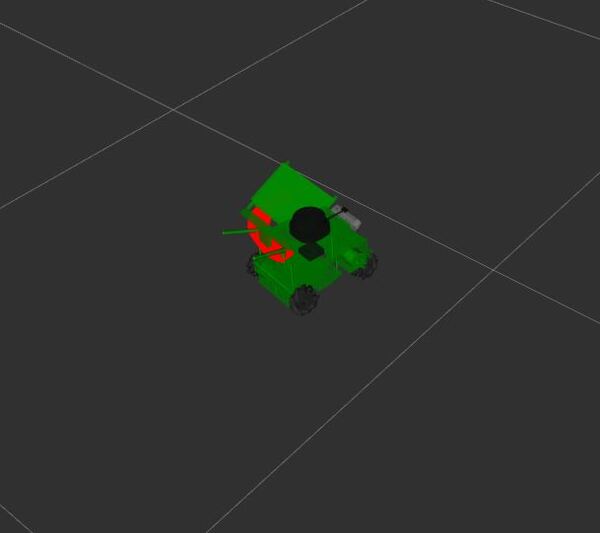

You can see the LIDAR scan data.

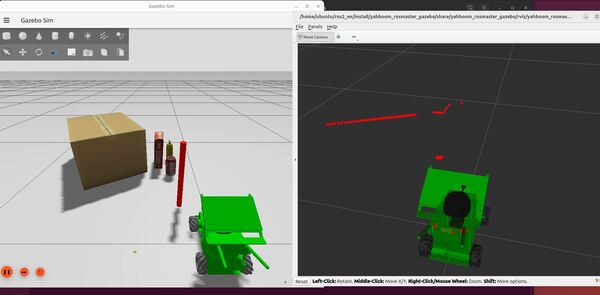

Here is what things look like when launching the robot in pick_and_place_demo.world. You can see how the scans match with the objects in the environment.

To see the frequency of publishing, type:

ros2 topic hz /scanTo get information about this topic, type:

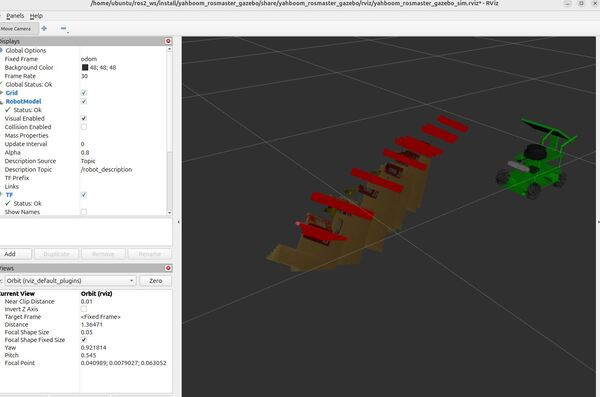

ros2 topic info /scanI also recommend checking out /cam_1/color/image_raw in RViz to see if the camera transformation for the cam_1_depth_optical_frame is oriented correctly. Compare Gazebo (on the left) to what the camera is actually seeing in ROS 2 (on the right).

Click the “By topic” tab.

Click /cam_1 -> /depth -> /color -> /points -> PointCloud2 so you can see the point cloud in RViz.

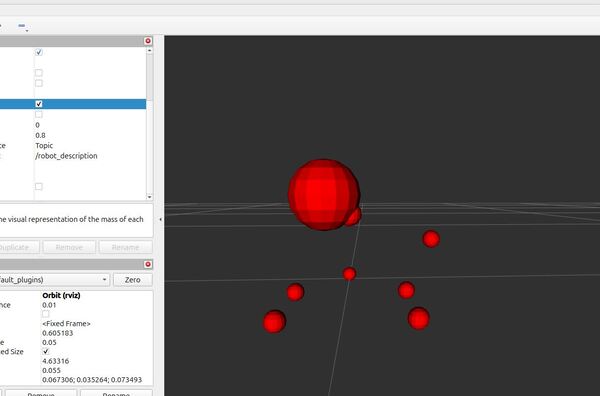

While the point cloud data shows perfectly fine in my robotic arm applications with Gazebo and RViz, I had big time rendering issues when trying to get it to display in RViz. You can see these issues in the image below. I suspect it is related to the amount of memory on my virtual machine given all the sensor data that is flowing through from Gazebo to ROS 2.

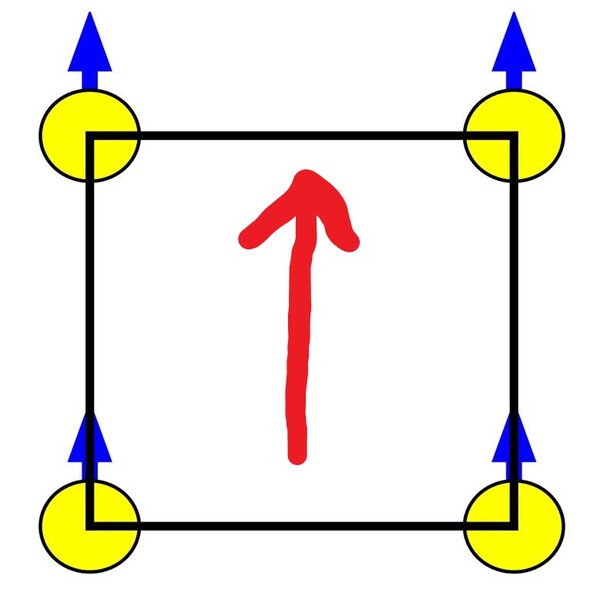

If you want to move the robot forward, type this:

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{

header: {

stamp: {sec: `date +%s`, nanosec: 0},

frame_id: ''

},

twist: {

linear: {

x: 0.05,

y: 0.0,

z: 0.0

},

angular: {

x: 0.0,

y: 0.0,

z: 0.0

}

}

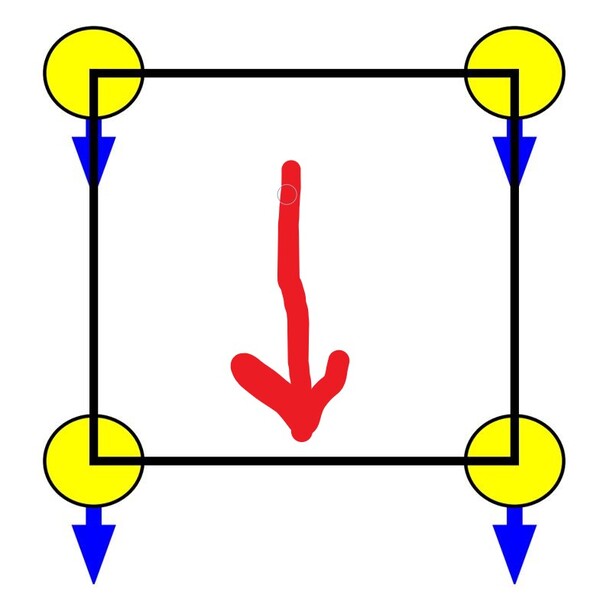

}"If you want to move the robot backward, type this:

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{

header: {

stamp: {sec: `date +%s`, nanosec: 0},

frame_id: ''

},

twist: {

linear: {

x: -0.05,

y: 0.0,

z: 0.0

},

angular: {

x: 0.0,

y: 0.0,

z: 0.0

}

}

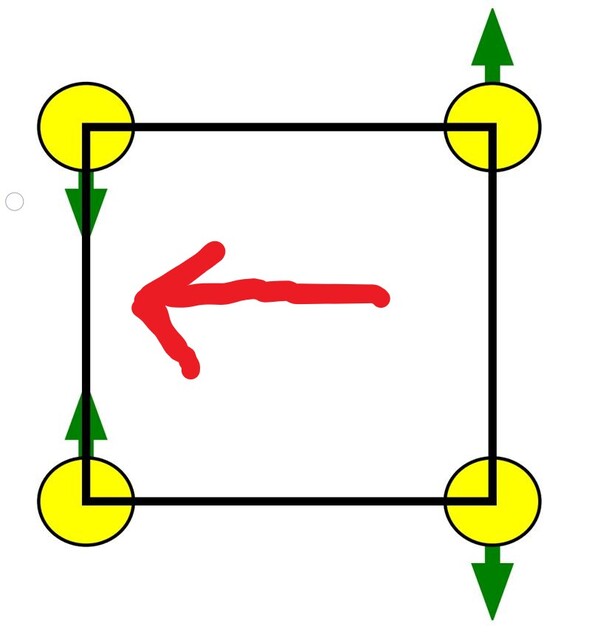

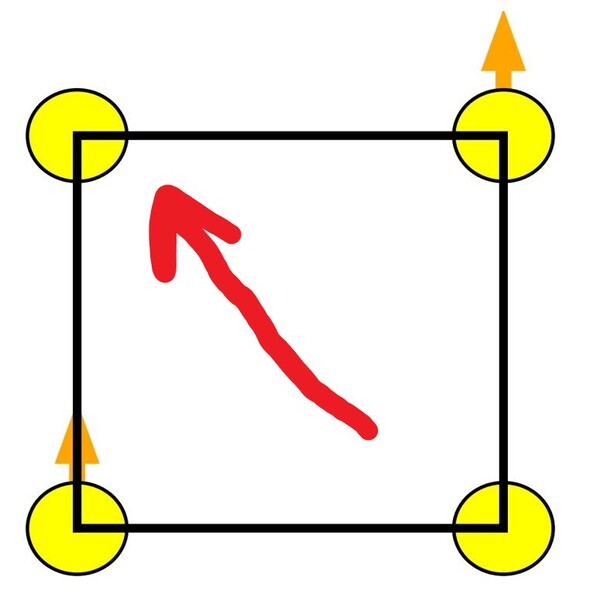

}"If you want to move the robot to the left, type this:

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{

header: {

stamp: {sec: `date +%s`, nanosec: 0},

frame_id: ''

},

twist: {

linear: {

x: 0.0,

y: 0.05,

z: 0.0

},

angular: {

x: 0.0,

y: 0.0,

z: 0.0

}

}

}"If you want to move the robot to the right, type this:

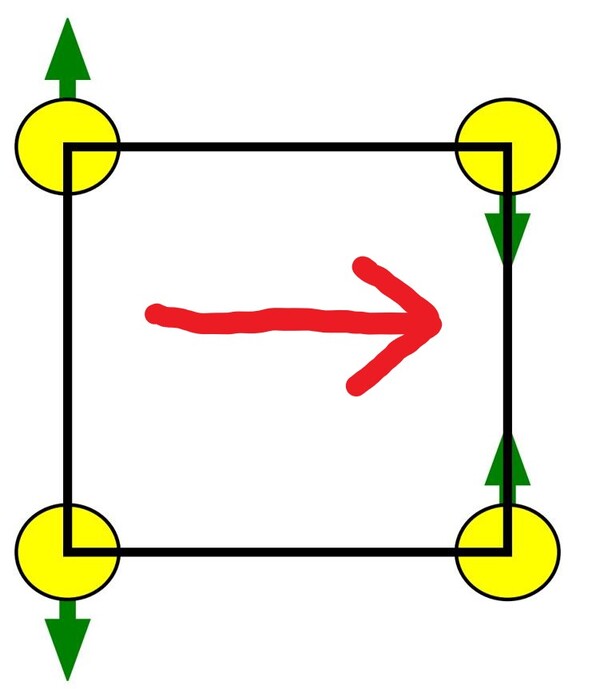

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{

header: {

stamp: {sec: `date +%s`, nanosec: 0},

frame_id: ''

},

twist: {

linear: {

x: 0.0,

y: -0.05,

z: 0.0

},

angular: {

x: 0.0,

y: 0.0,

z: 0.0

}

}

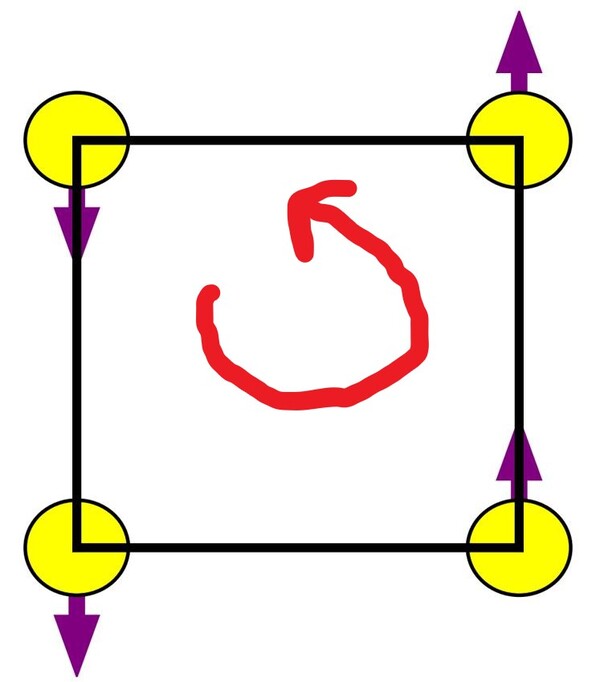

}"If you want to rotate the robot in place clockwise, type this:

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{

header: {

stamp: {sec: `date +%s`, nanosec: 0},

frame_id: ''

},

twist: {

linear: {

x: 0.0,

y: 0.0,

z: 0.0

},

angular: {

x: 0.0,

y: 0.0,

z: -0.4

}

}

}"Counter-clockwise:

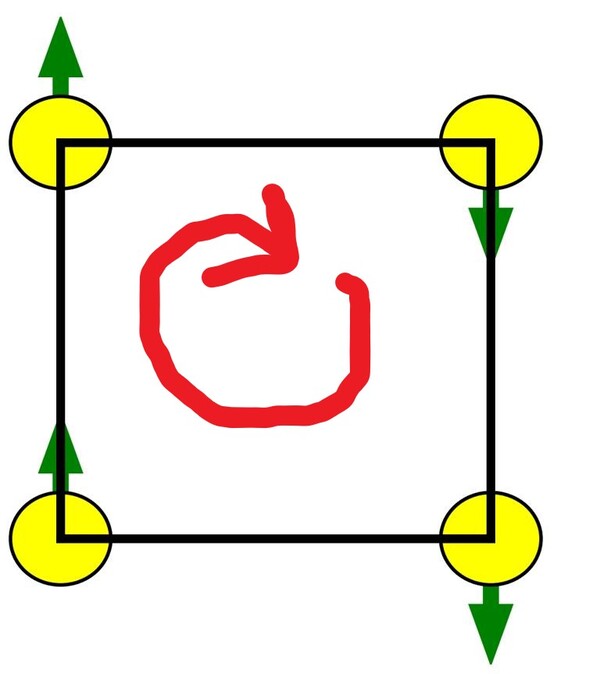

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{

header: {

stamp: {sec: `date +%s`, nanosec: 0},

frame_id: ''

},

twist: {

linear: {

x: 0.0,

y: 0.0,

z: 0.0

},

angular: {

x: 0.0,

y: 0.0,

z: 0.4

}

}

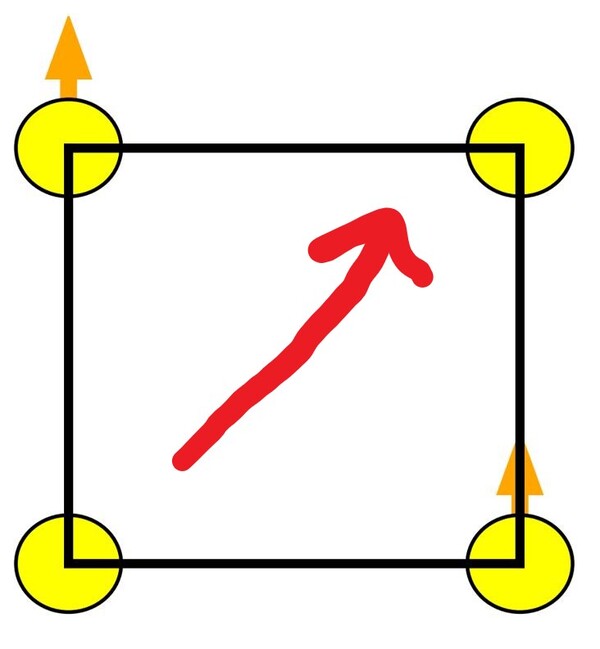

}"Diagonal forward-left:

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{

header: {

stamp: {sec: `date +%s`, nanosec: 0},

frame_id: ''

},

twist: {

linear: {

x: 0.05,

y: 0.05,

z: 0.0

},

angular: {

x: 0.0,

y: 0.0,

z: 0.0

}

}

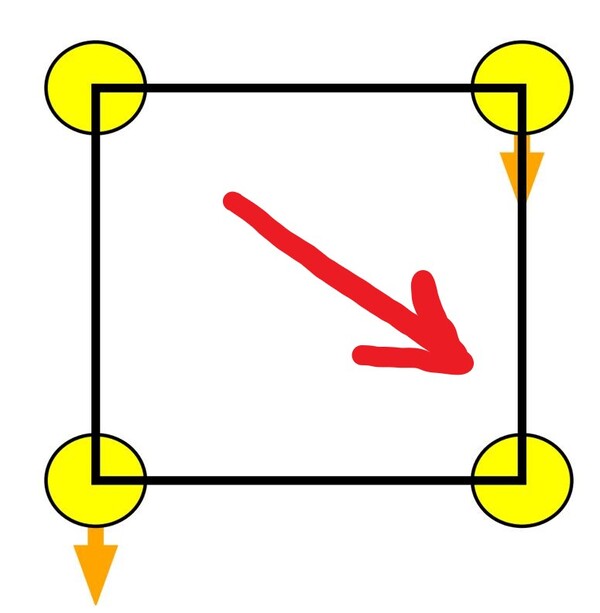

}"Diagonal forward-right:

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{

header: {

stamp: {sec: `date +%s`, nanosec: 0},

frame_id: ''

},

twist: {

linear: {

x: 0.05,

y: -0.05,

z: 0.0

},

angular: {

x: 0.0,

y: 0.0,

z: 0.0

}

}

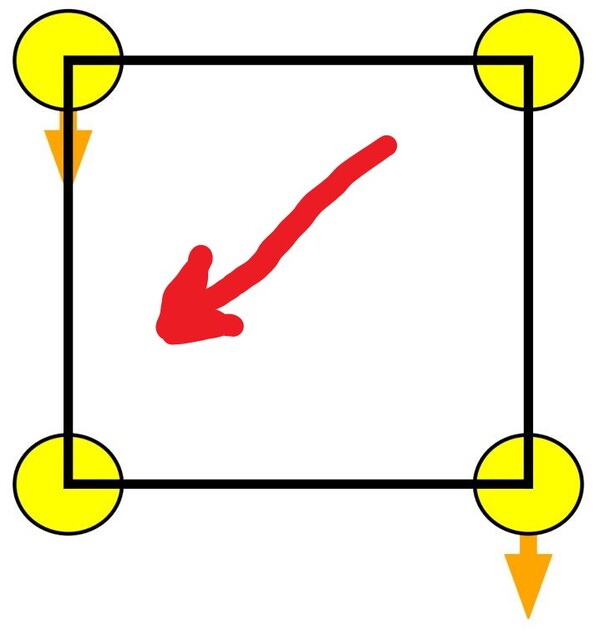

}"Diagonal backward left:

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{

header: {

stamp: {sec: `date +%s`, nanosec: 0},

frame_id: ''

},

twist: {

linear: {

x: -0.05,

y: 0.05,

z: 0.0

},

angular: {

x: 0.0,

y: 0.0,

z: 0.0

}

}

}"Diagonal backward-right:

ros2 topic pub /mecanum_drive_controller/cmd_vel geometry_msgs/msg/TwistStamped "{

header: {

stamp: {sec: `date +%s`, nanosec: 0},

frame_id: ''

},

twist: {

linear: {

x: -0.05,

y: -0.05,

z: 0.0

},

angular: {

x: 0.0,

y: 0.0,

z: 0.0

}

}

}"Press CTRL + C to close everything out.

Control the Robot with Code

Let’s create a ROS 2 node that can make the robot move repeatedly in a square pattern.

Let’s start by creating a program.

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_system_tests/srcAdd a file called square_mecanum_controller.cpp.

/**

* @file square_mecanum_controller.cpp

* @brief Controls a mecanum wheeled robot to move in a square pattern

*

* This program creates a ROS 2 node that publishes velocity commands to make a

* mecanum wheeled robot move in a square pattern. It takes advantage of the

* omnidirectional capabilities of mecanum wheels to move in straight lines

* along both X and Y axes.

*

* Publishing Topics:

* /mecanum_drive_controller/cmd_vel (geometry_msgs/TwistStamped):

* Velocity commands for the robot's motion

*

* @author Addison Sears-Collins

* @date November 22, 2024

*/

#include <chrono>

#include <functional>

#include <memory>

#include "rclcpp/rclcpp.hpp"

#include "geometry_msgs/msg/twist_stamped.hpp"

using namespace std::chrono_literals;

class SquareController : public rclcpp::Node

{

public:

SquareController() : Node("square_controller")

{

// Create publisher for velocity commands

publisher_ = this->create_publisher<geometry_msgs::msg::TwistStamped>(

"/mecanum_drive_controller/cmd_vel", 10);

// Create timer that calls our control function every 200ms

timer_ = this->create_wall_timer(

200ms, std::bind(&SquareController::timer_callback, this));

// Initialize variables

current_side_ = 0; // Which side of square we're on (0-3)

elapsed_time_ = 0.0; // Time spent on current side

robot_speed_ = 0.2; // Speed in meters/second

side_length_ = 2.0; // Length of each side in meters

time_per_side_ = side_length_ / robot_speed_; // Time to complete one side

}

// Send stop command when node is shut down

~SquareController()

{

stop_robot();

}

// Function to stop the robot

void stop_robot()

{

auto msg = geometry_msgs::msg::TwistStamped();

msg.header.stamp = this->now();

publisher_->publish(msg); // All velocities default to 0

}

private:

void timer_callback()

{

// Create velocity command message

auto msg = geometry_msgs::msg::TwistStamped();

msg.header.stamp = this->now();

// Set velocity based on which side of the square we're on

switch (current_side_) {

case 0: // Moving forward (positive X)

msg.twist.linear.x = robot_speed_;

break;

case 1: // Moving left (positive Y)

msg.twist.linear.y = robot_speed_;

break;

case 2: // Moving backward (negative X)

msg.twist.linear.x = -robot_speed_;

break;

case 3: // Moving right (negative Y)

msg.twist.linear.y = -robot_speed_;

break;

}

// Publish the velocity command

publisher_->publish(msg);

// Update time tracking

elapsed_time_ += 0.2; // 200ms in seconds

// Check if we've completed the current side

if (elapsed_time_ >= time_per_side_) {

elapsed_time_ = 0.0;

current_side_ = (current_side_ + 1) % 4; // Move to next side (0-3)

}

}

// Class member variables

rclcpp::TimerBase::SharedPtr timer_;

rclcpp::Publisher<geometry_msgs::msg::TwistStamped>::SharedPtr publisher_;

int current_side_;

double elapsed_time_;

double side_length_;

double robot_speed_;

double time_per_side_;

};

int main(int argc, char * argv[])

{

rclcpp::init(argc, argv);

auto node = std::make_shared<SquareController>();

rclcpp::spin(node);

rclcpp::shutdown();

return 0;

}

Update your CMakeLists.txt file and package.xml file.

buildLaunch your robot in Gazebo.

x3Now run your node:

ros2 run yahboom_rosmaster_system_tests square_mecanum_controllerYour robot will move in a pattern that resembles a square.

That’s it! You made it to the end! Congratulations!

Keep building!