In the previous tutorial, you learned how to leverage ROS 2 Control to move the robotic arm manually by sending raw joint commands to the robot. This exercise is great for learning, but it is not how things are done in a real-world robotics project.

In this tutorial, I will show you how to set up a ROS 2 software called MoveIt 2 to send those commands. MoveIt 2 is a powerful and flexible motion planning framework for robotic manipulators.

Using MoveIt 2 instead of sending manual commands through a terminal window allows for more sophisticated, efficient, and safer motion planning, taking into account obstacles, joint limits, and other constraints automatically.

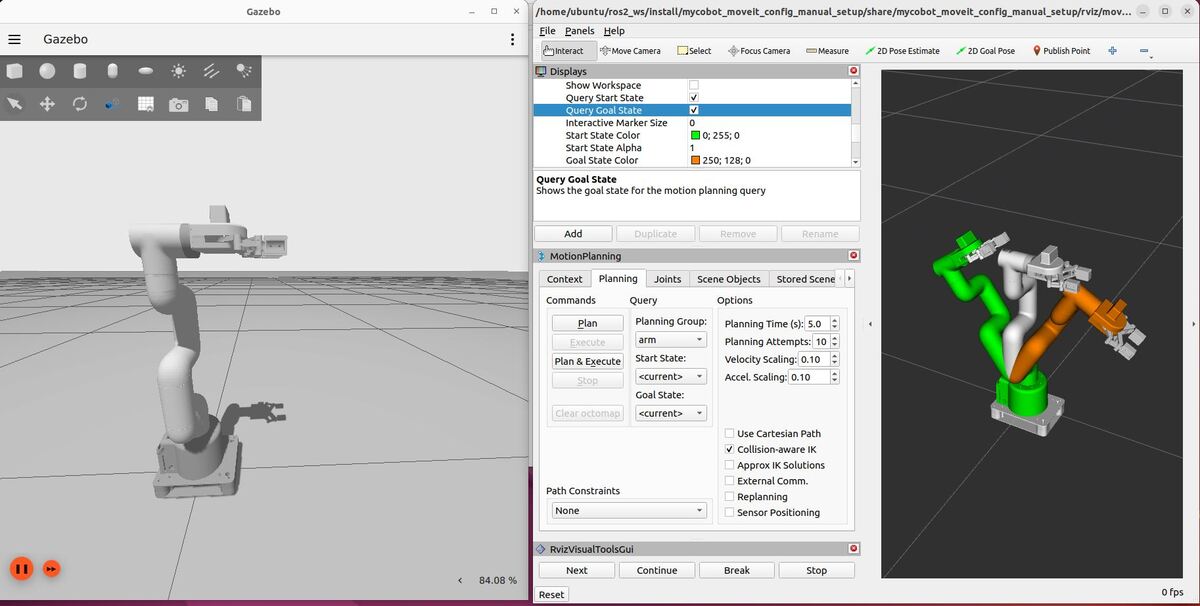

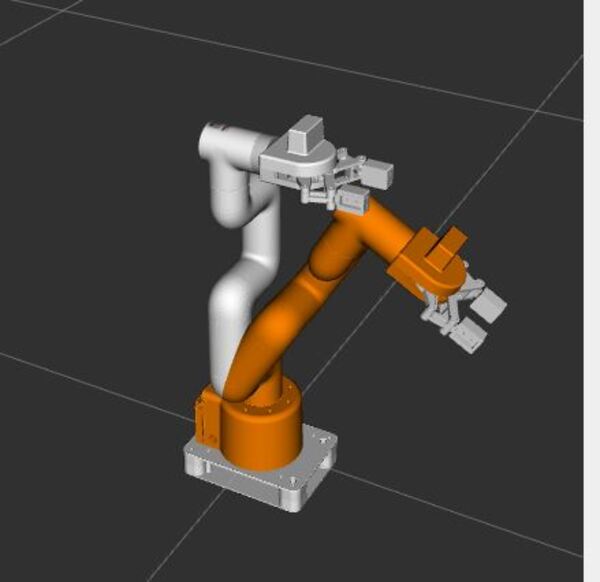

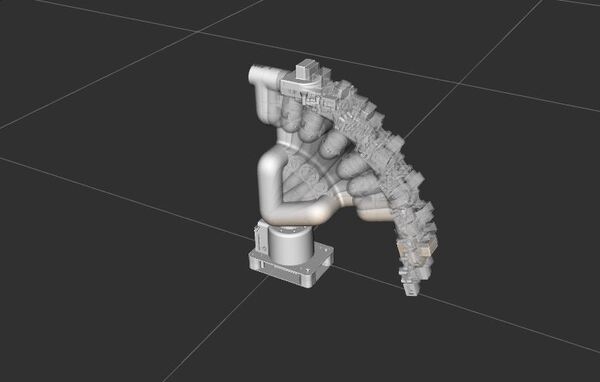

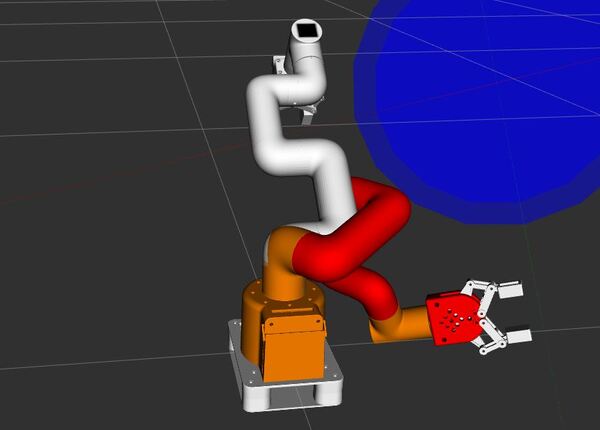

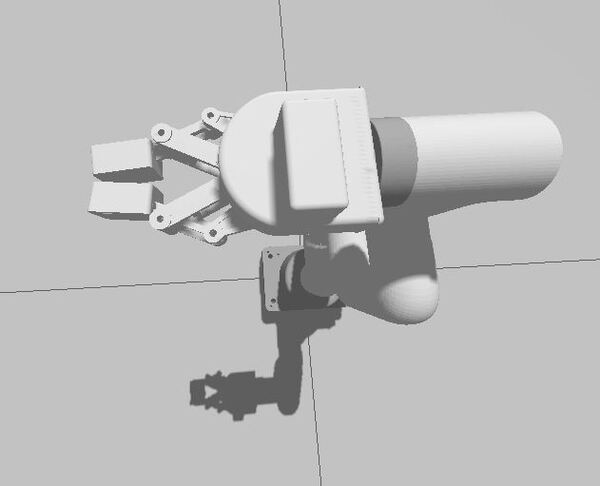

By the end of this tutorial, you will be able to create this:

Prerequisites

- You have completed my tutorial “How to Control a Robotic Arm Using ROS 2 Control and Gazebo.”

- I am working with ROS 2 Iron. Other ROS 2 distributions follow this similar process.

All my code for this project is located here on GitHub.

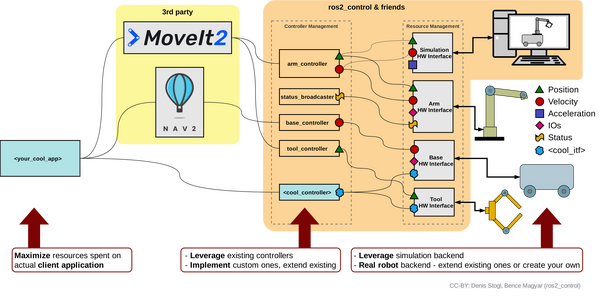

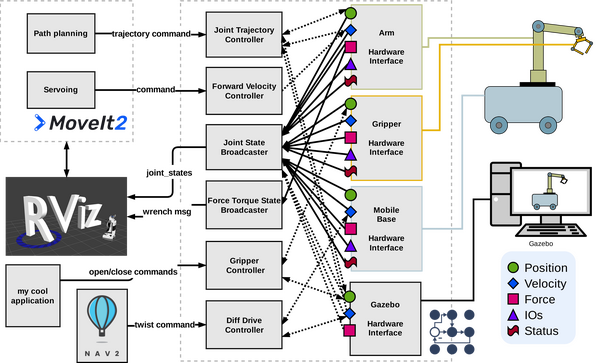

How MoveIt 2 Works with ROS 2 Control

Before we dive into the tutorial, let me explain how MoveIt 2 works with ROS 2 Control by walking through a common real-world use case.

Suppose you have a mobile manipulator, which consists of a wheeled robot base with a robotic arm attached to it.

You want to develop an application that performs a pick and place task.

Your cool app starts by telling MoveIt 2, “Pick up the object at location A and place it at location B.”

MoveIt 2, with help from Nav 2 (the navigation software stack for ROS 2), plans out the detailed movements needed – reaching for the object, grasping it, lifting it, moving to the new location, and placing it down.

These plans are sent to ROS 2 Control’s Controller Management system, which has specific controllers for the arm, gripper, and base.

The arm controller (e.g. JointTrajectoryController), for example, breaks down the plan into specific joint movements and sends these to the Resource Management system.

The Resource Management system communicates these commands through hardware interfaces to the actual robot parts (or their simulated versions).

As the robot executes the pick and place task, sensors in its joints and gripper send back information about position, force, and status.

This feedback flows back up through the system, allowing your app and MoveIt 2 to monitor progress and make adjustments if needed.

This setup allows you to focus on defining high-level tasks while MoveIt 2 and ROS 2 Control handle the complex details of robot control and movement coordination.

Now let’s look at another diagram which shows the specific controller and topic names. This gives us a more detailed view of how MoveIt 2, ROS 2 Control, and the robot components interact:

Your cool application initiates the process, defining tasks like pick and place operations.

MoveIt 2 contains a path planning component that generates trajectory commands. These commands are sent to the Joint Trajectory Controller, which manages the arm’s movements.

For mobile robots, Nav 2 can send twist commands to the Diff Drive Controller, controlling the base’s motion.

The system includes several specialized controllers:

- Joint Trajectory Controller for arm movements

- Forward Velocity Controller for speed control (optional…only needed if you need direct velocity control for high-speed operations)

- Gripper Controller for end-effector actions (e.g. open and close gripper)

- Diff Drive Controller for mobile base navigation

These controllers communicate with Hardware Interfaces for the arm, gripper, mobile base, and the Gazebo simulation.

As the robot operates, sensor data flows back through these Hardware Interfaces. This information includes position, velocity, force, and status updates.

The Joint State Broadcaster and Force Torque State Broadcaster collect this feedback data.

This state information (e.g. joint angles) is then forwarded to RViz for visualization and to MoveIt 2 for real-time monitoring and adjustment.

Create a Package

Now that you understand how all the pieces work together on a high level, let’s start setting up our robot with MoveIt 2.

Create a new package called mycobot_moveit_config_manual_setup:

cd ~/ros2_ws/src/mycobot_ros2/ros2 pkg create --build-type ament_cmake --license BSD-3-Clause mycobot_moveit_config_manual_setupcd mycobot_moveit_config_manual_setupFill in the package.xml file with your relevant information.

gedit package.xml

<?xml version="1.0"?>

<?xml-model href="http://download.ros.org/schema/package_format3.xsd" schematypens="http://www.w3.org/2001/XMLSchema"?>

<package format="3">

<name>mycobot_moveit_config_manual_setup</name>

<version>0.0.0</version>

<description>Contains the files that enable the robot to integrate with Move It 2.</description>

<maintainer email="automaticaddison@todo.com">Automatic Addison</maintainer>

<license>BSD-3-Clause</license>

<buildtool_depend>ament_cmake</buildtool_depend>

<depend>moveit_ros_planning_interface</depend>

<depend>moveit_ros_move_group</depend>

<depend>moveit_kinematics</depend>

<depend>moveit_planners_ompl</depend>

<depend>moveit_ros_visualization</depend>

<depend>rviz2</depend>

<depend>rviz_visual_tools</depend>

<exec_depend>moveit_simple_controller_manager</exec_depend>

<exec_depend>moveit_configs_utils</exec_depend>

<exec_depend>pilz_industrial_motion_planner</exec_depend>

<test_depend>ament_lint_auto</test_depend>

<test_depend>ament_lint_common</test_depend>

<export>

<build_type>ament_cmake</build_type>

</export>

</package>

Save the file, and close it.

In a real robotics project, the naming convention for this package would be:

<robot_name>_moveit_config

Create the Configuration Files

Let’s create configuration files that will enable us to tailor our robot for MoveIt 2. We’ll do this process manually first (recommended), and then we will work through how to do this using the MoveIt Setup Assistant.

You can see some official examples of configuration files for other robots on the MoveIt resources ROS 2 GitHub.

Create the Config Folder

MoveIt 2 requires configuration files to go into the config folder of the package you created.

Open a terminal window, and type:

cd ~/ros2_ws/src/mycobot_ros2/mycobot_moveit_config_manual_setup/mkdir config && cd configAdd SRDF

The first thing we need to do is set up the SRDF File.

Semantic Robot Description Format (SRDF) is a file that tells MoveIt how your robot prefers to move and interact with its environment. It is a supplement to the URDF file.

The SRDF adds semantic (meaning-based) information to the robot’s description that isn’t included in the URDF (Unified Robot Description Format). This file includes things like:

- Naming groups of joints or links (e.g., “arm”, “gripper”)

- Defining default robot poses (e.g., “home”, “closed”, etc.)

- Which parts of the robot should be checked for colliding with each other during movement.

- We need to do this step because checking every possible collision between all parts of the robot for each planned movement can be time-consuming, especially for complex robots.

cd ~/ros2_ws/src/mycobot_ros2/mycobot_moveit_config_manual_setup/config/gedit mycobot_280.srdfAdd this code.

Save the file, and close it.

Let’s go through each section briefly:

- <group name=”arm”>: This section defines a group named “arm” that includes a list of joints. The joints listed in this group represent the robot arm.

- <group name=”gripper”>: This section defines a group named “gripper” that includes a list of joints related to the robot’s gripper or end-effector.

- <group_state name=”home” group=”arm”>: This section defines a named state called “home” for the “arm” group. It specifies the joint values for the arm joints when the robot is in its home position.

- <group_state name=”open” group=”gripper”>: This section defines a named state called “open” for the “gripper” group, specifying the joint value for the gripper when it is in its open position.

- <disable_collisions>: This section is used to disable collision checking between specific pairs of links. Each <disable_collisions> entry specifies two links and the reason for disabling collision checking between them. The reasons can be “Adjacent” (for adjacent links in the robotic arm), “Never” (for links that will never collide), or “Default” (for links that are not expected to collide in most configurations).

Add Initial Positions

Now let’s create a configuration file for the initial positions.

cd ~/ros2_ws/src/mycobot_ros2/mycobot_moveit_config_manual_setup/config/gedit initial_positions.yamlAdd this code.

# Default initial position for each movable joint

initial_positions:

link1_to_link2: 0.0

link2_to_link3: 0.0

link3_to_link4: 0.0

link4_to_link5: 0.0

link5_to_link6: 0.0

link6_to_link6flange: 0.0

gripper_controller: 0.0

Save the file. This code sets the default initial positions for each movable joint.

The initial_positions.yaml file in MoveIt specifies the starting joint positions for motion planning, overriding any default positions set in the URDF. It allows you to set a specific initial configuration for your robot in MoveIt without modifying the URDF, providing flexibility in defining the starting point for motion planning tasks.

Add Joint Limits

gedit joint_limits.yamlAdd this code.

default_velocity_scaling_factor: 1.0

default_acceleration_scaling_factor: 1.0

joint_limits:

link1_to_link2:

has_velocity_limits: true

max_velocity: 2.792527

has_acceleration_limits: true

max_acceleration: 5.0

has_deceleration_limits: true

max_deceleration: -5.0

has_jerk_limits: false

max_jerk: 6.0

link2_to_link3:

has_velocity_limits: true

max_velocity: 2.792527

has_acceleration_limits: true

max_acceleration: 5.0

has_deceleration_limits: true

max_deceleration: -5.0

has_jerk_limits: false

max_jerk: 6.0

link3_to_link4:

has_velocity_limits: true

max_velocity: 2.792527

has_acceleration_limits: true

max_acceleration: 5.0

has_deceleration_limits: true

max_deceleration: -5.0

has_jerk_limits: false

max_jerk: 6.0

link4_to_link5:

has_velocity_limits: true

max_velocity: 2.792527

has_acceleration_limits: true

max_acceleration: 5.0

has_deceleration_limits: true

max_deceleration: -5.0

has_jerk_limits: false

max_jerk: 6.0

link5_to_link6:

has_velocity_limits: true

max_velocity: 2.792527

has_acceleration_limits: true

max_acceleration: 5.0

has_deceleration_limits: true

max_deceleration: -5.0

has_jerk_limits: false

max_jerk: 6.0

link6_to_link6flange:

has_velocity_limits: true

max_velocity: 2.792527

has_acceleration_limits: true

max_acceleration: 5.0

has_deceleration_limits: true

max_deceleration: -5.0

has_jerk_limits: false

max_jerk: 6.0

gripper_controller:

has_velocity_limits: true

max_velocity: 2.792527

has_acceleration_limits: true

max_acceleration: 5.0

has_deceleration_limits: true

max_deceleration: -5.0

has_jerk_limits: false

max_jerk: 300.0

Save the file.

This code sets the velocity and acceleration limits for each joint in our robotic arm, which is used by MoveIt 2 to control the robot’s movements.

Even though we have joint limits for velocity already defined in the URDF, this YAML file allows for overriding. Defining joint limits specific to MoveIt 2 is useful if you want to impose stricter or different limits for particular applications without modifying the URDF. It provides flexibility in adjusting the robot’s behavior for different tasks.

Add Kinematics

gedit kinematics.yamlAdd this code, and save the file.

# Configures how a robot calculates possible positions and movements of its joints

# to reach a desired point. It specifies which mathematical methods (solvers) to use,

# how long to try, and how precise these calculations should be.

arm: # Name of the joint group from the SRDF file

kinematics_solver: kdl_kinematics_plugin/KDLKinematicsPlugin # Plugin used for solving kinematics

kinematics_solver_search_resolution: 0.005 # Granularity (in radians for revolute joints) used by the solver when searching for a solution.

kinematics_solver_timeout: 0.05 # Maximum time the solver will spend trying to find a solution for each planning attempt

position_only_ik: false # If true, the solver will only consider the position of the end effector, not its orientation

kinematics_solver_attempts: 5 # How many times the solver will try to find a solution

# Typically we don't need a complex kinematics solver for the gripper. This is for demonstration purposes only.

gripper:

kinematics_solver: kdl_kinematics_plugin/KDLKinematicsPlugin

kinematics_solver_search_resolution: 0.005

kinematics_solver_timeout: 0.05

position_only_ik: false

kinematics_solver_attempts: 3

arm_with_gripper:

kinematics_solver: kdl_kinematics_plugin/KDLKinematicsPlugin

kinematics_solver_search_resolution: 0.005

kinematics_solver_timeout: 0.05

position_only_ik: false

kinematics_solver_attempts: 5

This file configures how MoveIt 2 calculates possible positions and movements of the robot’s arm to reach a desired point in space. It specifies the mathematical methods, precision, and time limits for these calculations, which MoveIt 2 uses to plan the robot’s movements.

Add Controllers

gedit moveit_controllers.yamlAdd this code, and save the file.

# This file configures the controller management for MoveIt, specifying which controllers are available,

# the joints each controller handles, and the type of interface (e.g. FollowJointTrajectory).

moveit_controller_manager: moveit_simple_controller_manager/MoveItSimpleControllerManager # Specifies the controller manager to use.

# Define the controllers configured using the ROS 2 Control framework.

moveit_simple_controller_manager:

controller_names:

- arm_controller # Name of the controller for the robotic arm.

- grip_controller # Name of the controller for the gripper.

- grip_action_controller # Other controller for the gripper (recommended)

# Configuration for the arm_controller.

arm_controller:

action_ns: follow_joint_trajectory # ROS action namespace to which MoveIt sends trajectory commands.

type: FollowJointTrajectory # Type of the controller interface; follows a joint trajectory.

default: true # This controller is the default for the joints listed below.

joints: # List of joints that the arm_controller manages.

- link1_to_link2

- link2_to_link3

- link3_to_link4

- link4_to_link5

- link5_to_link6

- link6_to_link6flange

# Configuration for the grip_controller.

grip_controller:

action_ns: follow_joint_trajectory

type: FollowJointTrajectory

default: false

joints:

- gripper_controller

# Configuration for the grip_action_controller.

grip_action_controller:

action_ns: gripper_cmd

type: GripperCommand

default: true

joints:

- gripper_controller

This yaml file tells MoveIt 2 how to convert its motion plans into instructions that the ROS 2 controllers can understand and execute on the real (or simulated) robot. Without this, MoveIt 2 wouldn’t know how to make the robot actually move using the ROS 2 control system.

MoveIt 2 sends desired joint positions or trajectories to ROS 2 controllers, which then manage the low-level control of the robot’s motors. This integration allows MoveIt 2 to focus on high-level motion planning while relying on ROS 2 control for the actual execution on the robot hardware.

We see that three ROS 2 controllers are available:

- arm_controller: This is a Joint Trajectory Controller for the robot arm

- grip_controller: This is a Joint Trajectory Controller for the gripper (optional)

- grip_action_controller: This is a Gripper Action Controller for the gripper

We also see a list of joints associated with each controller.

The arm controller uses the “FollowJointTrajectory” action interface. The FollowJointTrajectory action interface is a standardized interface in ROS (Robot Operating System) for controlling the joint positions of a robot over time. It allows MoveIt 2 to send a trajectory, which is a sequence of joint positions and their corresponding timestamps, to the robot’s joint controllers.

How it works:

- MoveIt 2 plans a movement, which results in a series of joint positions over time.

- This plan is packaged into a “trajectory” – think of it as a list of goal positions (in radians) for each joint.

- MoveIt 2 sends this trajectory to the appropriate ROS 2 controller using the FollowJointTrajectory action interface. This interface is like a protocol MoveIt 2 uses to communicate with the controllers.

- The ROS 2 controller receives this trajectory, processes it, and relays it to the hardware interface.

- The hardware interface sends the commands to the microcontroller (e.g. Arduino) to move the robot’s joints accordingly.

Add Cartesian Speed and Acceleration Limits

For motion planning for our robotic arm, we are going to use the Pilz industrial motion planner.

Pilz focuses on simple, predictable movements like straight lines, circles, and direct point-to-point paths. It works by calculating a clear, predefined route for each part of the robot to follow, ensuring smooth and safe motion from start to finish.

Think of Pilz as a careful driver that always takes known, reliable routes rather than trying to find creative shortcuts, making it perfect for precise tasks where you want consistent, controlled movements.

While default planners like OMPL (Open Motion Planning Library) work best for dynamic environments where you can have unexpected obstacles (people moving around, objects left on tabletops and counters, etc.), the structured and predictable nature of Pilz is advantageous in a scenario where the environment is well-structured and controlled.

Open a new file where we will define the Cartesian limits:

gedit pilz_cartesian_limits.yaml

# Defines the Cartesian movement limits for a robotic arm using the Pilz Planner.

# The values are typically determined based on a combination of the robot manufacturer's

# specifications, practical testing, and considerations for the specific tasks the robot will perform.

cartesian_limits:

max_trans_vel: 1.0 # Maximum translational velocity in meters per second. Specifies how fast the end effector can move in space.

max_trans_acc: 2.25 # Maximum translational acceleration in meters per second squared. Determines how quickly the end effector can increase its speed.

max_trans_dec: -5.0 # Maximum translational deceleration in meters per second squared. Specifies how quickly the end effector can decrease its speed.

max_rot_vel: 1.57 # Maximum rotational velocity in radians per second. Controls the speed at which the end effector can rotate.

Save the file, and close it.

This file sets speed and acceleration limits for a robotic arm’s movement in space. It defines how fast and quickly the robot’s end effector can move and rotate in Cartesian space (X, Y, Z direction) to ensure safe and efficient operation within the robot’s workspace.

Why do we need this file if we have already set velocity limits inside the URDF?

The URDF and the MoveIt configuration files serve different purposes when it comes to controlling the robot’s motion. The URDF contains velocity limits for each individual joint, which determine how fast each joint can move. These limits are important for controlling the speed of the individual joints. For example:

<joint name="joint_name" type="revolute">

<limit lower="-2.879793" upper="2.879793" effort="5.0" velocity="2.792527"/>

</joint>

On the other hand, the cartesian_limits in the MoveIt configuration are used to control the speed and motion of the robot’s gripper in 3D space. These limits define how fast the gripper can move and rotate in the world coordinate frame (X, Y, Z axes). The cartesian_limits are essential for planning the overall motion of the robot and ensuring that it moves safely and efficiently when performing tasks.

So, while the URDF velocity limits are important for controlling individual joints, the MoveIt cartesian_limits are still necessary for proper motion planning and control of the gripper’s movement in 3D space.

By the way, in the context of the file name “pilz_cartesian_limits.yaml”, “pilz” refers to Pilz GmbH & Co. KG, a German automation technology company that specializes in safe automation solutions, including robotics.

Add a Planning Pipeline File for the Pilz Industrial Motion Planner

To make all this work, we need to add a file called pilz_industrial_motion_planner_planning_planner.yaml.

Here is an example file from MoveIt’s GitHub.

This file preprocesses the robot’s motion planning request to make sure it meets certain safety and feasibility criteria, like ensuring the robot’s starting position is safe and within allowed limits before planning its movement.

cd ~/ros2_ws/src/mycobot_ros2/mycobot_moveit_config_manual_setup/config/gedit pilz_industrial_motion_planner_planning.yamlAdd this code.

planning_plugin: pilz_industrial_motion_planner/CommandPlanner

request_adapters: >-

default_planner_request_adapters/FixWorkspaceBounds

default_planner_request_adapters/FixStartStateBounds

default_planner_request_adapters/FixStartStateCollision

default_planner_request_adapters/FixStartStatePathConstraints

default_planner_config: PTP

capabilities: >-

pilz_industrial_motion_planner/MoveGroupSequenceAction

pilz_industrial_motion_planner/MoveGroupSequenceService

Save the file, and close it.

Add an RViz Configuration File

Let’s create a file that sets the parameters for visualizing all this in RViz.

cd ~/ros2_ws/src/mycobot_ros2/mycobot_moveit_config_manual_setupmkdir rviz && cd rvizgedit move_group.rvizAdd this code.

Save the file, and close it.

Create a Launch File

Let’s create a launch file.

Open a terminal window, and type:

cd ~/ros2_ws/src/mycobot_ros2/mycobot_moveit_config_manual_setupCreate a new launch folder.

mkdir launch && cd launchgedit move_group.launch.pyAdd this code.

# Author: Addison Sears-Collins

# Date: July 31, 2024

# Description: Launch MoveIt 2 for the myCobot robotic arm

import os

from launch import LaunchDescription

from launch.actions import DeclareLaunchArgument, EmitEvent, RegisterEventHandler

from launch.conditions import IfCondition

from launch.event_handlers import OnProcessExit

from launch.events import Shutdown

from launch.substitutions import LaunchConfiguration

from launch_ros.actions import Node

from launch_ros.substitutions import FindPackageShare

from moveit_configs_utils import MoveItConfigsBuilder

import xacro

def generate_launch_description():

# Constants for paths to different files and folders

package_name_gazebo = 'mycobot_gazebo'

package_name_moveit_config = 'mycobot_moveit_config_manual_setup'

# Set the path to different files and folders

pkg_share_gazebo = FindPackageShare(package=package_name_gazebo).find(package_name_gazebo)

pkg_share_moveit_config = FindPackageShare(package=package_name_moveit_config).find(package_name_moveit_config)

# Paths for various configuration files

urdf_file_path = 'urdf/ros2_control/gazebo/mycobot_280.urdf.xacro'

srdf_file_path = 'config/mycobot_280.srdf'

moveit_controllers_file_path = 'config/moveit_controllers.yaml'

joint_limits_file_path = 'config/joint_limits.yaml'

kinematics_file_path = 'config/kinematics.yaml'

pilz_cartesian_limits_file_path = 'config/pilz_cartesian_limits.yaml'

initial_positions_file_path = 'config/initial_positions.yaml'

rviz_config_file_path = 'rviz/move_group.rviz'

# Set the full paths

urdf_model_path = os.path.join(pkg_share_gazebo, urdf_file_path)

srdf_model_path = os.path.join(pkg_share_moveit_config, srdf_file_path)

moveit_controllers_file_path = os.path.join(pkg_share_moveit_config, moveit_controllers_file_path)

joint_limits_file_path = os.path.join(pkg_share_moveit_config, joint_limits_file_path)

kinematics_file_path = os.path.join(pkg_share_moveit_config, kinematics_file_path)

pilz_cartesian_limits_file_path = os.path.join(pkg_share_moveit_config, pilz_cartesian_limits_file_path)

initial_positions_file_path = os.path.join(pkg_share_moveit_config, initial_positions_file_path)

rviz_config_file = os.path.join(pkg_share_moveit_config, rviz_config_file_path)

# Launch configuration variables

use_sim_time = LaunchConfiguration('use_sim_time')

use_rviz = LaunchConfiguration('use_rviz')

# Declare the launch arguments

declare_use_sim_time_cmd = DeclareLaunchArgument(

name='use_sim_time',

default_value='true',

description='Use simulation (Gazebo) clock if true')

declare_use_rviz_cmd = DeclareLaunchArgument(

name='use_rviz',

default_value='true',

description='Whether to start RViz')

# Load the robot configuration

# Typically, you would also have this line in here: .robot_description(file_path=urdf_model_path)

# Another launch file is launching the robot description.

moveit_config = (

MoveItConfigsBuilder("mycobot_280", package_name=package_name_moveit_config)

.trajectory_execution(file_path=moveit_controllers_file_path)

.robot_description_semantic(file_path=srdf_model_path)

.joint_limits(file_path=joint_limits_file_path)

.robot_description_kinematics(file_path=kinematics_file_path)

.planning_pipelines(

pipelines=["ompl", "pilz_industrial_motion_planner", "stomp"],

default_planning_pipeline="pilz_industrial_motion_planner"

)

.planning_scene_monitor(

publish_robot_description=False,

publish_robot_description_semantic=True,

publish_planning_scene=True,

)

.pilz_cartesian_limits(file_path=pilz_cartesian_limits_file_path)

.to_moveit_configs()

)

# Start the actual move_group node/action server

start_move_group_node_cmd = Node(

package="moveit_ros_move_group",

executable="move_group",

output="screen",

parameters=[

moveit_config.to_dict(),

{'use_sim_time': use_sim_time},

{'start_state': {'content': initial_positions_file_path}},

],

)

# RViz

start_rviz_node_cmd = Node(

condition=IfCondition(use_rviz),

package="rviz2",

executable="rviz2",

arguments=["-d", rviz_config_file],

output="screen",

parameters=[

moveit_config.robot_description,

moveit_config.robot_description_semantic,

moveit_config.planning_pipelines,

moveit_config.robot_description_kinematics,

moveit_config.joint_limits,

{'use_sim_time': use_sim_time}

],

)

exit_event_handler = RegisterEventHandler(

condition=IfCondition(use_rviz),

event_handler=OnProcessExit(

target_action=start_rviz_node_cmd,

on_exit=EmitEvent(event=Shutdown(reason='rviz exited')),

),

)

# Create the launch description and populate

ld = LaunchDescription()

# Declare the launch options

ld.add_action(declare_use_sim_time_cmd)

ld.add_action(declare_use_rviz_cmd)

# Add any actions

ld.add_action(start_move_group_node_cmd)

ld.add_action(start_rviz_node_cmd)

# Clean shutdown of RViz

ld.add_action(exit_event_handler)

return ld

Save the file, and close it.

This launch file launches MoveIt and RViz.

Looking at the source code for the moveit_configs_builder helped me to complete this launch file.

Create a Setup Assistant File

The .setup_assistant file is typically created automatically when you use the MoveIt Setup Assistant to configure your robot.

Create a new file named .setup_assistant in the root directory of your MoveIt configuration package (e.g., mycobot_moveit_config_manual_setup).

cd ~/ros2_ws/src/mycobot_ros2/mycobot_moveit_config_manual_setup/gedit .setup_assistantAdd this code:

moveit_setup_assistant_config:

URDF:

package: mycobot_gazebo

relative_path: urdf/ros2_control/gazebo/mycobot_280.urdf.xacro

xacro_args: ""

SRDF:

relative_path: config/mycobot_280.srdf

CONFIG:

author_name: AutomaticAddison

author_email: addison@todo.com

generated_timestamp: 1690848000Save the file, and close it.

Set file permissions.

sudo chmod 644 .setup_assistantEdit package.xml

Let’s edit the package.xml file with the dependencies.

cd ~/ros2_ws/src/mycobot_ros2/mycobot_moveit_config_manual_setupgedit package.xmlAdd this code.

Save the file, and close it.

Edit CMakeLists.txt

Update CMakeLists.txt with the new folders we have created.

cd ~/ros2_ws/src/mycobot_ros2/mycobot_moveit_config_manual_setupgedit CMakeLists.txtAdd this code.

cmake_minimum_required(VERSION 3.8)

project(mycobot_moveit_config_manual_setup)

if(CMAKE_COMPILER_IS_GNUCXX OR CMAKE_CXX_COMPILER_ID MATCHES "Clang")

add_compile_options(-Wall -Wextra -Wpedantic)

endif()

# find dependencies

find_package(ament_cmake REQUIRED)

find_package(moveit_ros_planning_interface REQUIRED)

find_package(moveit_ros_move_group REQUIRED)

find_package(moveit_kinematics REQUIRED)

find_package(moveit_planners_ompl REQUIRED)

find_package(moveit_ros_visualization REQUIRED)

find_package(rviz2 REQUIRED)

find_package(rviz_visual_tools REQUIRED)

# Copy necessary files to designated locations in the project

install (

DIRECTORY config launch rviz scripts

DESTINATION share/${PROJECT_NAME}

)

# Install the .setup_assistant file

install(

FILES .setup_assistant

DESTINATION share/${PROJECT_NAME}

)

if(BUILD_TESTING)

find_package(ament_lint_auto REQUIRED)

# the following line skips the linter which checks for copyrights

# comment the line when a copyright and license is added to all source files

set(ament_cmake_copyright_FOUND TRUE)

# the following line skips cpplint (only works in a git repo)

# comment the line when this package is in a git repo and when

# a copyright and license is added to all source files

set(ament_cmake_cpplint_FOUND TRUE)

ament_lint_auto_find_test_dependencies()

endif()

ament_package()Save the file, and close it.

Build the Package

Now let’s build the package.

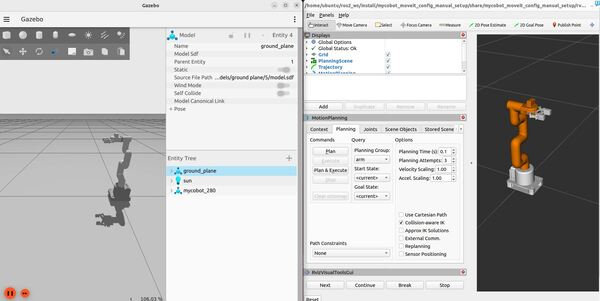

cd ~/ros2_ws/colcon buildsource ~/.bashrcLaunch the Demo and Configure the Plugin

Begin by launching the robot in Gazebo along with all the controllers (i.e. arm_controller, grip_controller). Since we are going to launch RViz using the MoveIt launch file, we can set the use_rviz flag to False.

ros2 launch mycobot_gazebo mycobot_280_arduino_bringup_ros2_control_gazebo.launch.py use_rviz:=falseThis will:

- Start Gazebo

- Spawn the robot

- Start the robot_state_publisher

- Load and start the necessary controllers (joint_state_broadcaster, arm_controller, grip_controller)

If you look in the logs, you will see the joint_state_broadcaster, arm_controller, and grip_controller are loaded and activated.

Launch MoveIt and RViz.

ros2 launch mycobot_moveit_config_manual_setup move_group.launch.py This will:

- Start the move_group node

- Launch RViz with MoveIt configuration

- Use the simulation time from Gazebo

Rather than launching two launch files one after the other, you can put them both into a bash script like this (call the file some name like “robot.sh”):

#!/bin/bash

# Single script to launch the mycobot with Gazebo, RViz, and MoveIt 2

cleanup() {

echo "Restarting ROS 2 daemon to cleanup before shutting down all processes..."

ros2 daemon stop

sleep 1

ros2 daemon start

echo "Terminating all ROS 2-related processes..."

kill 0

exit

}

trap 'cleanup' SIGINT;

ros2 launch mycobot_gazebo mycobot_280_arduino_bringup_ros2_control_gazebo.launch.py use_rviz:=false &

sleep 10

ros2 launch mycobot_moveit_config_manual_setup move_group.launch.py

Be sure to set permissions to this bash script before running it.

sudo chmod +x robot.shThen run this command to launch everything at once.

bash robot.shHere is what your screen should look like when everything is loaded properly. You will notice that on the right-hand side of Gazebo I closed the two panels. You can close them by right-clicking and hitting “Close”.

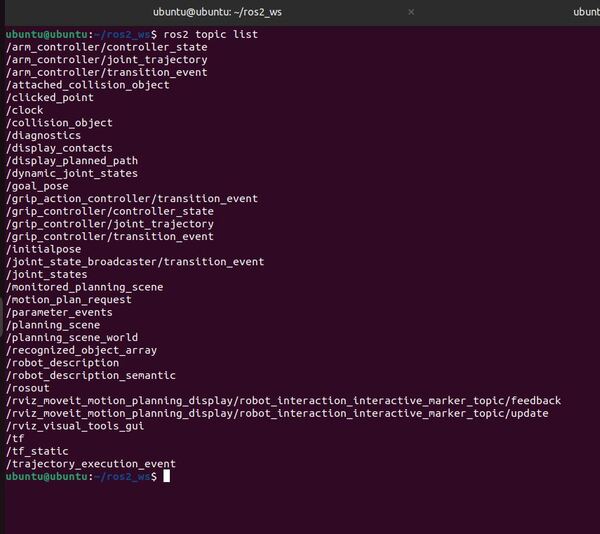

Let’s check out the active topics:

ros2 topic list

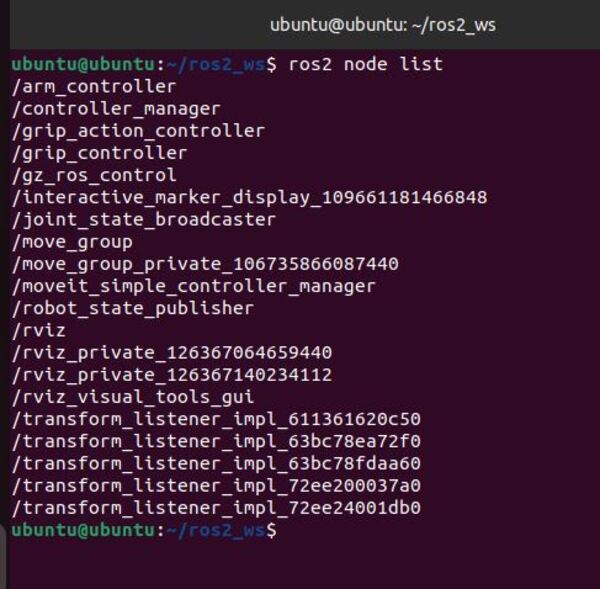

The active nodes are:

ros2 node list

Here are our available action servers:

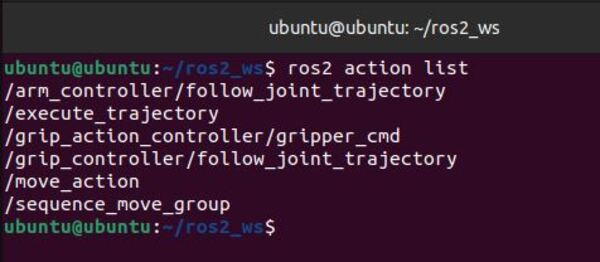

ros2 action list

Here are our services. You will see we have a lot of services. This page at the ROS 2 tutorials shows you how we can call services from the command line.

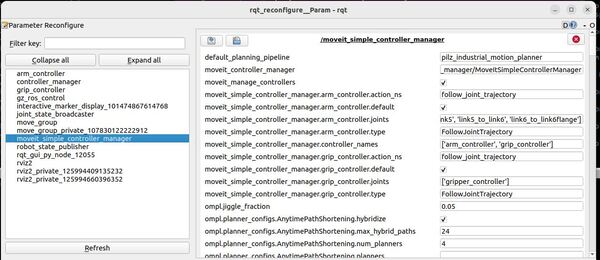

ros2 service listLet’s check out the parameters for the different nodes my launching a GUI tool called rqt_reconfigure:

sudo apt install ros-$ROS_DISTRO-rqt-reconfigurerqt --standalone rqt_reconfigure

Click “Refresh”.

Click on any of the nodes to see the parameters for that node. For example, let’s take a look at the moveit_simple_controller_manager node.

This tool enables you to see if the parameters you set in the config file of your MoveIt package were set correctly.

Explore MoveIt in RViz

We will now roughly follow this official MoveIt Quickstart in RViz tutorial which walks you through the main features provided by the MoveIt RViz plugins.

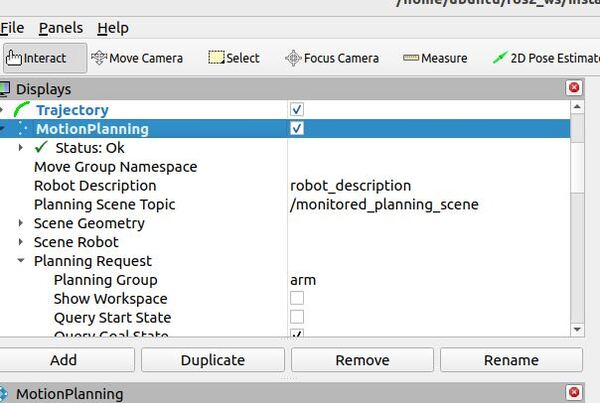

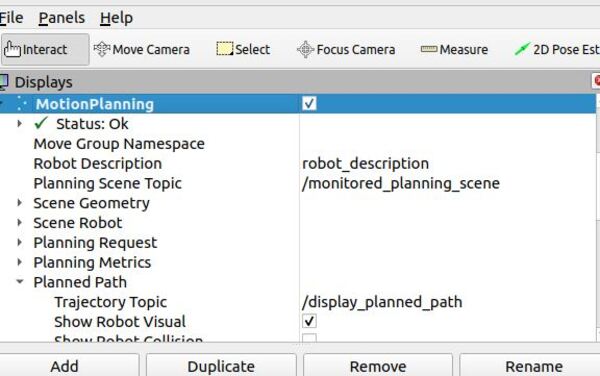

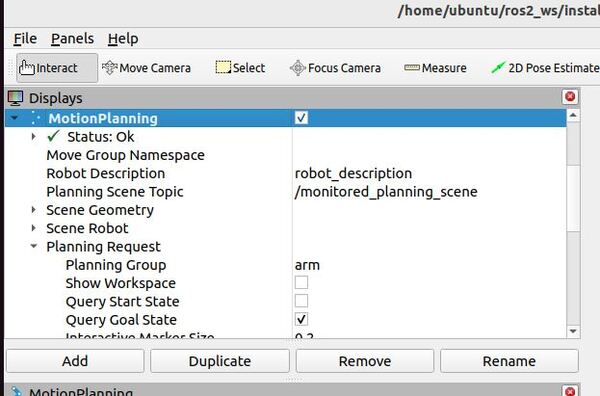

First, click on the Displays panel in the upper left.

Under Global Options, make sure the Fixed Frame is set to “base_link”

Scroll down to the MotionPlanning plugin.

Make sure the Robot Description field is set to robot_description.

Make sure the Planning Scene Topic field is set to /monitored_planning_scene.

Make sure the Trajectory Topic under Planned Path is set to /display_planned_path.

In the Planning Request section, make sure the Planning Group is set to arm. You can also see this in the MotionPlanning panel in the bottom left.

Play With the Robotic Arm

Rviz has four different visualizations for MoveIt. These visualizations are overlapping.

- The robot’s configuration in the /monitored_planning_scene planning environment:

- Planning Scene -> Scene Robot -> Show Robot Visual checkbox

- The planned path for the robot:

- Motion Planning -> Planned Path -> Show Robot Visual checkbox

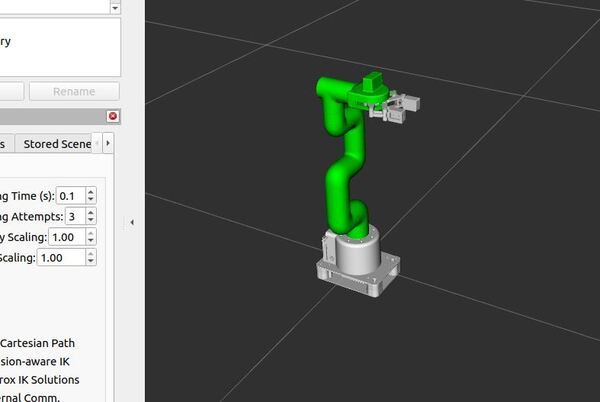

- Green: The start state for motion planning:

- MotionPlanning -> Planning Request -> Query Start State checkbox

- Orange: The goal state for motion planning:

- MotionPlanning -> Planning Request -> Query Goal State checkbox

You can toggle each of these visualizations on and off using the checkboxes in the Displays panel.

Interact With the Robotic Arm

Now let’s configure our RViz so that all we have are the scene robot, the starting state, and the goal state.

- Check the Motion Planning -> Planned Path -> Show Robot Visual checkbox

- Uncheck the Planning Scene -> Scene Robot -> Show Robot Visual checkbox

- Uncheck the MotionPlanning -> Planning Request -> Query Start State checkbox

- Check the MotionPlanning -> Planning Request -> Query Goal State checkbox

The orange colored robot represents the desired Goal State.

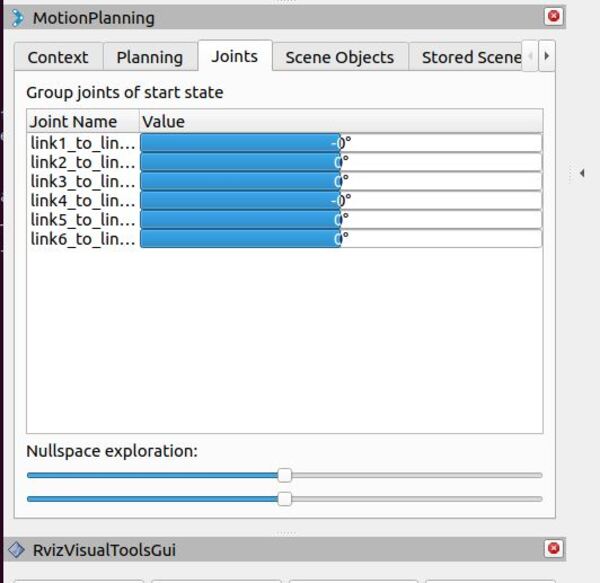

Find the “Joints” tab in the bottom MotionPlanning panel. This lets you control individual joints of the robot.

Try moving the sliders for the joints, and watch how the robot arm changes its pose.

Uncheck the MotionPlanning -> Planning Request -> Query Goal State checkbox.

Check the MotionPlanning -> Planning Request -> Query Start State checkbox.

The green colored robot is used to set the Start State for motion planning.

Use Motion Planning

Now it’s time to plan a movement for your robotic arm using RViz and MoveIt 2.

In the Displays panel in RViz, do the following…

Uncheck the MotionPlanning -> Planning Request -> Query Goal State checkbox

Check the MotionPlanning -> Planning Request -> Query Start State checkbox.

You can see if any links are in collision by going to the MotionPlanning window at the bottom and moving to the “Status” tab.

Move the green (Start State) to where you want the movement to begin.

Rather than using the interactive marker, I find it easier to go to the “Joints” tab in the Motion Planning window at the bottom. This lets you control individual joints of the robot to get your desired Start State.

Uncheck the MotionPlanning -> Planning Request -> Query Start State checkbox.

Check the MotionPlanning -> Planning Request -> Query Goal State checkbox.

Move the orange (Goal State) to where you want the movement to end.

Check the MotionPlanning -> Planning Request -> Query Start State checkbox.

If you have a strong computer, you can check the MotionPlanning -> Planned Path -> Show Trail checkbox. This will show you a trail of the planned path. This step is optional.

Now let’s plan a motion. Look for the “MotionPlanning” window at the bottom-left part of the screen.

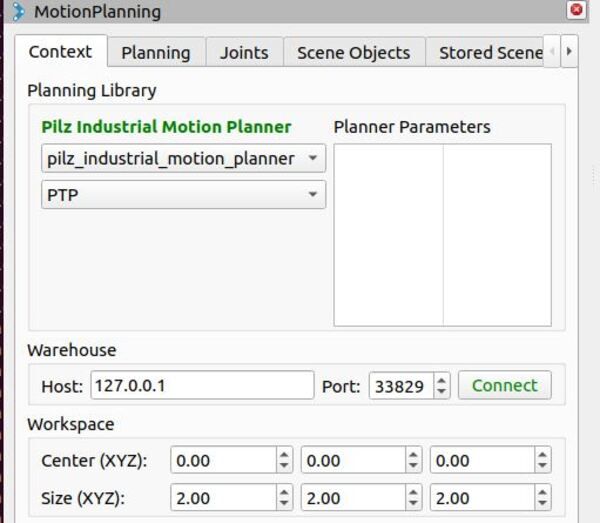

Click the Context tab.

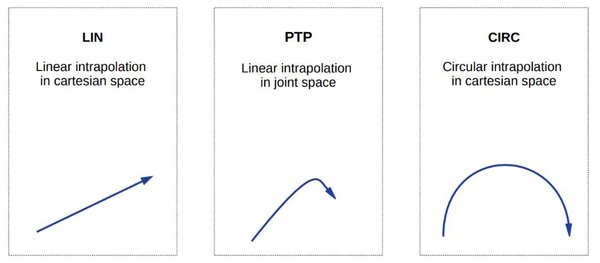

- Select CIRC (circular motion) if you want the end-effector to move in a circular arc.

- Select LIN (linear motion) if you want the end effector to move in a straight line between the start and goal positions

- Select PTP (point-to-point motion) if you want the quickest joint movement between start and goal configurations.

I will click PTP.

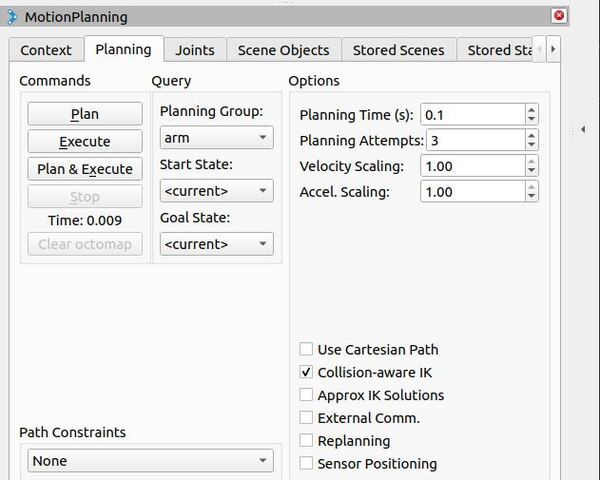

Now click the Planning tab.

Click the Plan button to generate a motion plan between the start and goal state.

The system will calculate a path, and you should see a preview of the planned motion in the 3D visualization window.

Review the planned path carefully to ensure it’s appropriate and collision-free.

If you’re not satisfied with the plan, you can adjust the start and goal states in the 3D view and plan again.

Once you’re happy with the plan, click the Plan & Execute button to make the robot carry out the planned motion.

You should see your simulated robotic arm moving in both Gazebo and RViz.

If you want to speed things up, you can tweak the Velocity Scaling figures on the Planning tab. You can make them all 1.0 for example.

Then click Plan & Execute again.

Keep setting new Goal States and moving your arm to different poses.

Be careful not to move your robot into a Goal State where the robot appears red. Red links on your robot mean the robot is colliding with itself.

If you want to open and close the gripper. The steps are the same.

Go to the MotionPlanning window at the bottom-left.

Go to the Planning tab.

Change the Planning Group to gripper.

Go to the Context tab.

Select PTP under the pilz_industrial_motion_planner.

Click the Planning tab again.

Select closed as the Goal State. Remember this is one of the states we configured in the SRDF file.

Click Plan & Execute. No need to click Plan first and then Execute since this is a simple motion.

You should see the gripper close in Gazebo.

You can also make motion plans for the combined arm and gripper group. Have fun with it, and play around to get a feel for MoveIt2 in Rviz.

Don’t forget that every time you change a planning group, you need to go to the Context tab and select PTP.

You can try other planners other than Pilz if you like.

I have set up OMPL and STOMP as some alternative motion planning libraries. Just use the dropdown menu on the Context tab.

The two parameters files that are required to use OMPL (ompl_planning.yaml) and STOMP (stomp_planning.yaml) are in the mycobot_moveit_config_manual_setup/config folder.

A good planner to use if you are in doubt of what to use is the ompl/RRTConnectkConfigDefault planner. It works well for a wide variety of use cases.

Introspecting Trajectory Waypoints

Understanding how your robot moves in detail is important for ensuring smooth and safe operations. Let’s explore a tool that allows us to do just that:

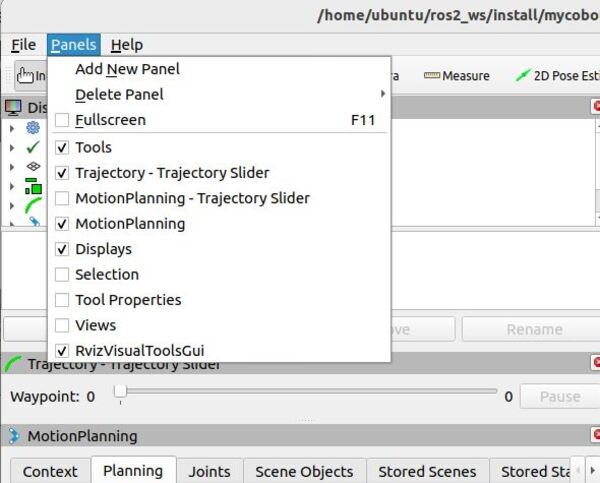

Add a new tool to RViz.

- Click on the “Panels” menu

- Choose “Trajectory – Trajectory Slider”

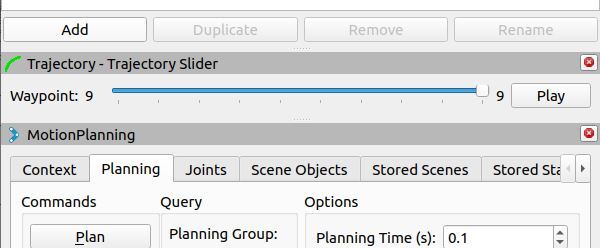

- You’ll see a new slider appear in RViz

Plan a movement:

- Set a new goal pose for the robotic arm.

- Click the “Plan” button

Use the new Trajectory Slider:

- Move the slider to see each step of the robot’s planned movement

- Press the “Play” button to watch the whole movement smoothly

Remember: If you change where you want the robot to go, always click “Plan” again before using the slider or “Play” button. This makes sure you’re looking at the newest plan, not an old one.

This tool helps you understand exactly how your robot will move from start to finish.

Plan Cartesian Motions

Sometimes, you want your robot to move in a straight line. Here’s how to do that.

Go to the MotionPlanning panel at the bottom-right of the screen.

Click the Planning tab.

Click the Use Cartesian Path checkbox.

What does this do?

- When checked, the robot will try to move its end-effector in a straight line.

- This is useful for tasks that need precise, direct movements, like drawing a straight line or moving directly towards an object.

Why is this important?

- Normal planning might move the robot’s arm in a curved or complex path.

- Cartesian planning ensures a more predictable, straight-line movement.

Try planning some movements with this option on and off to see the difference in how the robot moves.

That’s it! Keep building!