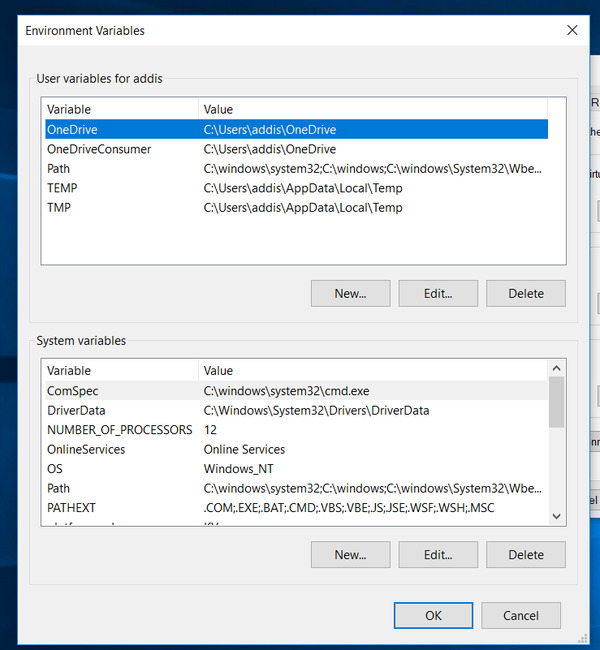

In this post, I will explain the difference between generative classifiers and discriminative classifiers.

Suppose we have a class H (the hypothesis) that we want to predict and a set of attributes E (the evidence). The goal of classification is to learn, from training examples in which E is paired with a known H, a model that can predict the class H for a new, unseen set of attributes E. Generative and discriminative classifiers go about this process differently.

Generative Classifiers

Classification algorithms such as Naïve Bayes are known as generative classifiers. Generative classifiers take in training data and create probability estimates. Specifically, they estimate the following:

- P(H): The probability of the hypothesis (e.g. spam or not spam). This value is the class prior probability (e.g. probability an e-mail is spam before taking any evidence into account).

- P(E|H): The probability of the evidence given the hypothesis (e.g. probability an e-mail contains the phrase “Buy Now” given that an e-mail is spam). This value is known as the likelihood.

Once these probability estimates have been computed, the model uses Bayes' rule, P(H|E) = P(E|H) * P(H) / P(E), to make predictions. Because P(E) is the same for every class, the most likely class is simply the one that maximizes the expression P(E|H) * P(H).
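To make the two estimates concrete, here is a minimal sketch of a Naïve Bayes spam filter written from scratch. The training e-mails, the phrase vocabulary, and the function names (`fit`, `score`, `predict`) are made up for illustration, and add-one smoothing is my own assumption to avoid zero probabilities; this is not how any particular library implements it.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: (set of phrases the e-mail contains, class label).
TRAIN = [
    ({"buy now", "free"}, "spam"),
    ({"buy now"}, "spam"),
    ({"free", "meeting"}, "spam"),
    ({"meeting"}, "ham"),
    ({"meeting", "report"}, "ham"),
    ({"report"}, "ham"),
    ({"free", "report"}, "ham"),
]
VOCAB = sorted({phrase for phrases, _ in TRAIN for phrase in phrases})


def fit(train):
    """Estimate P(H) and P(E|H) by counting, with add-one smoothing."""
    class_counts = Counter(label for _, label in train)
    prior = {h: n / len(train) for h, n in class_counts.items()}
    phrase_counts = defaultdict(Counter)
    for phrases, label in train:
        phrase_counts[label].update(phrases)
    # P(phrase present | H); the +1 / +2 terms are the smoothing assumption.
    likelihood = {
        h: {p: (phrase_counts[h][p] + 1) / (class_counts[h] + 2) for p in VOCAB}
        for h in class_counts
    }
    return prior, likelihood


def score(phrases, h, prior, likelihood):
    """Compute P(E|H) * P(H), treating phrases as independent given H."""
    s = prior[h]
    for p in VOCAB:
        p_present = likelihood[h][p]
        s *= p_present if p in phrases else 1.0 - p_present
    return s


def predict(phrases, prior, likelihood):
    """Return the class H that maximizes P(E|H) * P(H)."""
    return max(prior, key=lambda h: score(phrases, h, prior, likelihood))


prior, likelihood = fit(TRAIN)
print(predict({"buy now", "free"}, prior, likelihood))
print(predict({"meeting", "report"}, prior, likelihood))
```

Note that `predict` never computes P(E): dividing every class score by the same constant would not change which class wins.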

Discriminative Classifiers

Rather than estimate likelihoods, discriminative classifiers like Logistic Regression estimate P(H|E) directly. Training produces a decision boundary: a line or plane that separates instances of one class from instances of another. New, unseen instances are classified according to which side of the boundary they fall on. In this way, the model learns a direct mapping from attributes E to class labels H.
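As a rough illustration of estimating P(H|E) directly, the sketch below fits a two-attribute logistic regression with plain batch gradient descent. The data points, learning rate, and epoch count are assumptions chosen for the example, and real libraries use more sophisticated solvers; the point is only that no P(H) or P(E|H) is ever estimated.

```python
import math

# Hypothetical toy data: two numeric attributes E per instance, class H is 0 or 1.
X = [(1.0, 1.2), (1.5, 0.8), (2.0, 1.5), (4.0, 4.2), (4.5, 3.8), (5.0, 4.5)]
Y = [0, 0, 0, 1, 1, 1]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def prob_h1(e, w, b):
    """The model's estimate of P(H=1 | E=e)."""
    return sigmoid(w[0] * e[0] + w[1] * e[1] + b)


def fit(X, Y, lr=0.1, epochs=2000):
    """Fit weights by batch gradient descent on the average log loss."""
    w, b = [0.0, 0.0], 0.0
    n = len(X)
    for _ in range(epochs):
        gw0 = gw1 = gb = 0.0
        for e, h in zip(X, Y):
            err = prob_h1(e, w, b) - h  # gradient of the log loss w.r.t. the logit
            gw0 += err * e[0]
            gw1 += err * e[1]
            gb += err
        w[0] -= lr * gw0 / n
        w[1] -= lr * gw1 / n
        b -= lr * gb / n
    return w, b


def predict(e, w, b):
    """Classify by which side of the boundary P(H=1|E) = 0.5 the point is on."""
    return 1 if prob_h1(e, w, b) >= 0.5 else 0


w, b = fit(X, Y)
print(predict((0.5, 0.5), w, b), predict((6.0, 6.0), w, b))
```

The learned boundary is the straight line where `w[0] * e[0] + w[1] * e[1] + b` equals zero; everything the model knows is encoded in `w` and `b`.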

An Example Using an Analogy

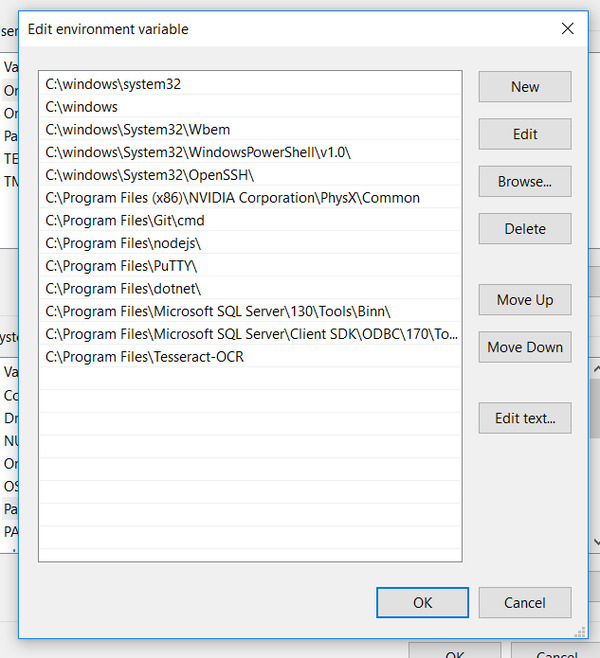

Here is an analogy that demonstrates the difference between generative and discriminative classifiers. Suppose we live in a world in which there are only two classes of animals, cats and rabbits. We want to build a robot that can automatically classify a new animal as either a cat or a rabbit. How would we train this robot using a discriminative algorithm like Logistic Regression?

With a discriminative algorithm, we would feed the model a set of training data containing instances of cats and instances of rabbits. The algorithm would try to find a line or plane (a decision boundary) that separates instances of cats from instances of rabbits. This boundary would be found by examining the differences in the attributes (e.g. herbivore vs. carnivore, long oval ears vs. small triangular ears, hopping vs. walking).

Once the training step is complete, the discriminative algorithm is ready to classify new, unseen animals. For each new animal, it checks which side of the decision boundary the animal falls on and classifies it accordingly.

In contrast, a generative learning algorithm like Naïve Bayes will take in training data and develop a model of what a cat and rabbit should look like. Once trained, a new, unseen animal is compared to the model of a cat and the model of a rabbit. It is then classified based on whether it looks more like the cat instances the model was trained on or the rabbit instances the model was trained on.

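Past research comparing Logistic Regression with Naïve Bayes found that the discriminative classifier reaches a lower error once enough training data is available, while the generative classifier approaches its own (higher) asymptotic error faster and can therefore do better when training data is scarce (Ng & Jordan, 2001).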

As a final note, generative classifiers are called generative because we can use the probability estimates they learn to generate more instances. In other words, given a class H, you can sample a plausible set of attributes E from P(E|H).
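Here is a small sketch of what "generating" means in the cat and rabbit world. The probabilities in `LIKELIHOOD` are invented for illustration rather than estimated from data, the attribute names are my own, and attributes are sampled independently given the class, which is the Naïve Bayes assumption.

```python
import random

# Hypothetical P(attribute is present | H); values are made up for illustration.
LIKELIHOOD = {
    "cat": {"long ears": 0.05, "hops": 0.02, "eats meat": 0.95},
    "rabbit": {"long ears": 0.90, "hops": 0.95, "eats meat": 0.01},
}


def generate(h, rng):
    """Sample one set of attributes E from P(E|H), one attribute at a time."""
    return {attr: rng.random() < p for attr, p in LIKELIHOOD[h].items()}


rng = random.Random(0)  # fixed seed so repeated runs draw the same samples
for _ in range(3):
    print(generate("rabbit", rng))
```

A model that only estimates P(H|E) has no distribution over E to sample from, which is why this step is specific to generative classifiers.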

References

Ng, A. Y., & Jordan, M. I. (2001). On Discriminative vs. Generative Classifiers: A Comparison of Logistic Regression and Naive Bayes. NIPS’01: Proceedings of the 14th International Conference on Neural Information Processing Systems: Natural and Synthetic, 841-848.