In this tutorial, we will explore SLAM (Simultaneous Localization and Mapping), a fundamental concept in robotics. SLAM allows a robot to create a map of its environment while simultaneously keeping track of its own location within that map. It’s like giving a robot the ability to explore an unknown area, remember where it has been, and use that information to navigate effectively. SLAM combines data from the robot’s sensors, such as cameras, LIDAR, and odometry, to incrementally build a consistent map and estimate the robot’s trajectory.

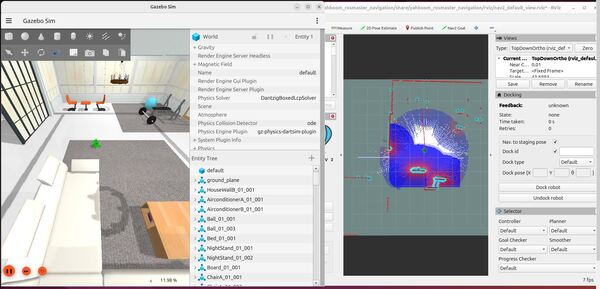

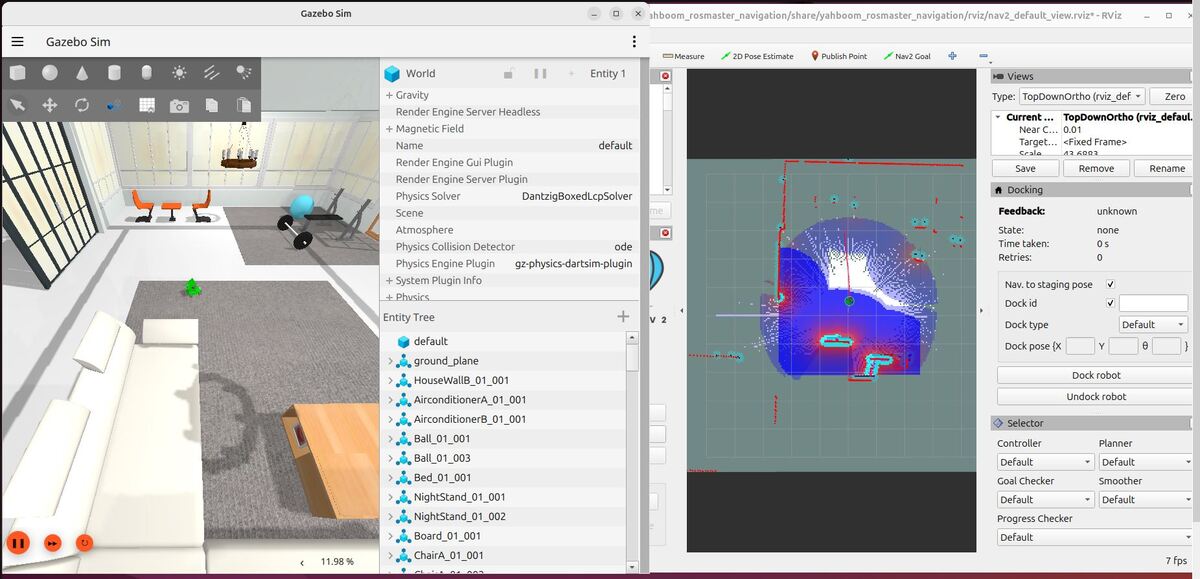

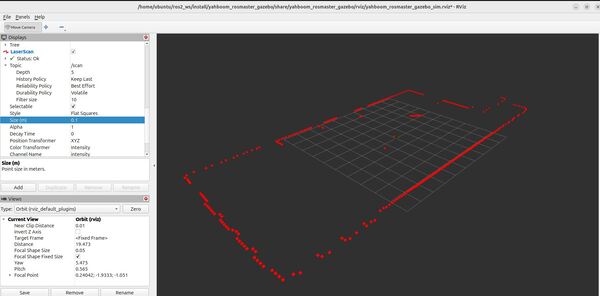

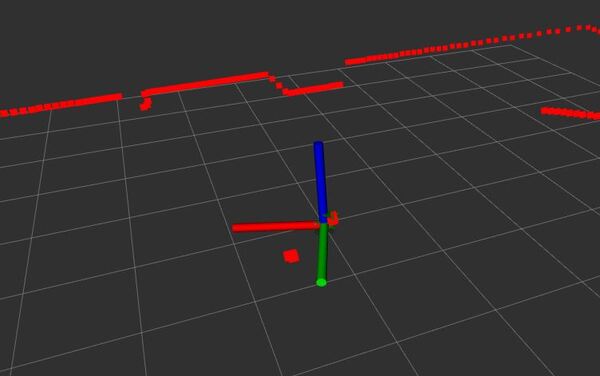

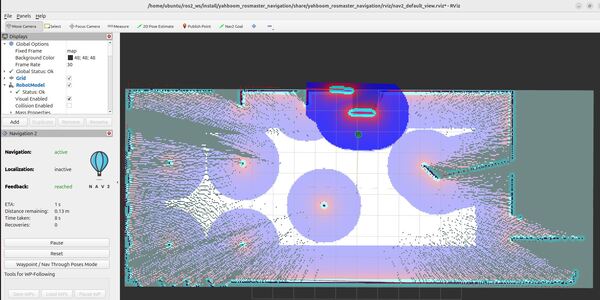

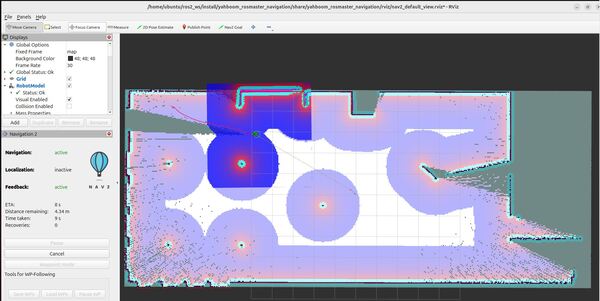

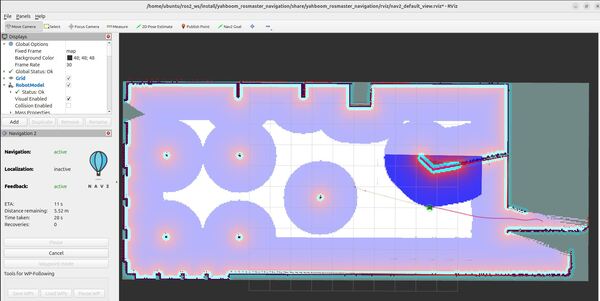

By the end of this tutorial, you will be able to build this:

Real-World Applications

Real-world applications of what you will learn in this tutorial include:

- Autonomous navigation for robots in various environments, such as homes, offices, or outdoor settings

- Mapping and exploration of unknown or dynamic environments

- Search and rescue operations where a robot needs to navigate and map an area

If you prefer learning by video, watch the video below, which covers this entire tutorial from start to finish.

Prerequisites

- You have completed this tutorial: How to Install ROS 2 Navigation (Nav2) – ROS 2 Jazzy.

- You have completed this tutorial: Sensor Fusion and Robot Localization Using ROS 2 Jazzy.

All my code for this project is located here on GitHub.

Create Folders to Store Maps and Configuration Files

Open a new terminal window, and type:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_navigation/Add two new folders.

mkdir -p config mapsCreate a Configuration File for Nav2

Create the File

Now let’s create a file that stores the values for parameters defined for Nav2 nodes. These parameters

control how the robot builds a map, localizes itself, and navigates.

cd configtouch rosmaster_x3_nav2_default_params.yamlAdd this code.

Save the file.

This file has a lot of values. Don’t be intimidated. Over time you will get to know the meaning of each of these parameters.

If you want a detailed understanding of these parameters, go to this page.

Let’s discuss the main nodes that are responsible for mapping, localization, and obstacle detection.

Understanding the slam_toolbox

The slam_toolbox package helps the robot build a map of its environment as it moves around. SLAM stands for Simultaneous Localization and Mapping. As the robot explores, it uses its sensors like the lidar to detect obstacles. It localizes itself relative to those obstacles to figure out where it is on the map. At the same time, it adds the obstacle information to the map to build a complete picture of the environment.

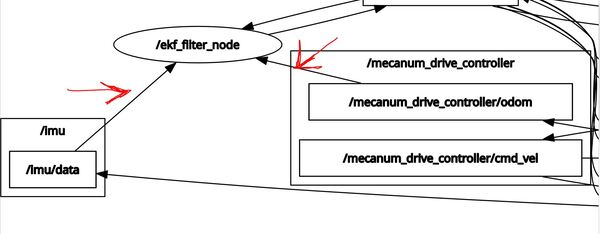

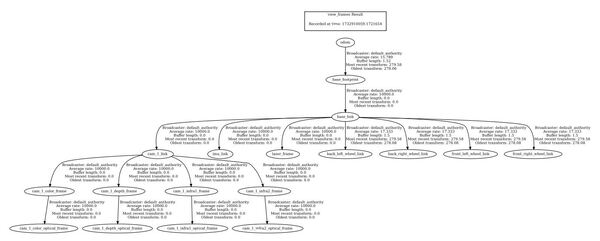

The slam_toolbox is responsible for publishing the coordinate transformation between the map frame and the odometry (odom) frame. This transformation allows the robot to understand its position and orientation within the map as it moves.

Understanding the amcl

The amcl (Adaptive Monte Carlo Localization) package helps the robot figure out where it is on a known map. This package is important once you have a complete map and want the robot to navigate autonomously.

amcl takes the map and LIDAR data as inputs. It then estimates the robot’s position and orientation by comparing the LIDAR scans to the expected readings at each possible position on the map. It uses a particle filter approach, maintaining a set of “particles” that represent guesses of the robot’s state.

Understanding the global_costmap

A costmap is like a special version of the map used for navigation. In the costmap, each cell has a specific value representing how difficult or dangerous it is to traverse. A value of 0 indicates completely free space, while 254 marks lethal obstacles. Special values like 253 mark inflated obstacle areas, and 255 indicates unknown space. Values between 1-252 represent increasing levels of navigation difficulty.

The navigation algorithms use these cost values to plan paths that steer the robot away from obstacles. You can picture it like hills on a landscape – the robot will naturally take the “valleys” that have low cost while avoiding the “peaks” of high-cost areas.

The global_costmap is used for long-term planning over the entire known map. It has several plugins or “layers” that track different kinds of data:

- The static_layer holds a copy of the map from the map server

- The obstacle_layer tracks obstacles detected by the robot’s sensors (typically LIDAR).

- The voxel_layer tracks 3D data if available (typically RGBD camera).

- The inflation_layer adds extra costs around obstacles to make the robot keep a safer distance

Understanding the local_costmap

The local_costmap is similar to the global one, but it’s used for short-term planning in a small area right around the robot.

The Difference Between the Global and the Local Costmap

The key differences between the global and local costmap are as follows:

- The global costmap covers the entire known environment, while the local costmap only covers a small area around the robot.

- The global costmap is used for big-picture planning of the best route given known obstacles (e.g. walls, sofas, etc.), while the local costmap is used for immediate decisions about avoiding both known (walls, sofas, etc.) and unknown obstacles (e.g. people walking through the environment).

Let’s use an analogy to make this clearer:

- Global Costmap: Imagine you are planning a road trip across the country. You use a large map to plot the best route from your starting point to your destination, considering major highways and cities. This is like the global costmap, helping you plan the overall journey.

- Local Costmap: Now, imagine you are driving through your neighborhood. You pay close attention to the streets and obstacles immediately around you, like parked cars or pedestrians, and make quick decisions to navigate through them. This is like the local costmap, helping you with immediate navigation.

So bottom line…the global costmap handles long-term path planning over a wide area, while the local costmap deals with real-time, short-term obstacle avoidance around the robot. By working together, these costmaps enable robots to navigate their environments efficiently and safely.

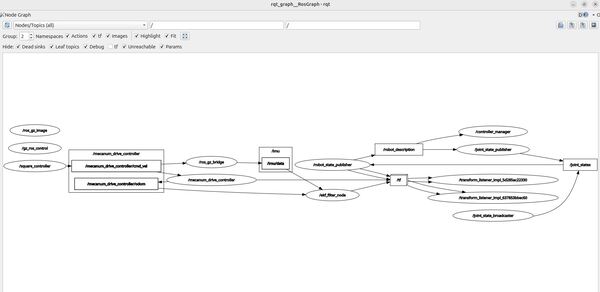

Create a Velocity Relay Node

We need to create a file that subscribes to the /cmd_vel topic (geometry_msgs/msg/Twist) and publishes to the /mecanum_drive_controller/cmd_vel ( geometry_msgs/msg/TwistStamped) topic.

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_navigation/srctouch cmd_vel_relay_node.cppAdd this code:

/**

* @file cmd_vel_relay.cpp

* @brief Relay node that converts Twist messages to TwistStamped messages

*

* This program subscribes to velocity commands published as Twist messages and

* republishes them as TwistStamped messages. This is useful when interfacing

* between different ROS2 nodes that expect different message types for velocity

* commands.

*

* Subscription Topics:

* /cmd_vel (geometry_msgs/Twist): Raw velocity commands

*

* Publishing Topics:

* /mecanum_drive_controller/cmd_vel (geometry_msgs/TwistStamped):

* Timestamped velocity commands

*

* @author Addison Sears-Collins

* @date November 30, 2024

*/

// Include necessary header files

#include <memory> // For smart pointers

#include "rclcpp/rclcpp.hpp" // Main ROS2 C++ library

#include "geometry_msgs/msg/twist.hpp" // For Twist messages

#include "geometry_msgs/msg/twist_stamped.hpp" // For TwistStamped messages

/**

* @brief A ROS2 node that relays velocity commands between different message types

*

* This class creates a node that subscribes to Twist messages and republishes

* them as TwistStamped messages, adding a timestamp and frame ID.

*/

class CmdVelRelay : public rclcpp::Node {

public:

/**

* @brief Constructor for the CmdVelRelay node

*

* Initializes the node, creates a subscription to the input topic,

* and sets up a publisher for the output topic.

*/

CmdVelRelay() : Node("cmd_vel_relay") {

// Create subscription to /cmd_vel topic

// The '10' represents the queue size - how many messages to store if we can't process them fast enough

subscription_ = this->create_subscription<geometry_msgs::msg::Twist>(

"/cmd_vel", 10,

std::bind(&CmdVelRelay::cmd_vel_callback, this, std::placeholders::_1));

// Create publisher for /mecanum_drive_controller/cmd_vel topic

// Again, '10' is the queue size for outgoing messages

publisher_ = this->create_publisher<geometry_msgs::msg::TwistStamped>(

"/mecanum_drive_controller/cmd_vel", 10);

// Log a message indicating the node has started

RCLCPP_INFO(this->get_logger(), "Velocity relay node started");

}

private:

/**

* @brief Callback function that handles incoming Twist messages

*

* This function is called whenever a new message arrives on the /cmd_vel topic.

* It creates a new TwistStamped message, copies the velocity data, adds

* a timestamp and frame ID, and publishes the result.

*

* @param msg Shared pointer to the incoming Twist message

*/

void cmd_vel_callback(const geometry_msgs::msg::Twist::SharedPtr msg) {

// Create a new TwistStamped message

auto stamped_msg = std::make_unique<geometry_msgs::msg::TwistStamped>();

// Set the timestamp to current time

stamped_msg->header.stamp = this->now();

// Set the frame ID (coordinate frame this velocity is expressed in)

stamped_msg->header.frame_id = "base_link";

// Copy the twist message content (linear and angular velocities)

stamped_msg->twist = *msg;

// Publish the stamped message

// std::move is used for efficient transfer of the message

publisher_->publish(std::move(stamped_msg));

}

// Declare class member variables for the subscriber and publisher

rclcpp::Subscription<geometry_msgs::msg::Twist>::SharedPtr subscription_;

rclcpp::Publisher<geometry_msgs::msg::TwistStamped>::SharedPtr publisher_;

};

/**

* @brief Main function that initializes and runs the node

*

* @param argc Number of command line arguments

* @param argv Array of command line arguments

* @return int Exit status

*/

int main(int argc, char* argv[]) {

// Initialize ROS2

rclcpp::init(argc, argv);

// Create an instance of our node

auto node = std::make_shared<CmdVelRelay>();

// Spin the node, making it respond to incoming messages

rclcpp::spin(node);

// Clean up ROS2 resources

rclcpp::shutdown();

return 0;

}

Save the file, and close it.

cd ..Add these lines to CMakeLists.txt:

add_executable(cmd_vel_relay src/cmd_vel_relay_node.cpp)

ament_target_dependencies(cmd_vel_relay

rclcpp

geometry_msgs

)

install(TARGETS

cmd_vel_relay

DESTINATION lib/${PROJECT_NAME}

)

Add these lines to package.xml:

<depend>rclcpp</depend>

<depend>geometry_msgs</depend>

Edit the Launch File

To be able to map our environment, we need to edit our launch file.

Open up a new terminal window, and type:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_bringup/launchOpen rosmaster_x3_navigation.launch.py.

Add this code.

#!/usr/bin/env python3

"""

Launch Nav2 for the Yahboom ROSMASTER X3 robot in Gazebo.

This launch file sets up a complete ROS 2 navigation environment.

:author: Addison Sears-Collins

:date: November 30, 2024

"""

import os

from launch import LaunchDescription

from launch.actions import DeclareLaunchArgument, IncludeLaunchDescription

from launch.launch_description_sources import PythonLaunchDescriptionSource

from launch.substitutions import LaunchConfiguration

from launch_ros.actions import Node

from launch_ros.substitutions import FindPackageShare

def generate_launch_description():

"""

Generate a launch description.

Returns:

LaunchDescription: A complete launch description for the robot.

"""

# Constants for paths to different packages

package_name_gazebo = 'yahboom_rosmaster_gazebo'

package_name_localization = 'yahboom_rosmaster_localization'

package_name_navigation = 'yahboom_rosmaster_navigation'

# Launch and config file paths

gazebo_launch_file_path = 'launch/yahboom_rosmaster.gazebo.launch.py'

ekf_launch_file_path = 'launch/ekf_gazebo.launch.py'

ekf_config_file_path = 'config/ekf.yaml'

map_file_path = 'maps/cafe_world_map.yaml'

nav2_params_path = 'config/rosmaster_x3_nav2_default_params.yaml'

rviz_config_file_path = 'rviz/nav2_default_view.rviz'

# Set the path to different packages

pkg_share_gazebo = FindPackageShare(package=package_name_gazebo).find(package_name_gazebo)

pkg_share_localization = FindPackageShare(

package=package_name_localization).find(package_name_localization)

pkg_share_navigation = FindPackageShare(

package=package_name_navigation).find(package_name_navigation)

# Set default paths

default_gazebo_launch_path = os.path.join(pkg_share_gazebo, gazebo_launch_file_path)

default_ekf_launch_path = os.path.join(pkg_share_localization, ekf_launch_file_path)

default_ekf_config_path = os.path.join(pkg_share_localization, ekf_config_file_path)

default_rviz_config_path = os.path.join(pkg_share_navigation, rviz_config_file_path)

nav2_dir = FindPackageShare(package='nav2_bringup').find('nav2_bringup')

nav2_launch_dir = os.path.join(nav2_dir, 'launch')

nav2_params_path = os.path.join(pkg_share_navigation, nav2_params_path)

static_map_path = os.path.join(pkg_share_navigation, map_file_path)

# Launch configuration variables

# Config and launch files

autostart = LaunchConfiguration('autostart')

enable_odom_tf = LaunchConfiguration('enable_odom_tf')

ekf_config_file = LaunchConfiguration('ekf_config_file')

ekf_launch_file = LaunchConfiguration('ekf_launch_file')

gazebo_launch_file = LaunchConfiguration('gazebo_launch_file')

map_yaml_file = LaunchConfiguration('map')

namespace = LaunchConfiguration('namespace')

nav2_params_file = LaunchConfiguration('nav2_params_file')

rviz_config_file = LaunchConfiguration('rviz_config_file')

slam = LaunchConfiguration('slam')

use_composition = LaunchConfiguration('use_composition')

use_namespace = LaunchConfiguration('use_namespace')

use_respawn = LaunchConfiguration('use_respawn')

# Robot configuration

robot_name = LaunchConfiguration('robot_name')

world_file = LaunchConfiguration('world_file')

# Position and orientation

x = LaunchConfiguration('x')

y = LaunchConfiguration('y')

z = LaunchConfiguration('z')

roll = LaunchConfiguration('roll')

pitch = LaunchConfiguration('pitch')

yaw = LaunchConfiguration('yaw')

# Feature flags related to Gazebo and the robot description

headless = LaunchConfiguration('headless')

jsp_gui = LaunchConfiguration('jsp_gui')

load_controllers = LaunchConfiguration('load_controllers')

use_gazebo = LaunchConfiguration('use_gazebo')

use_robot_state_pub = LaunchConfiguration('use_robot_state_pub')

use_rviz = LaunchConfiguration('use_rviz')

use_sim_time = LaunchConfiguration('use_sim_time')

# Declare all launch arguments

# Config and launch files

declare_autostart_cmd = DeclareLaunchArgument(

name='autostart',

default_value='true',

description='Automatically startup the Nav2 stack')

declare_ekf_config_file_cmd = DeclareLaunchArgument(

name='ekf_config_file',

default_value=default_ekf_config_path,

description='Full path to the EKF configuration YAML file')

declare_ekf_launch_file_cmd = DeclareLaunchArgument(

name='ekf_launch_file',

default_value=default_ekf_launch_path,

description='Full path to the EKF launch file to use')

declare_enable_odom_tf_cmd = DeclareLaunchArgument(

name='enable_odom_tf',

default_value='true',

choices=['true', 'false'],

description='Whether to enable odometry transform broadcasting via ROS 2 Control')

declare_gazebo_launch_file_cmd = DeclareLaunchArgument(

name='gazebo_launch_file',

default_value=default_gazebo_launch_path,

description='Full path to the Gazebo launch file to use')

declare_map_yaml_cmd = DeclareLaunchArgument(

name='map',

default_value=static_map_path,

description='Full path to map file to load')

declare_namespace_cmd = DeclareLaunchArgument(

name='namespace',

default_value='',

description='Top-level namespace')

declare_nav2_params_file_cmd = DeclareLaunchArgument(

name='nav2_params_file',

default_value=nav2_params_path,

description='Full path to the ROS2 parameters file to use for navigation nodes')

declare_rviz_config_file_cmd = DeclareLaunchArgument(

name='rviz_config_file',

default_value=default_rviz_config_path,

description='Full path to the RVIZ config file to use')

# Robot configuration

declare_robot_name_cmd = DeclareLaunchArgument(

name='robot_name',

default_value='rosmaster_x3',

description='The name for the robot')

declare_slam_cmd = DeclareLaunchArgument(

name='slam',

default_value='False',

description='Whether to run SLAM')

declare_use_namespace_cmd = DeclareLaunchArgument(

name='use_namespace',

default_value='false',

description='Whether to apply a namespace to the navigation stack')

declare_world_cmd = DeclareLaunchArgument(

name='world_file',

default_value='cafe.world',

description='World file name (e.g., empty.world, house.world)')

# Position arguments

declare_x_cmd = DeclareLaunchArgument(

name='x',

default_value='0.0',

description='x component of initial position, meters')

declare_y_cmd = DeclareLaunchArgument(

name='y',

default_value='0.0',

description='y component of initial position, meters')

declare_z_cmd = DeclareLaunchArgument(

name='z',

default_value='0.20',

description='z component of initial position, meters')

# Orientation arguments

declare_roll_cmd = DeclareLaunchArgument(

name='roll',

default_value='0.0',

description='roll angle of initial orientation, radians')

declare_pitch_cmd = DeclareLaunchArgument(

name='pitch',

default_value='0.0',

description='pitch angle of initial orientation, radians')

declare_yaw_cmd = DeclareLaunchArgument(

name='yaw',

default_value='0.0',

description='yaw angle of initial orientation, radians')

# Feature flags

declare_headless_cmd = DeclareLaunchArgument(

name='headless',

default_value='False',

description='Whether to execute gzclient (visualization)')

declare_jsp_gui_cmd = DeclareLaunchArgument(

name='jsp_gui',

default_value='false',

description='Flag to enable joint_state_publisher_gui')

declare_load_controllers_cmd = DeclareLaunchArgument(

name='load_controllers',

default_value='true',

description='Flag to enable loading of ROS 2 controllers')

declare_use_composition_cmd = DeclareLaunchArgument(

name='use_composition',

default_value='True',

description='Whether to use composed bringup')

declare_use_respawn_cmd = DeclareLaunchArgument(

name='use_respawn',

default_value='False',

description='Whether to respawn if a node crashes. Applied when composition is disabled.')

declare_use_gazebo_cmd = DeclareLaunchArgument(

name='use_gazebo',

default_value='true',

description='Flag to enable Gazebo')

declare_use_robot_state_pub_cmd = DeclareLaunchArgument(

name='use_robot_state_pub',

default_value='true',

description='Flag to enable robot state publisher')

declare_use_rviz_cmd = DeclareLaunchArgument(

name='use_rviz',

default_value='true',

description='Flag to enable RViz')

declare_use_sim_time_cmd = DeclareLaunchArgument(

name='use_sim_time',

default_value='true',

description='Use simulation (Gazebo) clock if true')

# Specify the actions

# Start the node that relays /cmd_vel to /mecanum_drive_controller/cmd_vel

start_cmd_vel_relay_cmd = Node(

package='yahboom_rosmaster_navigation',

executable='cmd_vel_relay',

name='cmd_vel_relay',

output='screen',

parameters=[{'use_sim_time': use_sim_time}]

)

# Start Extended Kalman Filter node from the robot_localization ROS 2 package

start_ekf_cmd = IncludeLaunchDescription(

PythonLaunchDescriptionSource([ekf_launch_file]),

launch_arguments={

'ekf_config_file': ekf_config_file,

'use_sim_time': use_sim_time

}.items()

)

# Start Gazebo

start_gazebo_cmd = IncludeLaunchDescription(

PythonLaunchDescriptionSource([gazebo_launch_file]),

launch_arguments={

'enable_odom_tf': enable_odom_tf,

'headless': headless,

'jsp_gui': jsp_gui,

'load_controllers': load_controllers,

'robot_name': robot_name,

'rviz_config_file': rviz_config_file,

'use_rviz': use_rviz,

'use_gazebo': use_gazebo,

'use_robot_state_pub': use_robot_state_pub,

'use_sim_time': use_sim_time,

'world_file': world_file,

'x': x,

'y': y,

'z': z,

'roll': roll,

'pitch': pitch,

'yaw': yaw

}.items()

)

# Launch the ROS 2 Navigation Stack

start_ros2_navigation_cmd = IncludeLaunchDescription(

PythonLaunchDescriptionSource(os.path.join(nav2_launch_dir, 'bringup_launch.py')),

launch_arguments={

'namespace': namespace,

'use_namespace': use_namespace,

'slam': slam,

'map': map_yaml_file,

'use_sim_time': use_sim_time,

'params_file': nav2_params_file,

'autostart': autostart,

'use_composition': use_composition,

'use_respawn': use_respawn,

}.items()

)

# Create the launch description and populate

ld = LaunchDescription()

# Add all launch arguments

# Config and launch files

ld.add_action(declare_autostart_cmd)

ld.add_action(declare_enable_odom_tf_cmd)

ld.add_action(declare_ekf_config_file_cmd)

ld.add_action(declare_ekf_launch_file_cmd)

ld.add_action(declare_gazebo_launch_file_cmd)

ld.add_action(declare_map_yaml_cmd)

ld.add_action(declare_namespace_cmd)

ld.add_action(declare_nav2_params_file_cmd)

ld.add_action(declare_rviz_config_file_cmd)

ld.add_action(declare_slam_cmd)

ld.add_action(declare_use_composition_cmd)

ld.add_action(declare_use_namespace_cmd)

ld.add_action(declare_use_respawn_cmd)

# Robot configuration

ld.add_action(declare_robot_name_cmd)

ld.add_action(declare_world_cmd)

# Position declarations

ld.add_action(declare_x_cmd)

ld.add_action(declare_y_cmd)

ld.add_action(declare_z_cmd)

# Orientation declarations

ld.add_action(declare_roll_cmd)

ld.add_action(declare_pitch_cmd)

ld.add_action(declare_yaw_cmd)

# Feature flags

ld.add_action(declare_headless_cmd)

ld.add_action(declare_jsp_gui_cmd)

ld.add_action(declare_load_controllers_cmd)

ld.add_action(declare_use_gazebo_cmd)

ld.add_action(declare_use_robot_state_pub_cmd)

ld.add_action(declare_use_rviz_cmd)

ld.add_action(declare_use_sim_time_cmd)

# Add any actions

ld.add_action(start_cmd_vel_relay_cmd)

ld.add_action(start_ekf_cmd)

ld.add_action(start_gazebo_cmd)

ld.add_action(start_ros2_navigation_cmd)

return ld

Save the file, and close it.

Edit CMakeLists.txt

Edit the CMakeLists.txt file for the yahboom_rosmaster_navigation package.

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_navigation/Make sure to add these lines:

…

install(

DIRECTORY config maps rviz

DESTINATION share/${PROJECT_NAME}

)

if(BUILD_TESTING)

find_package(ament_lint_auto REQUIRED)

# the following line skips the linter which checks for copyrights

# comment the line when a copyright and license is added to all source files

set(ament_cmake_copyright_FOUND TRUE)

# the following line skips cpplint (only works in a git repo)

# comment the line when this package is in a git repo and when

# a copyright and license is added to all source files

set(ament_cmake_cpplint_FOUND TRUE)

ament_lint_auto_find_test_dependencies()

endif()

ament_package()

Save the file, and close it.

Edit the Launch Script

Open up a new terminal window, and type:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_bringup/scripts/Edit rosmaster_x3_navigation.sh so we can pass a boolean variable called slam to it.

#!/bin/bash

# Single script to launch the Yahboom ROSMASTERX3 with Gazebo, Nav2 and ROS 2 Controllers

cleanup() {

echo "Cleaning up..."

sleep 5.0

pkill -9 -f "ros2|gazebo|gz|nav2|amcl|bt_navigator|nav_to_pose|rviz2|assisted_teleop|cmd_vel_relay|robot_state_publisher|joint_state_publisher|move_to_free|mqtt|autodock|cliff_detection|moveit|move_group|basic_navigator"

}

# Set up cleanup trap

trap 'cleanup' SIGINT SIGTERM

# Check if SLAM argument is provided

if [ "$1" = "slam" ]; then

SLAM_ARG="slam:=True"

else

SLAM_ARG="slam:=False"

fi

# For cafe.world -> z:=0.20

# For house.world -> z:=0.05

# To change Gazebo camera pose: gz service -s /gui/move_to/pose --reqtype gz.msgs.GUICamera --reptype gz.msgs.Boolean --timeout 2000 --req "pose: {position: {x: 0.0, y: -2.0, z: 2.0} orientation: {x: -0.2706, y: 0.2706, z: 0.6533, w: 0.6533}}"

echo "Launching Gazebo simulation with Nav2..."

ros2 launch yahboom_rosmaster_bringup rosmaster_x3_navigation_launch.py \

enable_odom_tf:=false \

headless:=False \

load_controllers:=true \

world_file:=cafe.world \

use_rviz:=true \

use_robot_state_pub:=true \

use_sim_time:=true \

x:=0.0 \

y:=0.0 \

z:=0.20 \

roll:=0.0 \

pitch:=0.0 \

yaw:=0.0 \

"$SLAM_ARG" \

map:=/home/ubuntu/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_navigation/maps/cafe_world_map.yaml &

echo "Waiting 25 seconds for simulation to initialize..."

sleep 25

echo "Adjusting camera position..."

gz service -s /gui/move_to/pose --reqtype gz.msgs.GUICamera --reptype gz.msgs.Boolean --timeout 2000 --req "pose: {position: {x: 0.0, y: -2.0, z: 2.0} orientation: {x: -0.2706, y: 0.2706, z: 0.6533, w: 0.6533}}"

# Keep the script running until Ctrl+C

wait

Save the file, and close it.

Build the Package

Now let’s build our new package:

cd ~/ros2_wscolcon build source ~/.bashrcLaunch the Robot and Mapping

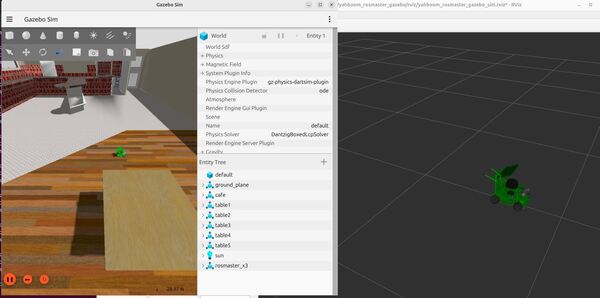

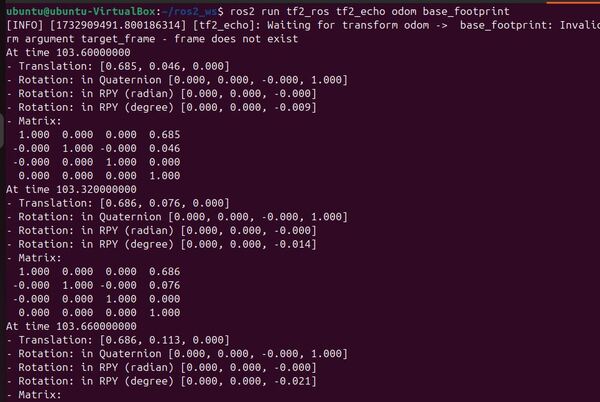

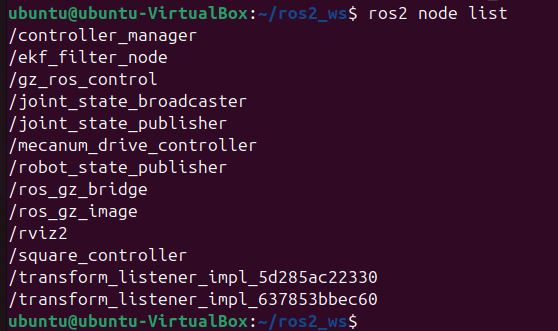

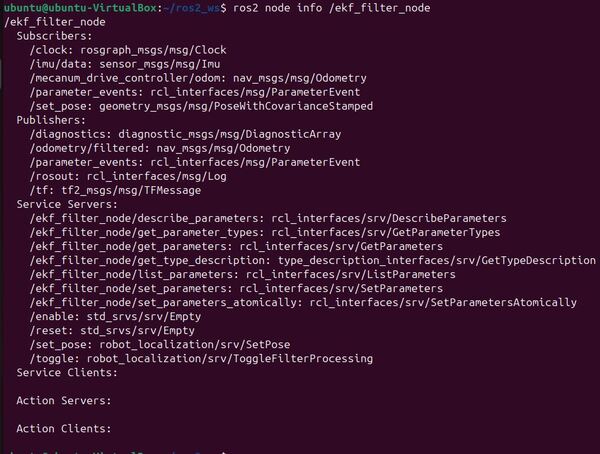

Let’s start mapping. Let’s bring our robot to life. Open a terminal window, and use this command to launch the robot:

nav slamor

bash ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_bringup/scripts/rosmaster_x3_navigation.sh slamThis command launches the Gazebo simulation with the Yahboom ROSMASTER X3 robot, and sets the slam argument to True. This tells the launch file to start the slam_toolbox node, which will perform SLAM as the robot moves around.

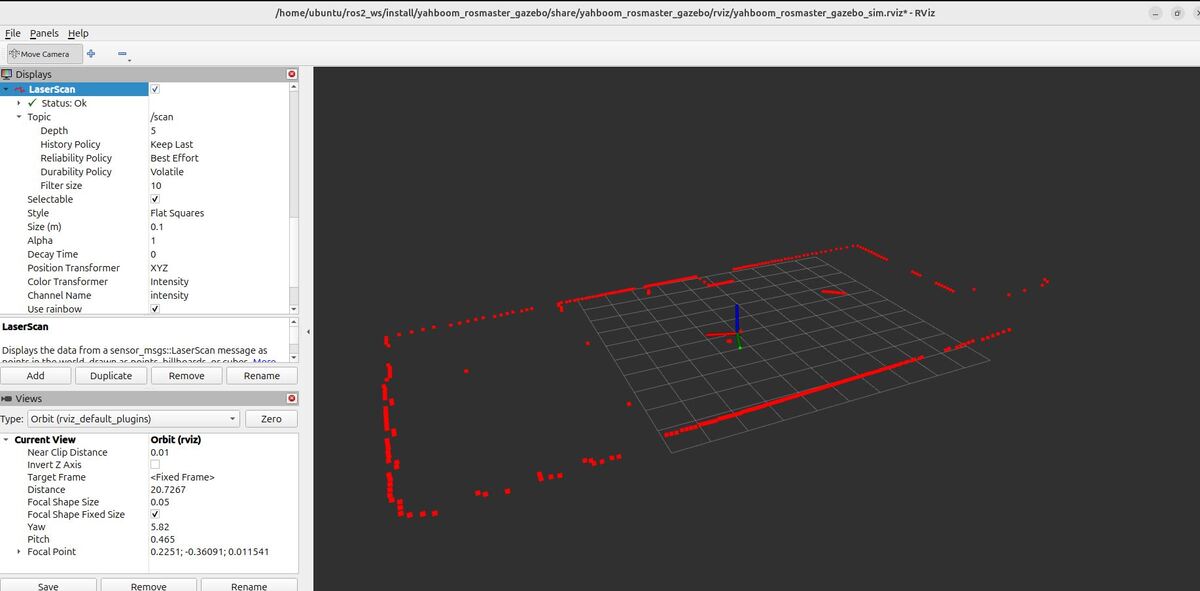

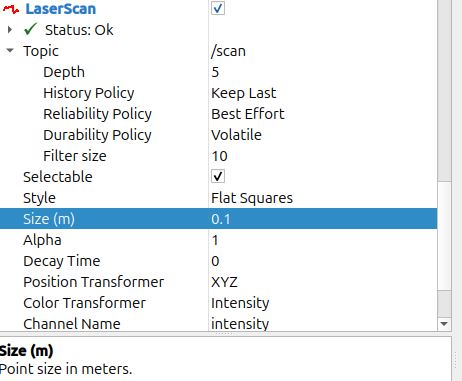

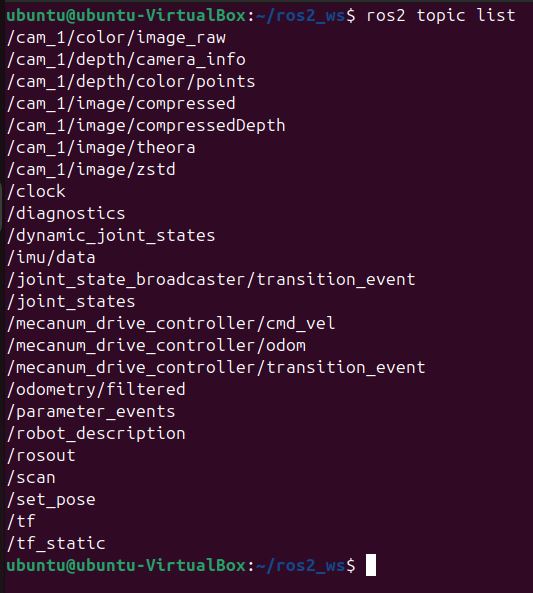

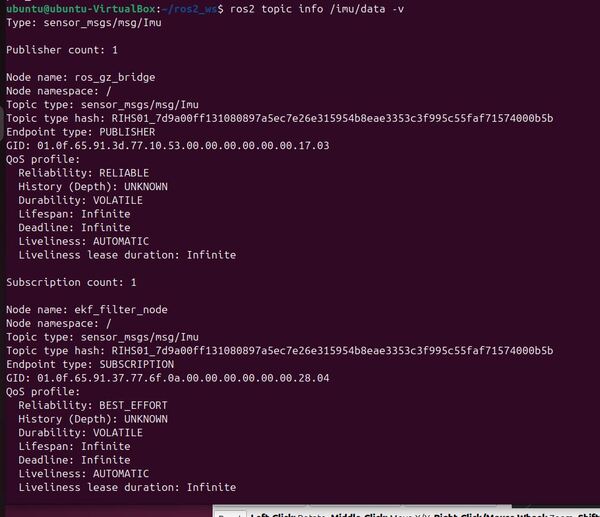

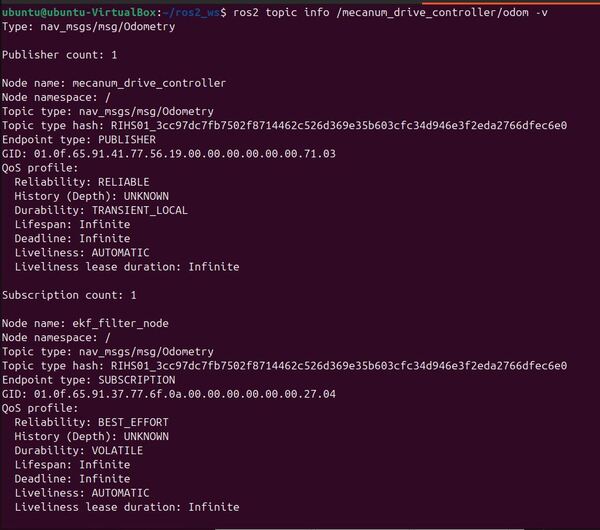

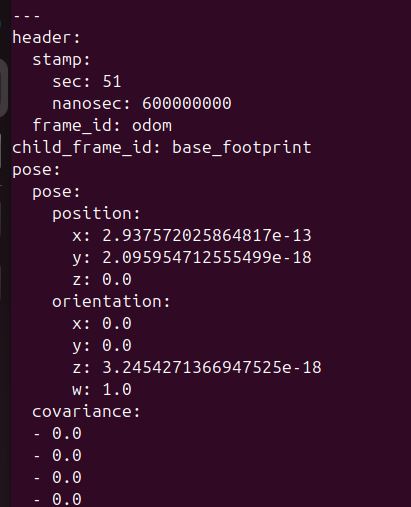

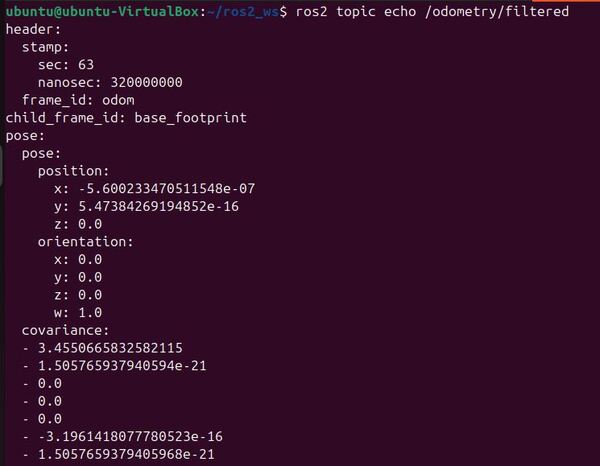

Let’s check out the velocity data. We have many topics, which you can see if you type this command:

ros2 topic listLet’s go through each velocity topic to understand the publishers and subscribers of each topic.

ros2 topic info /cmd_vel_nav -v/cmd_vel_nav (geometry_msgs/msg/Twist)

- Publishers: controller_server and behavior_server

- Subscribers: velocity_smoother

ros2 topic info /cmd_vel_smoothed -v/cmd_vel_smoothed (geometry_msgs/msg/Twist)

- Publishers: velocity_smoother

- Subscribers: collision_monitor

ros2 topic info /cmd_vel -v/cmd_vel (geometry_msgs/msg/Twist)

- Publishers: collision_monitor and docking_server

- Subscribers: cmd_vel_relay

ros2 topic info /mecanum_drive_controller/cmd_vel -v/mecanum_drive_controller/cmd_vel ( geometry_msgs/msg/TwistStamped)

- Publishers: cmd_vel_relay

- Subscribers: ros_gz_bridge (Gazebo) and mecanum_drive_controller (ROS 2 Control)

ros2 topic info /cmd_vel_teleop -v/cmd_vel_teleop (geometry_msgs/msg/Twist)

- Publishers: None

- Subscribers: behavior_server

In RViz, you’ll notice a visualization of the robot in an empty grey space. This grey space will fill in with the map as we move the robot around. Let’s do that now.

You can now either drive the robot around manually by moving the virtual joystick:

rqt_robot_steeringSet the topic to /cmd_vel.

Or you can publish a velocity command like this if rqt_robot_steering doesn’t work (it sometime doesn’t work):

ros2 topic pub /cmd_vel geometry_msgs/msg/Twist "{linear: {x: 0.2, y: 0.0, z: 0.0}, angular: {x: 0.0, y: 0.0, z: 0.5}}" --rate 10

Or you can send the robot to goals at the edge of the white (i.e. free space).

Explore all areas of the simulated environment to ensure good map coverage.

As you move the robot, you will see the map update in real-time in RViz:

- Grey areas represent unexplored space

- White areas represent free space that the robot has mapped with its laser scanner

- Black areas represent obstacles or walls that the robot has detected

The map will gradually fill in as the robot covers more ground. Drive the robot around until you have a complete map of the environment.

Some tips for mapping for real-world robots:

- Drive slowly and smoothly to minimize odometry errors and ensure clear laser scans

- Overlap your paths to give the SLAM algorithm plenty of opportunities to “close the loop” and correct for accumulated errors

- If the map starts to look distorted or misaligned, you may need to adjust the SLAM parameters or start over in a new area

Once you’re satisfied with your map, you can save it using the map_saver utility, which we’ll cover in the next section.

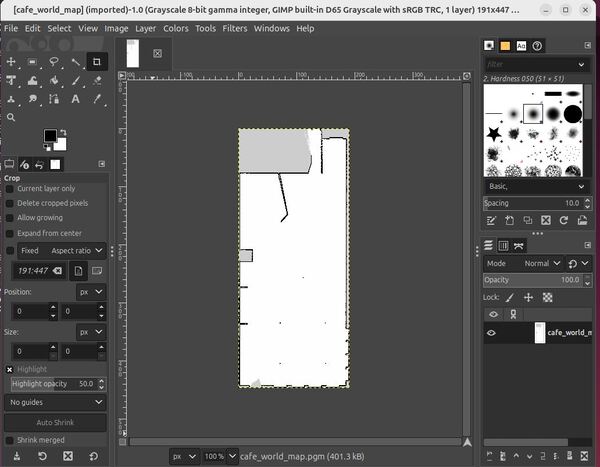

Save the Map

When see that you have a good map that you want to save, do this:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_navigation/maps/ros2 run nav2_map_server map_saver_cli -f cafe_world_mapThe syntax is:

ros2 run nav2_map_server map_saver_cli -f <map_name>Your cafe_world_map.pgm and cafe_world_map.yaml files will automatically save to the current directory.

Let’s go through the fields in the yaml file. This file provides all the necessary information to interpret the cafe_world_map.pgm image correctly, including how to scale it to real-world dimensions, where to place it in the world, and how to differentiate between free space, obstacles, and unknown areas:

image: cafe_world_map.pgm

This specifies the filename of the PGM (Portable Gray Map) image file that represents the actual occupancy grid map.

mode: trinary

The mode parameter sets the interpretation of the map data in the image. “trinary” means each pixel has three possible values – free (0-254), unknown (205), or occupied (255).

resolution: 0.05

Resolution is the real-world size in meters of each pixel in the map image. Here, each pixel represents a 5cm x 5cm area.

origin: [-5.03, -11.1, 0] (for example)

This parameter sets the origin point of the map in the real world. The coordinates [-5.03, -11.1, 0] tell you where the bottom-left corner of the map image is located in the real-world space. The first two numbers are the x and y positions, and the third number (0) is the orientation (in radians).

Suppose the origin value is [-5.03, -11.1, 0].

This means the bottom-left corner of the map image is positioned at -5.03 meters on the x-axis and -11.1 meters on the y-axis from the [x=0, y=0, yaw=0] location of the map in the real-world coordinate system.

The third value 0 indicates the orientation, which is the rotation around the z-axis. In this case, it is 0 radians, meaning no rotation.

negate: 0

This indicates whether the colors in the image need to be inverted. A value of 0 means no inversion is needed. It’s like saying the colors in the picture are already correct and don’t need to be flipped.

occupied_thresh: 0.65

This sets the threshold for determining which pixels in the image represent occupied space (e.g., walls, obstacles). If a pixel’s value is above 0.65, it is considered occupied.

free_thresh: 0.25

This sets the threshold for determining which pixels represent free space (e.g., navigable areas). If a pixel’s value is below 0.25, it is considered free.

View and Edit the Map

To view and edit the map, I recommend using a program like GIMP.

Install gimp.

sudo apt-get updatesudo apt-get install gimpcd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_navigation/maps/Run gimp.

gimp cafe_world_map.pgmOnce the map is loaded in GIMP, you can edit it. Here are some tips:

- Use the Pencil or Brush tool to draw on the map. Drawing with black (RGB 0,0,0) will add obstacles/walls. Drawing with white (RGB 255,255,255) will erase obstacles and create free space. I like to draw with white to clean out salt and pepper noise from the LIDAR.

- Use the Eraser tool to remove obstacles and create free space.

- Focus on cleaning up major errors like incorrect walls or obstacles. Don’t get too caught up in tiny details.

- Always make a backup of your original map files before editing, in case you make a mistake or want to revert changes.

After making your edits, save the file with File -> Export. Make sure to export it in the .pgm format with the same name as the original map file.

With your map cleaned up and saved, your robot will have a more accurate representation of its environment for navigation.

That’s it. Keep building!