How Does the Boltzmann Distribution Fit Into the Discussion of Epsilon Greedy Search?

In order to answer your question, let us take a closer look at the definition of epsilon greedy search. With our knowledge of how that works, we can then see how the Boltzmann distribution fits into the discussion of epsilon greedy search.

What is Epsilon Greedy Search?

When you are training an agent (e.g. race car, robot, etc.) with an algorithm like Q-learning, you can either have the agent take a random action with probability ϵ or have the agent be greedy and take the action that corresponds to its policy with probability 1-ϵ (i.e. the action for a given state that has the highest Q-value). The former is known as exploration while the latter is called exploitation. In reinforcement learning, we have this constant dichotomy of:

- exploration vs. exploitation

- learn vs. earn

- not greedy vs. greedy

- Exploration: Try a new bar in your city.

- Exploitation: Go to the same watering hole you have been going to for decades.

- Exploration: Start a business.

- Exploitation: Get a job.

- Exploration: Try to make new friends.

- Exploitation: Keep inviting over your college buddies.

- Exploration: Download Tinder dating app.

- Exploitation: Call the ex.

- Exploration (with probability ϵ): Gather more information about the environment.

- Exploitation (with probability 1-ϵ): Make a decision based on the best information (i.e. policy) that is currently available.

The epsilon greedy algorithm in which ϵ is 0.20 says that most of the time the agent will select the trusted action a, the one prescribed by its policy π(s) -> a. However, 20% of the time, the agent will choose a random action instead of following its policy.

We often want to have the epsilon-greedy algorithm in place for a reinforcement learning problem because often what is best for the agent long term (e.g. trying something totally random that pays off in a big way down the road) might not be the best for the agent in the short term (e.g. sticking with the best option we already know).

What Does the Boltzmann Distribution Have to Do With Epsilon Greedy Search?

Notice in the epsilon greedy search section above, I said that 20% of the time the agent will choose a random action instead of following its policy. The problem with this is that it treats all actions equally when making a decision on what action to take. What happens though if some actions might look more promising than others? Plain old epsilon greedy search cannot handle a situation like this. The fact is that, in the real world, all actions are not created equal.

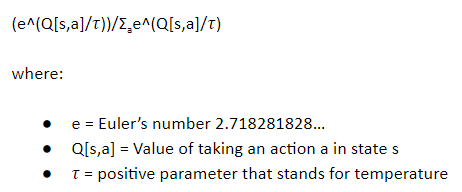

A common method is to use the Boltzmann distribution (also known as Gibbs distribution). Rather than blindly accepting any random action when it comes time for the agent to explore the environment from a given state s, the agent selections an action a (from a set of actions A) with probability:

What this system is doing above is ranking and weighting all actions in the set of possible actions based on their Q-values. This system is often referred to as softmax selection rules.

Take a closer look at the equation above to see what we are doing here. A really high value of tau means that all actions are equally likely to be selected because we are diluting the impact of the Q-values for each action (by dividing by tau). However, as tau gets lower and lower, there will be greater differences in the selection probabilities for each action. The action with the highest Q[s,a] value is therefore much more likely to get selected. And when tau gets close to zero, the Boltzmann selection criteria I outlined above becomes indistinguishable from greedy search. For an extremely low value of tau, the agent will select the action with the highest Q-value and therefore never explore the environment via a random action.