In this tutorial, we will write a program to detect corners on random objects.

Our goal is to build an early prototype of a vision system that can make it easier and faster for robots to identify potential grasp points on unknown objects.

Real-World Applications

The first real-world application that comes to mind is Dr. Pieter Abbeel (a famous robotics professor at UC Berkeley) and his laundry-folding robot.

Dr. Abbeel used the Harris Corner Detector algorithm as a baseline for evaluating how well the robot was able to identify potential grasp points on a variety of laundry items. You can check out his paper at this link.

If the robot can identify where to grasp a towel, for example, it can then compute the inverse kinematics needed to move its arm so that the gripper (i.e., the end effector) grasps the corner of the towel and begins the folding process.

Prerequisites

- You have Python 3.7 or higher, with the OpenCV (cv2) and NumPy packages installed

Requirements

Here are the requirements:

- Create a program that can detect corners on an unknown object using the Harris Corner Detector algorithm.

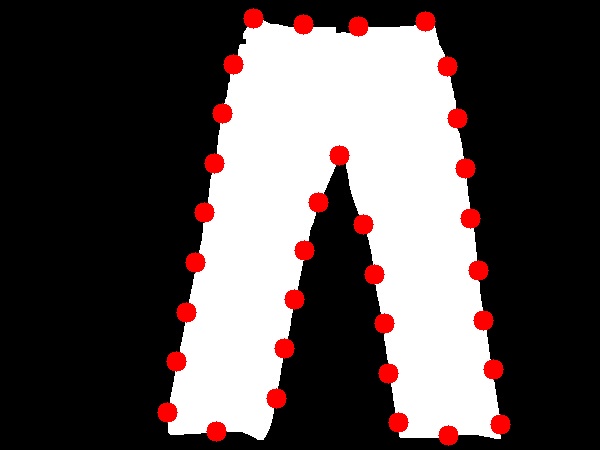

Detecting Corners on Jeans

We’ll start with the pair of jeans above. Save that image to a folder on your computer as jeans.jpg.

Now, in the same folder where you saved that image, create a new Python program.

Name the program harris_corner_detection.py.

Write the following code:

# Project: Detect the Corners of Objects Using Harris Corner Detector

# Author: Addison Sears-Collins

# Date created: October 7, 2020

# Reference: https://stackoverflow.com/questions/7263621/how-to-find-corners-on-a-image-using-opencv/50556944

import cv2 # OpenCV library

import numpy as np # NumPy scientific computing library

import math # Mathematical functions

# The file name of your image goes here

fileName = 'jeans.jpg'

# Read the image file

img = cv2.imread(fileName)

# Convert the image to grayscale

gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

######## The code in this block is optional #########

## Turn the image into a black and white image and remove noise

## using opening and closing

#gray = cv2.threshold(gray, 75, 255, cv2.THRESH_BINARY)[1]

#kernel = np.ones((5,5),np.uint8)

#gray = cv2.morphologyEx(gray, cv2.MORPH_OPEN, kernel)

#gray = cv2.morphologyEx(gray, cv2.MORPH_CLOSE, kernel)

## To create a black and white image, it is also possible to use OpenCV's

## background subtraction methods to locate the object in a real-time video stream

## and remove shadows.

## See the following links for examples

## (e.g. Absolute Difference method, BackgroundSubtractorMOG2, etc.):

## https://automaticaddison.com/real-time-object-tracking-using-opencv-and-a-webcam/

## https://automaticaddison.com/motion-detection-using-opencv-on-raspberry-pi-4/

############### End optional block ##################

# Apply a bilateral filter.

# This filter smooths the image, reduces noise, while preserving the edges

bi = cv2.bilateralFilter(gray, 5, 75, 75)

# Apply Harris Corner detection.

# The four parameters are:
# src: The input image (single-channel, 8-bit or float32)
# blockSize: The size of the neighborhood considered for corner detection
# ksize: Aperture parameter of the Sobel derivative used
# k: Harris detector free parameter

# --You can tweak this parameter to get better results

# --0.02 for tshirt, 0.04 for washcloth, 0.02 for jeans, 0.05 for contour_thresh_jeans

# Source: https://docs.opencv.org/3.4/dc/d0d/tutorial_py_features_harris.html

dst = cv2.cornerHarris(bi, 2, 3, 0.02)

# Dilate the result to mark the corners

dst = cv2.dilate(dst,None)

# Create a mask to identify corners

mask = np.zeros_like(gray)

# All pixels above a certain threshold are converted to white

mask[dst>0.01*dst.max()] = 255

# Convert corners from white to red.

#img[dst > 0.01 * dst.max()] = [0, 0, 255]

# Create an array that lists all the pixels that are corners

coordinates = np.argwhere(mask)

# Convert array of arrays to lists of lists

coordinates_list = [l.tolist() for l in list(coordinates)]

# Convert list to tuples

coordinates_tuples = [tuple(l) for l in coordinates_list]

# Create a distance threshold

thresh = 50

# Compute the Euclidean distance between two corner coordinates
def distance(pt1, pt2):
    (x1, y1), (x2, y2) = pt1, pt2
    dist = math.sqrt( (x2 - x1)**2 + (y2 - y1)**2 )
    return dist

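# Keep only corners that are at least thresh pixels away from every corner
# already kept, so each cluster of nearby pixels yields a single corner.
# (Building a new list avoids removing items from a list while looping over it.)
kept_corners = []
for pt in coordinates_tuples:
    if all(distance(pt, kept) >= thresh for kept in kept_corners):
        kept_corners.append(pt)
coordinates_tuples = kept_corners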

# Place the corners on a copy of the original image

img2 = img.copy()

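# np.argwhere returns (row, column) pairs, while OpenCV drawing functions
# expect (x, y), so each point is reversed before use
for pt in coordinates_tuples:
    print(tuple(reversed(pt))) # Print corners to the screen
    cv2.circle(img2, tuple(reversed(pt)), 10, (0, 0, 255), -1)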

cv2.imshow('Image with 4 corners', img2)

cv2.imwrite('harris_corners_jeans.jpg', img2)

# Exit OpenCV
if cv2.waitKey(0) & 0xff == 27:
    cv2.destroyAllWindows()

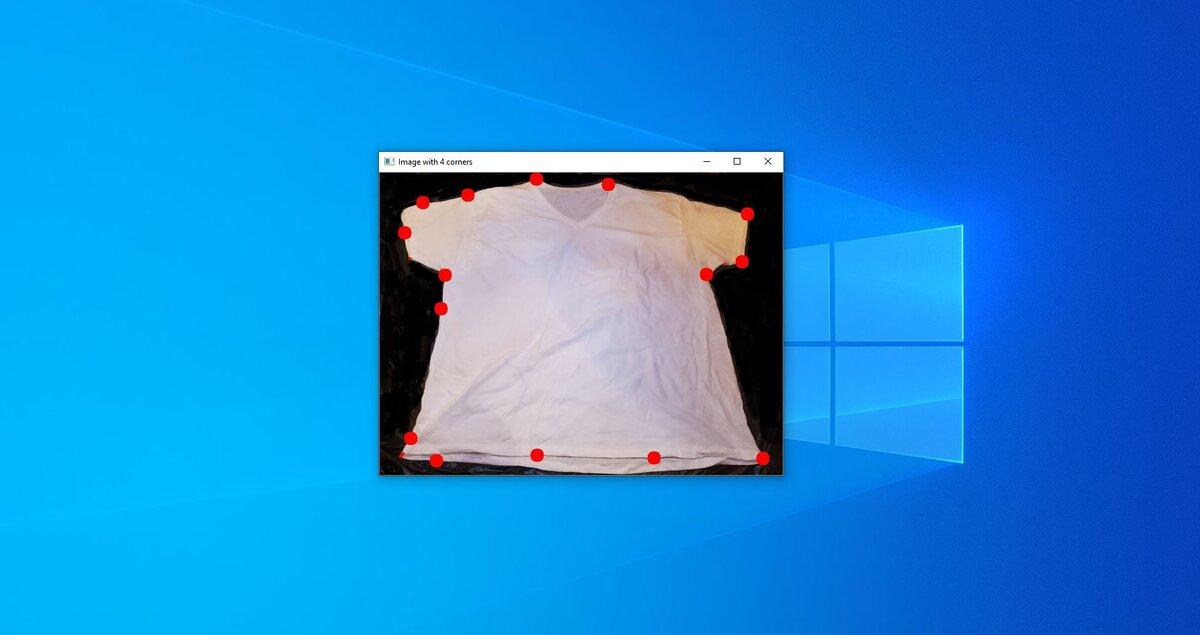

Run the code. Here is what you should see:
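The distance-threshold step in the program collapses each cluster of nearby corner pixels into a single point. A minimal standalone sketch of that idea, using made-up coordinates and a hypothetical helper name (`filter_close_points`), might look like this:

```python
import math

def filter_close_points(points, min_dist):
    """Greedily keep points that are at least min_dist from every point kept so far."""
    kept = []
    for pt in points:
        if all(math.hypot(pt[0] - other[0], pt[1] - other[1]) >= min_dist
               for other in kept):
            kept.append(pt)
    return kept

# Made-up (row, column) corner pixels: two tight clusters and one isolated point
corners = [(10, 10), (11, 10), (12, 12), (200, 50), (201, 52), (400, 400)]

# Prints one representative point per cluster
print(filter_close_points(corners, 50))
```

The first point seen in each cluster wins, so the result depends on the order of the input list. That is usually acceptable for a prototype, but it is a design choice rather than the only option.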

Now, if you uncomment the optional block in the code, a window pops up showing a binary black and white image of the jeans. The purpose of the optional block is to remove some of the noise that is present in the input image.

Notice how when we remove the noise, we get a lot more potential grasp points (i.e. corners).

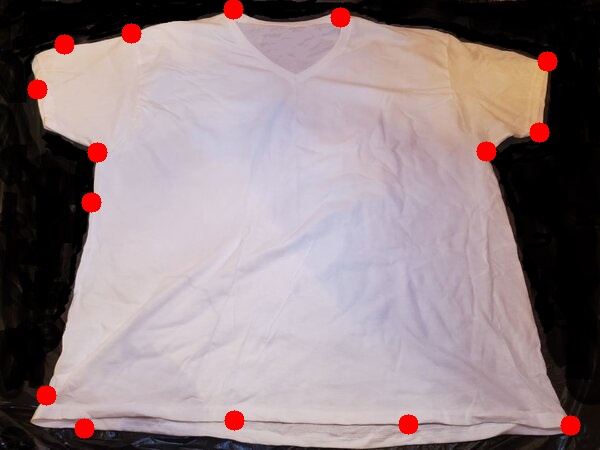
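To make the noise-removal idea in the optional block more concrete, here is a toy, pure-Python illustration of morphological opening (erosion followed by dilation) on a tiny binary grid. It is not OpenCV's implementation: the helper names and the 7x7 grid are made up for illustration, and it uses a 3x3 window rather than the 5x5 kernel in the program.

```python
def _window(img, i, j, r):
    """Yield the in-bounds pixels of the (2r+1)x(2r+1) window centered on (i, j)."""
    h, w = len(img), len(img[0])
    for y in range(max(0, i - r), min(h, i + r + 1)):
        for x in range(max(0, j - r), min(w, j + r + 1)):
            yield img[y][x]

def erode(img, r=1):
    """A pixel stays 1 only if every pixel in its window is 1."""
    return [[int(all(_window(img, i, j, r))) for j in range(len(img[0]))]
            for i in range(len(img))]

def dilate(img, r=1):
    """A pixel becomes 1 if any pixel in its window is 1."""
    return [[int(any(_window(img, i, j, r))) for j in range(len(img[0]))]
            for i in range(len(img))]

def opening(img, r=1):
    """Erosion followed by dilation: removes specks smaller than the window."""
    return dilate(erode(img, r), r)

# Hypothetical binary image: a 3x3 object plus a single-pixel noise speck
grid = [[0] * 7 for _ in range(7)]
for i in range(1, 4):
    for j in range(1, 4):
        grid[i][j] = 1
grid[5][5] = 1

opened = opening(grid)
for row in opened:
    print("".join("#" if v else "." for v in row))
```

In this toy case the 3x3 object survives unchanged while the isolated pixel disappears. Closing is the reverse order (dilation, then erosion) and fills small holes instead.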

Detecting Corners on a T-Shirt

To detect corners on a t-shirt, you’ll need to tweak the fourth parameter on this line of the code. I use 0.02 typically, but you can try another value like 0.04:

# 0.02 for tshirt, 0.04 for washcloth, 0.02 for jeans, 0.05 for contour_thresh_jeans

# Source: https://docs.opencv.org/3.4/dc/d0d/tutorial_py_features_harris.html

dst = cv2.cornerHarris(bi, 2, 3, 0.02)
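To see why this fourth parameter matters, recall that the Harris detector scores each pixel with R = det(M) - k * trace(M)^2, where M is the 2x2 matrix of summed gradient products over the neighborhood. The sketch below evaluates that score by hand for three made-up matrices (a corner, an edge, and a flat patch). The function name and the sample numbers are illustrative and are not part of OpenCV.

```python
def harris_response(sxx, syy, sxy, k):
    """Harris score R = det(M) - k * trace(M)^2 for M = [[sxx, sxy], [sxy, syy]]."""
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace

# Hypothetical gradient sums for three kinds of image patch
patches = {
    "corner": (100.0, 100.0, 0.0),  # strong gradients in both directions
    "edge": (100.0, 1.0, 0.0),      # strong gradient in one direction only
    "flat": (1.0, 1.0, 0.0),        # almost no gradient
}

for k in (0.02, 0.04):
    scores = {name: harris_response(*m, k) for name, m in patches.items()}
    print(k, scores)
```

With these numbers the corner patch scores strongly positive and the edge patch scores negative for both values of k, and raising k lowers every score. A smaller k is therefore more permissive, which is one reason a different value may suit a different item.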

Let’s detect the corners on an ordinary t-shirt.

Here is the input image (tshirt.jpg):

Change the fileName variable in your code so that it is assigned the name of the image (‘tshirt.jpg’).
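Rather than editing the program for every item, the per-item settings could be kept in a small lookup table. The sketch below is one possible arrangement, not the original program: the names `HARRIS_K`, `harris_k_for`, and `harris_response_map` are hypothetical, the values come from the comment in the code above, and the fallback of 0.04 is an assumption.

```python
# Harris free-parameter values quoted in the comment in the tutorial code
HARRIS_K = {
    "jeans.jpg": 0.02,
    "tshirt.jpg": 0.02,
    "washcloth.jpg": 0.04,
}

def harris_k_for(file_name, default=0.04):
    """Return the Harris free parameter for an image (default is an assumption)."""
    return HARRIS_K.get(file_name, default)

def harris_response_map(file_name):
    """Run the grayscale -> bilateral filter -> Harris steps for one image."""
    import cv2  # imported here so the lookup above works without OpenCV installed
    img = cv2.imread(file_name)
    if img is None:  # cv2.imread returns None when the file cannot be read
        raise FileNotFoundError(file_name)
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    bi = cv2.bilateralFilter(gray, 5, 75, 75)
    return cv2.cornerHarris(bi, 2, 3, harris_k_for(file_name))

print(harris_k_for("tshirt.jpg"))
```

Calling `harris_response_map('tshirt.jpg')` would then return the same kind of response array that the program stores in `dst`, ready for the dilation and thresholding steps.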

Here is the output image:

Detecting Corners on a Washcloth

Input Image (washcloth.jpg):

Output image:

That’s it. Keep building!