Classification algorithms like Logistic Regression and Naive Bayes only work on linearly separable problems.

What Does Linearly Separable Mean?

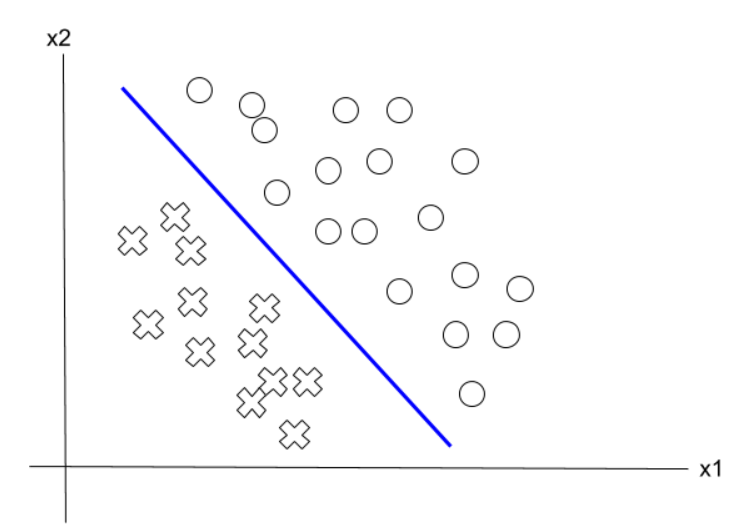

Consider a data set with two attributes x1 and x2 and two classes 0 and 1. Let class 0 = o and class 1 = x.

A straight line (or plane) can be used to separate the two classes (i.e. the x’s from the o’s). In other words, a single decision surface can be used as the boundary between both classes.

When the data is nonlinearly separable, we cannot use linear models. We must instead use models that can handle nonlinearly separable data.

What Does Nonlinearly Separable Mean?

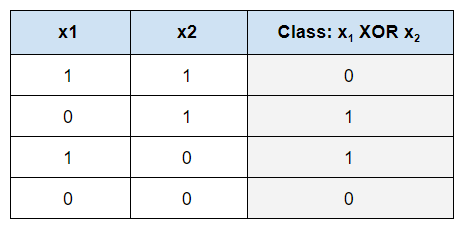

Here is an example. Consider the XOR function acting on training data that has two attributes x1 and x2 and a class (0 or 1).

The function x1 XOR x2 has the following truth table:

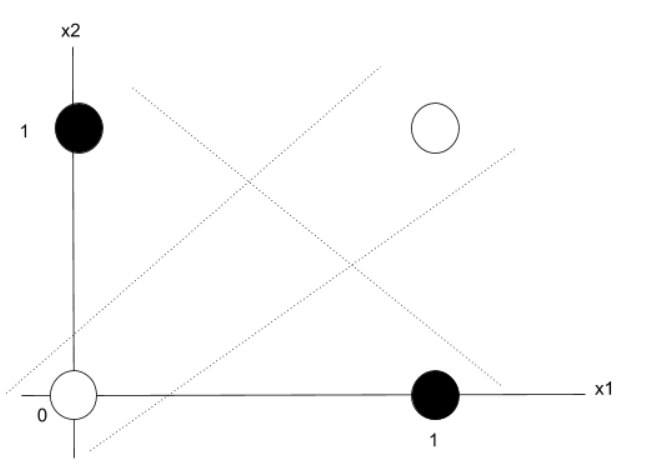

Now let us graph this function. For the class, black circles = 1 and white circles = 0.

We could try all day to try to place a line on the graph that can separate the classes, and we still would not succeed. This problem is known as the XOR problem and is a clear example of when linear classifiers like Naive Bayes and Logistic Regression would not work, and we would need to consider other types of machine learning models that can handle nonlinearly separable data.