Precise docking is a key capability for autonomous mobile robots. While Nav2 excels at general path planning and obstacle avoidance, docking often requires more accurate position information than standard navigation provides. This is where AprilTags come in – they’re visual markers that help robots determine their exact position and orientation relative to a docking station.

In this tutorial, we’ll implement automated docking by combining Nav2’s docking server with AprilTag detection. We’ll mount an AprilTag near our docking station and configure our robot to use it as a visual reference point. The Nav2 docking server will handle the approach and final alignment, using the AprilTag’s position data to guide the robot into the dock. Here is what you will be able to build by the end of this tutorial:

We’ll build this system in clear steps:

- Set up AprilTag detection to process our robot’s camera images

- Configure the Nav2 docking server to use AprilTag data

- Test our docking system in simulation

In this tutorial, we will implement AprilTag detection using ROS 2, giving you hands-on experience with an important technology in robot vision. We will use the CPU version of AprilTag detection from ROS 2. You can find a GPU-accelerated drop-in replacement for this CPU version here on the NVIDIA Isaac website.

Real-World Applications

AprilTags are used in many real-world situations. Here are some examples:

- Robotic Arms:

- Helping robotic arms in factories pick up parts from exact locations

- Guiding robotic arms in fast food restaurants, like those made by Miso Robotics, to assist with cooking tasks

- Mobile Robots:

- Helping delivery robots navigate through office buildings or hospitals

- Assisting mobile robots to dock precisely at battery charging stations

- Drones:

- Enabling drones to land accurately on specific locations marked with AprilTags

- Guiding drones for indoor inspections where GPS doesn’t work well

Prerequisites

- You have completed this tutorial: Getting Started With the Simple Commander API – ROS 2 Jazzy.

All my code for this project is located here on GitHub.

What Are AprilTags?

AprilTags are visual markers designed specifically for robotics and computer vision applications. These tags appear as small black and white squares with unique patterns inside. Their primary purpose is to help robots and cameras quickly determine their position and orientation in space.

When a robot’s camera spots an AprilTag, it can instantly calculate how far away the tag is, what angle it’s viewing the tag from, and which specific tag it’s looking at, as each tag has a unique identifier.

AprilTags offer several advantages:

- They’re inexpensive to produce and can be quickly printed on a plain sheet of white paper

- They perform well in various lighting conditions

- They allow for fast detection – an important feature for real-time applications.

Why Use AprilTags Instead of ArUco Markers?

You may have heard of another type of visual tag used in robotics called ArUco markers. ArUco markers are similar to AprilTags. They look like small black and white squares with patterns inside, much like AprilTags. However, AprilTags have some advantages:

- Better in Difficult Situations: AprilTags work better when lighting is not perfect or when part of the tag is hidden.

- More Accurate: AprilTags give robots a more precise idea of where they are, especially when far away or viewed at odd angles.

- Faster to Identify: Robots can usually find and read AprilTags more quickly than with ArUco markers, which is important when things need to happen fast.

- Fewer Mistakes: AprilTags are less likely to be confused with other objects or misread.

What Do We Need to Do?

Remember Automatic Addison’s 10 Ps of robotics: Prior Proper Planning Prevents Poor Performance. Poor Performance Promotes Pain.

Let’s begin with the end in mind and think through all the steps we need to take to get to our end goal, which is to have our robot dock to a docking station using the Nav2 Docking Server.

First, we need to add an AprilTag to our cafe world environment in Gazebo. This tag will serve as the visual marker for our robot’s docking station. The tag needs to be placed at a specific position and orientation that makes sense for docking.

Now open a new terminal window, and type the following command to launch the robot. Wait until Gazebo and RViz come fully up, which can take up to 30 seconds:

navor

bash ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_bringup/scripts/rosmaster_x3_navigation.sh Type this command in the terminal window:

ros2 topic list | grep cam_1Our robot’s depth camera currently publishes raw images to /cam_1/color/image_raw as well as camera information to /cam_1/color/camera_info.

To see the image on /cam_1/color/image_raw, type:

sudo apt-get install ros-$ROS_DISTRO-image-viewThen run the image viewer:

ros2 run image_view image_view --ros-args -r image:=cam_1/color/image_rawYou can move your robot around the room.

For these raw camera images to be useful for AprilTag detection, we need to process them through a ROS 2 package called image_proc. We will use the rectify node to achieve this. This node:

Subscribes to:

- /cam_1/color/image_raw (sensor_msgs/msg/Image)

- /cam_1/color/camera_info (sensor_msgs/msg/CameraInfo)

Publishes:

- /cam_1/color/image_rect (sensor_msgs/msg/Image)

Why did we have to do this step? For AprilTag detection, we need rectified images, which remove camera lens distortion. The camera’s lens naturally bends the image a bit (like looking through a curved glass), which can make the square tags look slightly warped – rectification fixes this so the tags are easier to detect.

With our rectified images ready, we will use the CPU-based apriltag_ros package for detection. This node:

Subscribes to:

- /cam_1/color/image_rect (sensor_msgs/msg/Image)

- /cam_1/color/camera_info (sensor_msgs/msg/CameraInfo)

Publishes:

- /tf (tf2_msgs/msg/TFMessage)

- detections (apriltag_msgs/msg/AprilTagDetectionArray)

Alternatively, if you have an NVIDIA GPU, you could use Isaac ROS AprilTag. This node would:

Subscribe to:

- /cam_1/color/image_rect (sensor_msgs/msg/Image)

- /cam_1/color/camera_info (sensor_msgs/msg/CameraInfo)

Publish:

- tag_detections (isaac_ros_apriltag_interfaces/msg/AprilTagDetectionArray)

- tf (tf2_msgs/msg/TFMessage)

The Nav2 Docking Server uses these AprilTag detections through its SimpleChargingDock plugin. Looking at the SimpleChargingDock source code, this plugin specifically subscribes to:

- detected_dock_pose (geometry_msgs/msg/PoseStamped) – For getting the current pose of the dock

The complete pipeline works like this:

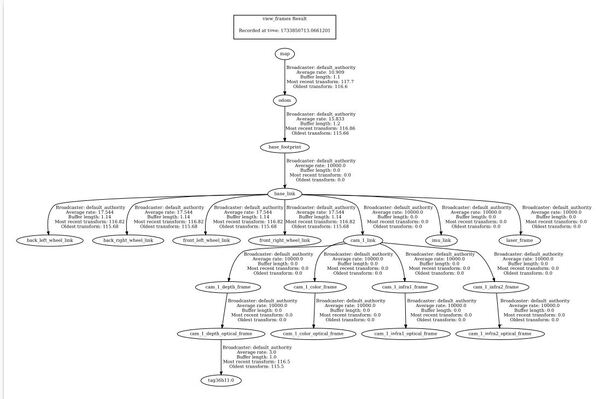

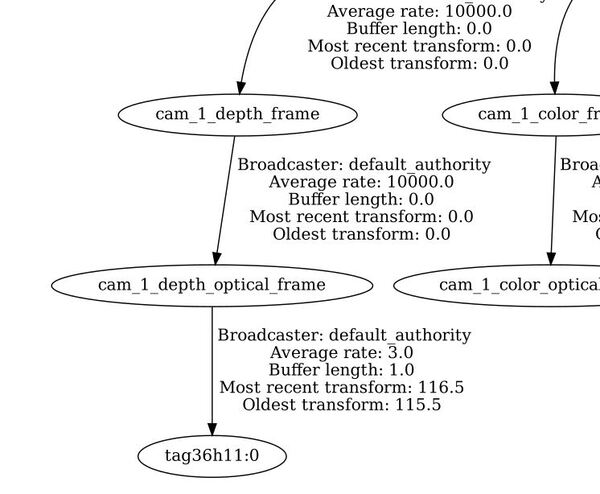

- apriltag_ros (or isaac_ros_apriltag) detects our tag (ID 0 from family 36h11) and publishes the transform:

- Parent frame: cam_1_depth_optical_frame (our camera’s optical frame)

- Child frame: tag36h11:0 (our AprilTag’s frame)

- We’ll need to create a node to add to our yahboom_rosmaster_navigation package that:

- Listens for this transform from the camera’s optical frame to the tag

- Publishes this as a PoseStamped message to the detected_dock_pose topic with the “odom” frame as the default frame_id in the header.

- We will follow the reference code here.

- The SimpleChargingDock plugin then:

- Handles any needed frame transformations internally using its tf2 buffer

- Applies configured offsets to determine the actual docking pose (since our tag will be high on the wall above the docking station)

- Computes a staging pose offset from the docking pose

- Uses either battery state or distance thresholds to determine when we’re successfully docked

Once we have all our components set up, we can test the docking system in two ways:

- Through RViz:

- Through a demo script by using the Simple Commander API.

In the next sections, we’ll implement each of these components step by step, starting with setting up a new package.

Create a New Package

Open a new terminal window and type:

cd ~/ros2_ws/src/yahboom_rosmaster/ros2 pkg create --build-type ament_cmake \

--license BSD-3-Clause \

--maintainer-name ubuntu \

--maintainer-email automaticaddison@todo.com \

yahboom_rosmaster_dockingEdit the package.xml file in this new package to add these dependencies:

<depend>rclcpp</depend>

<depend>rclpy</depend>

<depend>apriltag_ros</depend>

<depend>apriltag_msgs</depend>

<depend>image_proc</depend>

<depend>image_view</depend>

<depend>geometry_msgs</depend>

<depend>nav2_simple_commander</depend>

<depend>tf2_ros</depend>

You can also edit CMakeLists.txt to add these package dependencies.

# find dependencies

find_package(ament_cmake REQUIRED)

find_package(ament_cmake_python REQUIRED)

find_package(geometry_msgs REQUIRED)

find_package(rclcpp REQUIRED)

find_package(rclpy REQUIRED)

find_package(apriltag_ros REQUIRED)

find_package(apriltag_msgs REQUIRED)

find_package(image_proc REQUIRED)

find_package(geometry_msgs REQUIRED)

find_package(tf2_ros REQUIRED)

Update the package.xml file inside the metapackage yahboom_rosmaster.

<exec_depend>yahboom_rosmaster_bringup</exec_depend>

<exec_depend>yahboom_rosmaster_description</exec_depend>

<exec_depend>yahboom_rosmaster_docking</exec_depend>

<exec_depend>yahboom_rosmaster_gazebo</exec_depend>

<exec_depend>yahboom_rosmaster_localization</exec_depend>

<exec_depend>yahboom_rosmaster_navigation</exec_depend>

<exec_depend>yahboom_rosmaster_system_tests</exec_depend>

Now build your workspace.

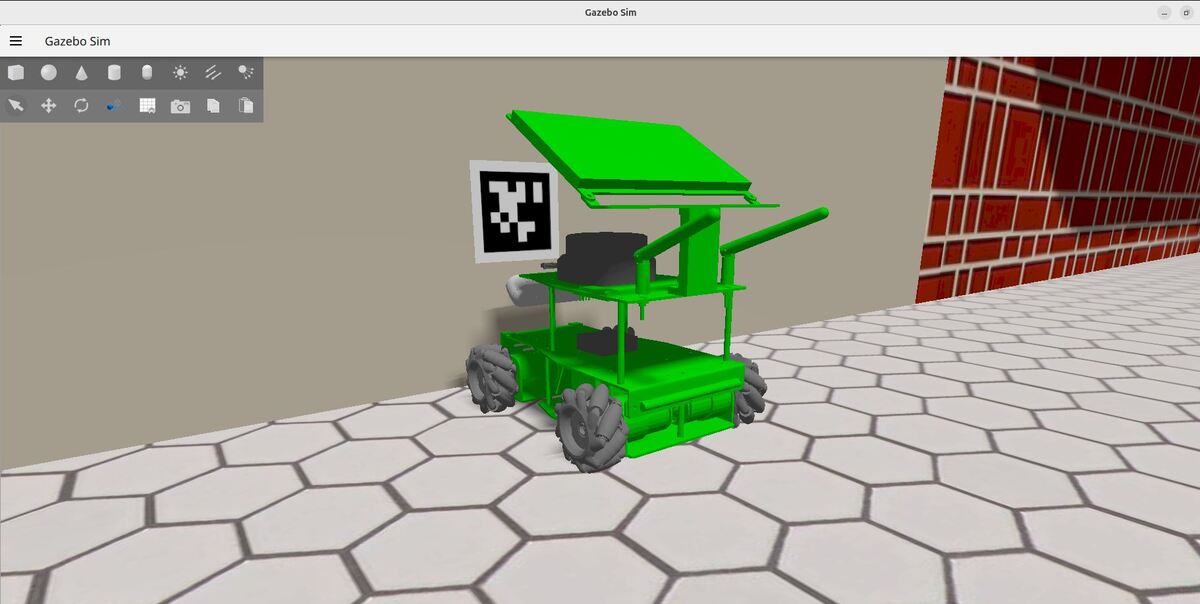

cd ~/ros2_ws/rosdep install --from-paths src --ignore-src -r -ycolcon build && source ~/.bashrcAdd an AprilTag To Your Gazebo World

In this section, we’ll add an AprilTag to our cafe world.

The AprilTag is in Simulation Description Format (SDF), the default model format for Gazebo.

Open a terminal window, and type this:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_gazebo/models/Type:

dirYou can see we have an AprilTag that represents the number 0.

Apriltag36_11_00000

In another terminal, navigate to this file:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_gazebo/worlds/Open cafe.world.

We’ll be adding the AprilTag model to the wall.

Add this code.

<include>

<name>Apriltag36_11_00000</name>

<pose>-4.96 1.5 0.55 1.5708 0 1.5708</pose>

<static>true</static>

<uri>model://Apriltag36_11_00000</uri>

</include>

Save the changes, and close the file.

To see your cafe.world file in action, type the following command to let Gazebo know how to find the models:

export GZ_SIM_RESOURCE_PATH=/home/ubuntu/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_gazebo/modelsNow launch your Gazebo world:

gz sim cafe.worldYour Gazebo world should now include the April Tag.

Remember to adjust the pose of the AprilTag as needed to ensure it is visible and correctly placed in your specific world layout.

Convert the Raw Camera Images into Rectified Images

Let’s create a launch file that will use the image_proc package’s rectify node to do the following:

Subscribes to:

- /cam_1/color/image_raw (sensor_msgs/msg/Image)

- /cam_1/color/camera_info (sensor_msgs/msg/CameraInfo)

Publishes:

- /cam_1/color/image_rect (sensor_msgs/msg/Image)

Open a terminal window, and type:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_docking/mkdir launchAdd this file to the launch folder:

apriltag_dock_pose_publisher.launch.pySave the file, and close it.

Edit CMakeLists.txt.

Add these lines:

# Copy necessary files to designated locations in the project

install (

DIRECTORY launch

DESTINATION share/${PROJECT_NAME}

)

Save the file, and close it.

Build your workspace.

cd ~/ros2_ws/colcon build && source ~/.bashrcLaunch the robot.

navOnce everything is up, type:

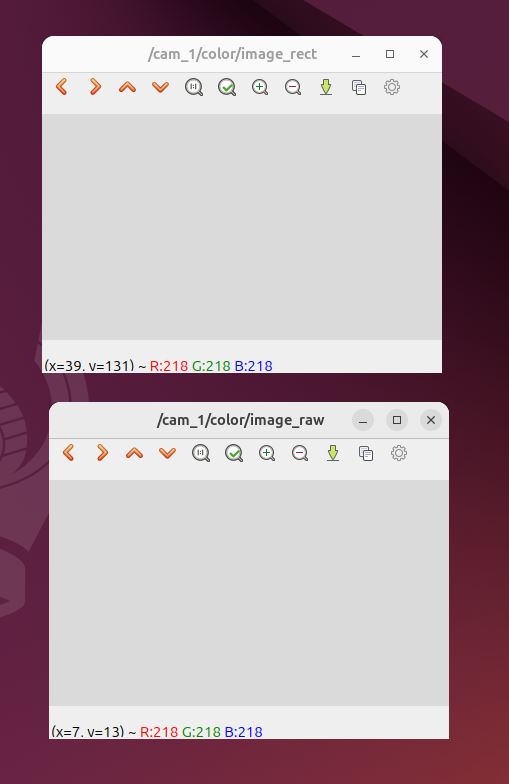

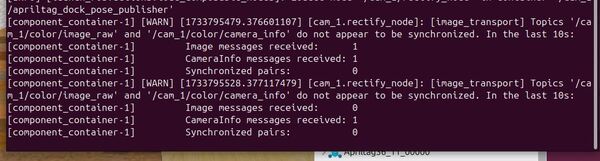

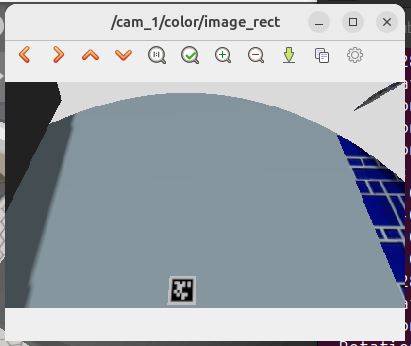

ros2 launch yahboom_rosmaster_docking apriltag_dock_pose_publisher.launch.pyTo see the rectified image:

ros2 run image_view image_view --ros-args -r image:=/cam_1/color/image_rectRun the image viewer to see the raw input image:

ros2 run image_view image_view --ros-args -r image:=cam_1/color/image_rawYou should see a light grey square in both images.

Type this command in the terminal window:

ros2 topic list | grep cam_1

You can safely ignore the synchronization warnings. These warnings happen from time to time due to minor timing misalignments with Gazebo.

The important thing is that you can see the rectified image properly. Feel free to move the robot around.

Publish the April Tag Pose Using the apriltag_ros Package

We will now use the apriltag_ros package to publish the pose of the AprilTag with respect to the camera’s optical frame.

Specifically, we will detect our tag (ID 0 from family 36h11) in the rectified camera image and then publish the following coordinate transformation:

- Parent frame: cam_1_depth_optical_frame (our camera’s optical frame)

- Child frame: tag36h11:0 (our AprilTag’s frame)

Let’s start by creating a configuration file.

Open a terminal window, and type:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_docking/mkdir configAdd this file to the config folder:

apriltags_36h11.yamlSave the file, and close it.

Edit CMakeLists.txt.

Add these lines:

# Copy necessary files to designated locations in the project

install (

DIRECTORY config launch

DESTINATION share/${PROJECT_NAME}

)

Save the file, and close it.

Build your workspace.

cd ~/ros2_ws/colcon build && source ~/.bashrcLet’s test our AprilTag detection pipeline. First, make sure your robot is running:

navOnce your robot is up and running, open a new terminal and launch our AprilTag detection nodes:

ros2 launch yahboom_rosmaster_docking apriltag_dock_pose_publisher.launch.pyNavigate your robot to where it can see the AprilTag in its front camera.

Now let’s verify that our AprilTag detection pipeline is working correctly. Open a new terminal and check if we’re receiving AprilTag detections:

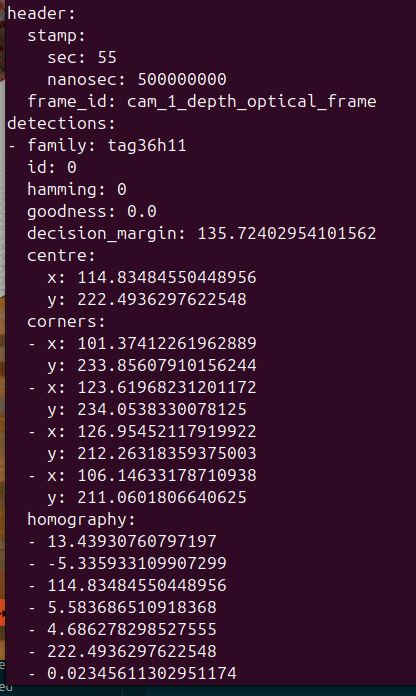

ros2 topic echo /cam_1/detectionsYou should see detection messages whenever your robot’s camera sees the AprilTag. These messages include information about the tag’s ID, position, and detection confidence.

To verify the coordinate transformations are being published correctly, use the tf2_tools package:

ros2 run tf2_tools view_framesThis command will generate a PDF file showing the complete transform tree. Look for a transform from cam_1_depth_optical_frame to tag36h11:0.

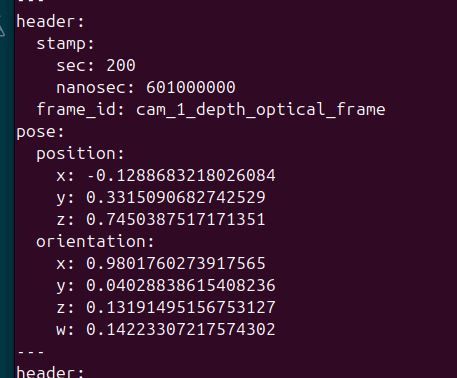

To see these transforms in real-time, open another terminal and type:

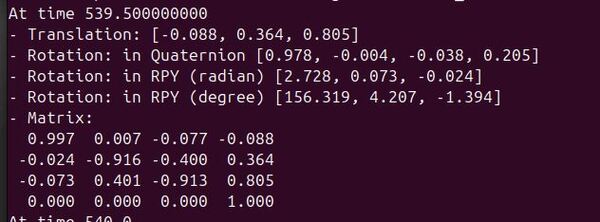

ros2 run tf2_ros tf2_echo cam_1_depth_optical_frame tag36h11:0This will display the position and orientation of the tag relative to your robot’s camera optical frame, updating in real-time.

You can also visualize what your robot’s camera is seeing. To view the rectified image (which is what the AprilTag detector uses):

ros2 run image_view image_view --ros-args -r image:=/cam_1/color/image_rect

Try moving your robot around to different positions where it can see the AprilTag. You should observe:

- The detection messages updating in the topic echo window

- The transform values changing as the relative position between camera and tag changes

- The AprilTag visible in the image viewer windows

Let me explain the transform from the camera’s optical frame to the AprilTag frame. Remember that we’re working with rectified images, which means the image has been processed to remove lens distortion and appear as if taken by an ideal camera.

In the camera’s optical frame (assume we are in front of the camera looking directly at the camera lens):

- Positive X points to the left

- Positive Y points down

- Positive Z points outward from the camera lens towards us

For the AprilTag (assume we are looking directly at the tag above the docking station, which is against the wall):

- Positive Z points out from the tag towards us (away from the wall and into the scene)

- Positive X points to the right of the tag

- Positive Y points up along the tag towards the ceiling

The translation [-0.088, 0.364, 0.805] tells us the tag’s position relative to the camera optical frame (assume we are looking through the camera lens at the AprilTag):

- -0.088m in X: Tag is slightly to the left of the camera’s center

- +0.364m in Y: Tag appears in the lower portion of the image

- +0.805m in Z: Tag is about 0.8 meters in front of the camera

The rotation, shown in degrees [156.319, 4.207, -1.394], represents:

- Roll (156.319°): This large value indicates the tag is mounted vertically on the wall. The tag orientation is achieved by rotating the camera optical frame (parent) about its x axis 156 degrees in the counterclockwise direction so that it aligns with the child tag36h11:0 frame (remember the right-hand rule of robotics)

- Pitch (4.207°): Small positive pitch shows the tag is almost directly facing the camera

- Yaw (-1.394°): Minimal yaw means the tag is mounted level on the wall with almost no rotation to the left or right

The transform matrix combines both the rotation and translation into a single 4×4 matrix, useful for computing the exact pose of the tag relative to the camera. This information will be essential for our docking system to determine how to approach the dock.

If you’re seeing the AprilTag detections and transforms being published, congratulations! Your AprilTag detection pipeline is working correctly.

I will now add the apriltag_dock_pose_publisher.launch.py launch file to the main navigation launch file rosmaster_x3_navigation.launch.py, which is inside the yahboom_rosmaster_bringup/launch folder.

Create a Detected Dock Pose Publisher

To make our docking system work with AprilTags, we need to create a node that will listen to the /tf topic for the transform between the cam_1_depth_optical_frame (parent frame) and the tag36h11:0 child frame. It will then publish this information as a geometry_msgs/PoseStamped message to a topic named detected_dock_pose.

Our Detected Dock Pose Publisher will process this transformation information and publish the dock’s AprilTag pose as a geometry_msgs/PoseStamped message on the “detected_dock_pose” topic. This publisher is important because it transforms the AprilTag pose data from the TF tree into a format that the docking system can directly use to locate and approach the dock accurately.

The Nav2 Docking Server uses the information on the detected_dock_pose topic through its SimpleChargingDock plugin. Looking at the SimpleChargingDock source code, this plugin specifically subscribes to:

- detected_dock_pose (geometry_msgs/msg/PoseStamped) – For getting the current pose of the AprilTag associated with the docking station

Let’s to create a node to add to our yahboom_rosmaster_navigation package that:

- Listens for the cam_1_depth_optical_frame to tag36h11:0 transform.

- Publishes this as a PoseStamped message to the detected_dock_pose topic with the “cam_1_depth_optical_frame” frame as the default frame_id in the header. The reference code here from NVIDIA serves as a rough guide to what we must do.

Open a terminal window, and type:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_docking/srctouch detected_dock_pose_publisher.cppAdd this code.

Save the file, and close it.

Update your CMakeLists.txt.

Now add this node to your apriltag_dock_pose_publisher.launch.py launch file in the yahboom_rosmaster_docking package.

Also uncomment the relevant lines in your main launch file: rosmaster_x3_navigation.launch.py.

Build the package.

cd ~/ros2_ws/ && colcon build && source ~/.bashrcTo test that everything is setup properly, launch the robot:

navNow run the following command:

ros2 topic info /detected_dock_pose -vYou should see one publisher (detected_dock_pose_publisher) and one subscriber (docking_server).

Navigate the robot over to the AprilTag so that the tag is in the camera’s field of view. Then type:

ros2 topic echo /detected_dock_pose My output is this:

The data should match the output from this command:

ros2 run tf2_ros tf2_echo cam_1_depth_optical_frame tag36h11:0To see what the camera sees, type:

ros2 run image_view image_view --ros-args -r image:=/cam_1/color/image_rectOverview of the Docking Action

Now that we have the data setup, it is time to configure the docking server for Nav2.

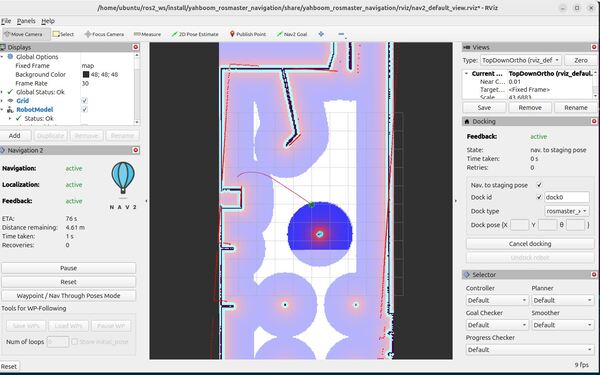

The docking action in Nav2 guides your robot from its current position to a successful connection with a charging dock. Here’s a high-level overview of how it works:

- The action server receives a request to dock, either specifying a dock ID from a known database or providing explicit dock pose information.

- If the robot isn’t already near the dock, it uses Nav2 to navigate to a predefined staging pose near the charging station.

- Once at the staging pose, the robot begins to search for and detect the April tag associated with the dock.

- Using visual feedback from the AprilTag, the robot enters a precision control loop, carefully maneuvering to align itself with the dock.

- The robot continues its approach until it detects contact with the dock or confirms that charging has begun.

- If the docking attempt fails, the system can retry the process a configurable number of times.

- Throughout the process, the action server provides feedback on the current state and elapsed time.

This structured approach allows for reliable docking across various robot types and environments, with the flexibility to handle different dock designs and detection methods.

Overview of the Undocking Action

The undocking action in Nav2 guides your robot from a docked position to a safe staging pose away from the charging station. Here’s a high-level overview of how it works:

- The action server receives a request to undock, optionally including the dock type if not already known from a previous docking operation.

- The system verifies the dock type and current docking status, ensuring the robot is actually docked (for charging docks).

- For charging docks, the system first attempts to disable charging before any physical movement begins.

- Once charging is disabled, the robot calculates its staging pose based on its current position relative to the dock.

- Using a precision control loop, the robot carefully maneuvers to the staging pose while maintaining specified linear and angular tolerances.

- The system monitors both position and charging status, only considering the undocking successful when the robot has reached the staging pose and charging has completely stopped.

- Throughout the process, the system enforces timeout limits and can respond to cancellation requests for safety.

This structured approach allows for reliable undocking that works across different robot platforms and dock types, while maintaining the safety and integrity of both the robot and charging equipment.

Create the Dock Database File

To use the Nav2 docking server, you’ll need to create a database of known docking stations in your environment. This database tells your robot where docks are located and what type they are. Here’s how to set it up. We will store our files in the yahboom_rosmaster_docking package.

You can find a guide of how to create the database file here on GitHub.

Open a terminal window, and type:

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_docking/configtouch dock_database.yaml Add this code.

Save the file, and close it.

In this file, we defined a dock with a unique identifier, its type, and its pose in your map. The pose is given as [x, y, theta] coordinates.

Configure the Docking Server

We now need to configure the docking_server.

First, let’s navigate to the folder where the Nav2 parameters are located.

Open a terminal and move to the config folder.

cd ~/ros2_ws/src/yahboom_rosmaster/yahboom_rosmaster_navigation/config/Now, let’s open the YAML file using a text editor: rosmaster_x3_nav2_default_params.yaml

Add these parameters.

docking_server:

ros__parameters:

controller_frequency: 10.0

initial_perception_timeout: 20.0 # Default 5.0

wait_charge_timeout: 5.0

dock_approach_timeout: 30.0

undock_linear_tolerance: 0.05

undock_angular_tolerance: 0.05

max_retries: 3

base_frame: "base_link"

fixed_frame: "odom"

dock_backwards: false

dock_prestaging_tolerance: 0.5

# Types of docks

dock_plugins: ['rosmaster_x3_dock']

rosmaster_x3_dock:

plugin: 'opennav_docking::SimpleChargingDock'

docking_threshold: 0.02

staging_x_offset: 0.75

staging_yaw_offset: 3.14

use_external_detection_pose: true

use_battery_status: false

use_stall_detection: false

stall_velocity_threshold: 1.0

stall_effort_threshold: 1.0

charging_threshold: 0.5

external_detection_timeout: 1.0

external_detection_translation_x: -0.18

external_detection_translation_y: 0.0

external_detection_rotation_roll: -1.57

external_detection_rotation_pitch: 1.57

external_detection_rotation_yaw: 0.0

filter_coef: 0.1

# Dock instances

dock_database: $(find-pkg-share yahboom_rosmaster_docking)/config/dock_database.yaml

controller:

k_phi: 3.0

k_delta: 2.0

beta: 0.4

lambda: 2.0

v_linear_min: 0.1

v_linear_max: 0.15

v_angular_max: 0.75

slowdown_radius: 0.25

use_collision_detection: true

costmap_topic: "/local_costmap/costmap_raw"

footprint_topic: "/local_costmap/published_footprint"

transform_tolerance: 0.1

projection_time: 5.0

simulation_step: 0.1

dock_collision_threshold: 0.3

Save the file, and close it.

You can find a detailed list and description of each of these parameters here.

In this scenario, the docking camera is on the front of the robot. Remember ROS conventions:

For the AprilTag (assume we are looking directly at the tag on the docking station):

- Z-axis points out from the tag (away from the docking station, towards us)

- X-axis points to the right of the tag

- Y-axis points up along the tag towards the celing

For the robot’s base_link:

- X-axis points forward

- Y-axis points left

- Z-axis points up

The docking pose of the robot is the AprilTag’s pose with certain translations and rotations applied.

The external_detection_* values in the docking parameters define offsets relative to the AprilTag frame to specify where the robot should dock.

We use the right hand rule here to figure out the values for the external_detection translations and rotations.

To properly express the docking pose relative to the AprilTag’s pose, we need to figure out what combination of rotations and translations of the parent AprilTag axes will match up to the desired docking pose.

You can see in the source code that the rotations are applied in this order: pitch (rotation around the y-axis by 90 degrees counterclockwise…1.57 radians), roll (rotation around the x-axis 90 degrees clockwise…-1.57 radians), then yaw (no rotation around the z-axis).

When you use your right hand to perform these rotations, you will see that your hand will align with the expected orientation of the robot when it is parked headfirst into the docking station.

Since the x-axis of the AprilTag points to the right (as if we are looking directly at the tag), we need that axis to point forward so that it is aligned with the robot base link forward direction x-axis.

To do this, we first rotate the AprilTag pose around its y axis (our middle finger). The z-x plane rotates counterclockwise around the positive y-axis 90 degrees. Counterclockwise rotations in robotics are positive by convention, so we have 1.5708 for external_detection_rotation_pitch. Remember pitch is another way of saying rotation around the y-axis.

Now we need to get the z-axis of the AprilTag pointing towards the sky. To do that, we need to rotate clockwise around the positive x-axis 90 degrees (imagine we are looking down at the arrow of the positive x-axis and watching the y-z plane rotate…rotate around your index finger). Clockwise rotations in robotics are negative by convention, so we have -1.5708 for external_detection_rotation_roll. Remember roll is another way of saying rotation around the x-axis.

Now our AprilTag orientation is aligned with the axes of the robot. Your thumb should be pointing towards the ceiling.

We then move 0.18 meters down the negative x-axis (move your forearm backwards in the direction of your elbow) to get to the desired docking position of the robot. That is why external_detection_translation_x = -0.18.

In robotics and computer graphics, translations and rotations are generally non-commutative – meaning the order in which you apply them produces different results. Remember you need to:

- First correct the orientation of the parent frame to match your expected child frame orientation.

- Then apply any translational offsets based on that corrected orientation

Test Docking and Undocking

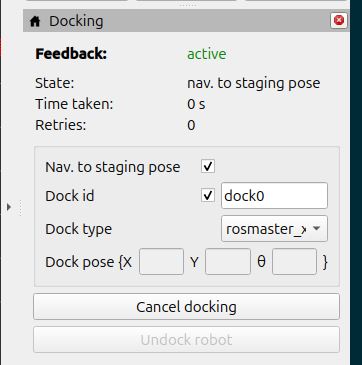

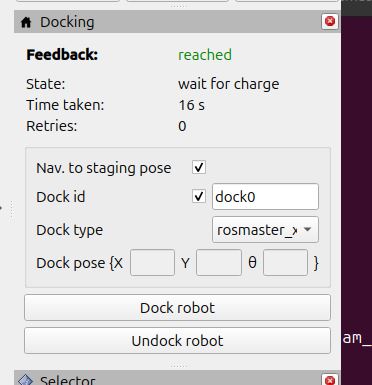

Now let’s send the robot to the dock using RViz.

Build your workspace.

cd ~/ros2_ws/colcon build && source ~/.bashrcLaunch navigation.

navOnce everything has come up, go to the right panel.

In the Dock id field, type dock0. This is the id of the docking station.

Next to Dock type, select rosmaster_x3_dock.

Click Dock robot.

We could have also done this:

ros2 action send_goal /dock_robot nav2_msgs/action/DockRobot "{use_dock_id: true, dock_id: 'dock0', dock_type: 'rosmaster_x3_dock', max_staging_time: 1000.0, navigate_to_staging_pose: true}"

To undock the robot, either click the Undock robot button in RViz, or type:

ros2 action send_goal /undock_robot nav2_msgs/action/UndockRobot "{dock_type: 'rosmaster_x3_dock', max_undocking_time: 30.0}"The nav2_docking server doesn’t allow a lot of control over the undocking process. I would prefer to just back out straight to the staging pose. I had best results by shortening the max_undocking_time to 2.0 seconds, letting the action abort, and then sending a regular navigation goal after that. This package is fairly new as of the time of this writing, and definitely needs a little more work to be ready for prime time.

ros2 action send_goal /undock_robot nav2_msgs/action/UndockRobot "{dock_type: 'rosmaster_x3_dock', max_undocking_time: 2.0}"That’s it.

If you want to take the work we did here to the next level, you can send docking and undocking goals by creating action clients that connect to the /dock_robot and /undock_robot action servers. You can write these clients using the Simple Commander API if you want to use Python, or you can use C++.

Keep building!