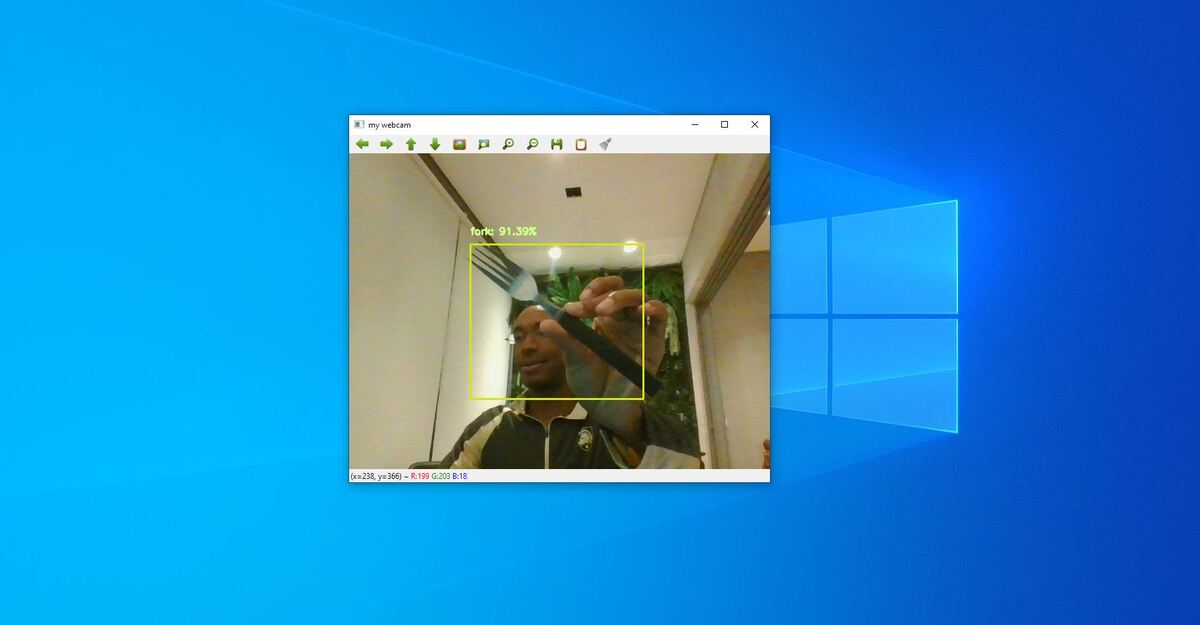

In this tutorial, we will load a TensorFlow model (i.e. neural network) using the popular computer vision library known as OpenCV. To make things interesting, we will build an application to detect eating utensils (i.e. forks, knives, and spoons). Here is what the final output will look like:

Our goal is to build an early prototype of a product that can make it easier and faster for a robotic chef arm, like the one created by Moley Robotics, to detect forks, knives, and spoons.

You Will Need

Directions

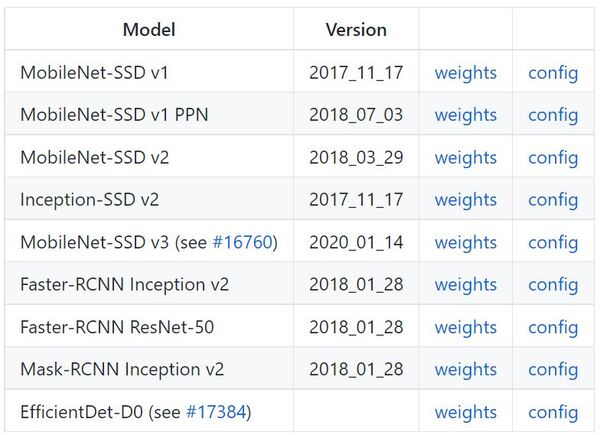

Open this page for the TensorFlow Object Detection API.

Download the weights file and the config file for one of the pretrained object detection models. I will use Inception-SSD v2. Right-click each link and choose Save As.

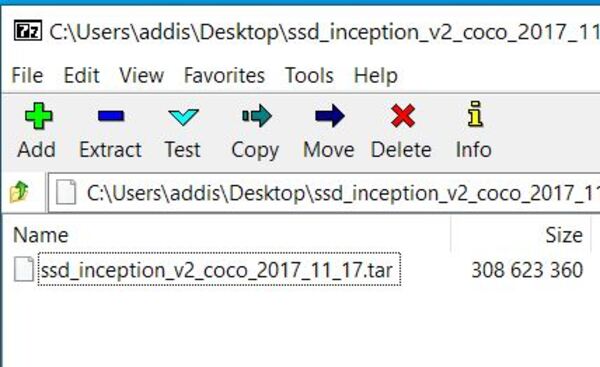

Use a program like 7-Zip to open the tar.gz archive file (i.e. click Open archive if using 7-Zip).

Double click on ssd_inception_v2_coco_2017_11_17.tar.

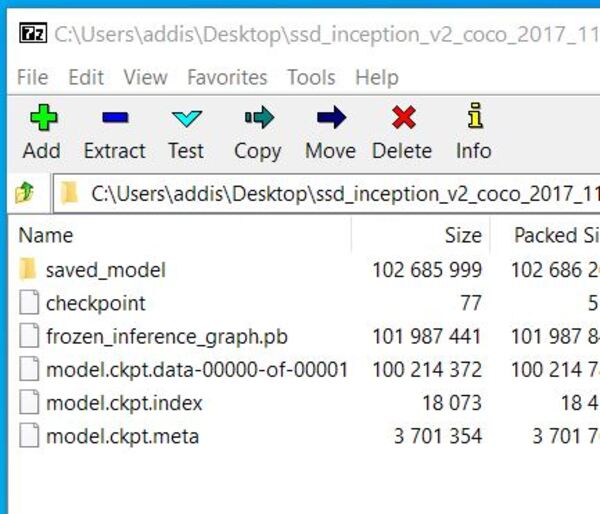

Double click again on the folder name.

Locate a file inside the folder named frozen_inference_graph.pb. Move this file to your working directory (i.e. the same directory where we will write our Python program later in this tutorial).
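If you would rather not use 7-Zip, the same extraction can be sketched with Python's standard tarfile module. This is a minimal sketch, not part of the original workflow: the helper name extract_frozen_graph is hypothetical, and the archive name ssd_inception_v2_coco_2017_11_17.tar.gz is an assumption based on the folder name shown above.

```python
import shutil
import tarfile
from pathlib import Path

GRAPH_NAME = "frozen_inference_graph.pb"


def extract_frozen_graph(archive_path, dest_dir="."):
    """Copy frozen_inference_graph.pb out of a .tar.gz archive into dest_dir."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as archive:
        for member in archive.getmembers():
            # The file sits inside a folder in the archive, so match on the base name
            if member.isfile() and Path(member.name).name == GRAPH_NAME:
                target = dest / GRAPH_NAME
                with archive.extractfile(member) as src, open(target, "wb") as out:
                    shutil.copyfileobj(src, out)
                return target
    raise FileNotFoundError(GRAPH_NAME + " not found in " + str(archive_path))


if __name__ == "__main__":
    # Assumed archive name; adjust it to match the file you downloaded
    archive_name = "ssd_inception_v2_coco_2017_11_17.tar.gz"
    if Path(archive_name).exists():
        print("Extracted:", extract_frozen_graph(archive_name))
    else:
        print("Archive not found:", archive_name)
```

Run it from your working directory so the pb file lands next to the Python program we write later.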

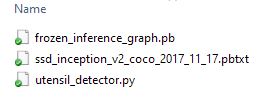

You also need a file named ssd_inception_v2_coco_2017_11_17.pbtxt, which comes from the config link. This is a PBTXT file, i.e. a plain-text description of the network that OpenCV reads alongside the pb file.

To create the ssd_inception_v2_coco_2017_11_17.pbtxt file, I right clicked on config, clicked Save As, saved to my Desktop, and then copied the contents of the pbtxt file on GitHub into this pbtxt file using Notepad++.

Make sure the pb and pbtxt files are both in your working directory.
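A quick way to confirm this before running anything is a short check like the one below. It is only a sketch: the helper name missing_model_files is hypothetical, and the two file names are the ones used in this tutorial.

```python
from pathlib import Path

# The two model files this tutorial expects in the working directory
REQUIRED_FILES = (
    "frozen_inference_graph.pb",
    "ssd_inception_v2_coco_2017_11_17.pbtxt",
)


def missing_model_files(directory="."):
    """Return the required model files that are absent from directory."""
    folder = Path(directory)
    return [name for name in REQUIRED_FILES if not (folder / name).is_file()]


if __name__ == "__main__":
    missing = missing_model_files()
    if missing:
        print("Missing from working directory:", ", ".join(missing))
    else:
        print("Both model files found.")
```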

Alternatively, you can just download my pb file and my pbtxt file.

Now create a new Python program in your working directory called utensil_detector.py.

Add the following code:

    # Project: Eating Utensil Detector Using TensorFlow and OpenCV
    # Author: Addison Sears-Collins
    # Date created: August 1, 2021
    # Description: This program detects forks, spoons, and knives

    import cv2 as cv  # OpenCV computer vision library
    import numpy as np  # Scientific computing library

    # COCO class names without ID gaps (kept for reference only, not used below)
    # classes = ['person','bicycle','car','motorcycle','airplane','bus','train','truck','boat','traffic light','fire hydrant',
    # 'stop sign','parking meter','bench','bird','cat','dog','horse','sheep','cow','elephant','bear','zebra','giraffe',
    # 'backpack','umbrella','handbag','tie','suitcase','frisbee','skis','snowboard','sports ball','kite',
    # 'baseball bat','baseball glove','skateboard','surfboard','tennis racket','bottle','wine glass','cup','fork','knife',
    # 'spoon','bowl','banana','apple','sandwich','orange','broccoli','carrot','hot dog','pizza','donut','cake',
    # 'chair','couch','potted plant','bed','dining table','toilet','tv','laptop','mouse','remote','keyboard',
    # 'cell phone','microwave','oven','toaster','sink','refrigerator','book','clock','vase','scissors','teddy bear',
    # 'hair drier','toothbrush']

    # Class names indexed by class ID. Index 0 is the background, and the
    # "unknown" entries are placeholders for unused IDs, so that classes[idx]
    # lines up with the class ID returned by the network.
    classes = ["background", "person", "bicycle", "car", "motorcycle",
        "airplane", "bus", "train", "truck", "boat", "traffic light", "fire hydrant",
        "unknown", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse",
        "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "unknown", "backpack",
        "umbrella", "unknown", "unknown", "handbag", "tie", "suitcase", "frisbee", "skis",
        "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard",
        "surfboard", "tennis racket", "bottle", "unknown", "wine glass", "cup", "fork", "knife",
        "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog",
        "pizza", "donut", "cake", "chair", "couch", "potted plant", "bed", "unknown", "dining table",
        "unknown", "unknown", "toilet", "unknown", "tv", "laptop", "mouse", "remote", "keyboard",
        "cell phone", "microwave", "oven", "toaster", "sink", "refrigerator", "unknown",
        "book", "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush"]

    # Colors we will use for the object labels
    colors = np.random.uniform(0, 255, size=(len(classes), 3))

    # Open the webcam
    cam = cv.VideoCapture(0)

    pb = 'frozen_inference_graph.pb'
    pbt = 'ssd_inception_v2_coco_2017_11_17.pbtxt'

    # Read the neural network
    cvNet = cv.dnn.readNetFromTensorflow(pb, pbt)

    while True:

        # Read in the frame
        ret_val, img = cam.read()

        # Stop if the camera did not return a frame
        if not ret_val:
            break

        rows = img.shape[0]
        cols = img.shape[1]
        cvNet.setInput(cv.dnn.blobFromImage(img, size=(300, 300), swapRB=True, crop=False))

        # Run object detection
        cvOut = cvNet.forward()

        # Go through each object detected and label it
        for detection in cvOut[0, 0, :, :]:
            score = float(detection[2])
            if score > 0.3:

                idx = int(detection[1])  # prediction class index

                # If you want all classes to be labeled instead of just forks, spoons, and knives,
                # remove the if statement below and unindent its body
                if classes[idx] == 'fork' or classes[idx] == 'spoon' or classes[idx] == 'knife':
                    # Box corners are fractions of the frame size, so scale them to pixels
                    left = detection[3] * cols
                    top = detection[4] * rows
                    right = detection[5] * cols
                    bottom = detection[6] * rows
                    cv.rectangle(img, (int(left), int(top)), (int(right), int(bottom)), (23, 230, 210), thickness=2)

                    # Draw the prediction on the frame
                    label = "{}: {:.2f}%".format(classes[idx], score * 100)
                    y = top - 15 if top - 15 > 15 else top + 15
                    cv.putText(img, label, (int(left), int(y)), cv.FONT_HERSHEY_SIMPLEX, 0.5, colors[idx], 2)

        # Display the frame
        cv.imshow('my webcam', img)

        # Press ESC to quit
        if cv.waitKey(1) == 27:
            break

    # Stop filming
    cam.release()

    # Close down OpenCV
    cv.destroyAllWindows()

Save the code.
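To see what the inner loop of the program does with each detection, here is a small standalone sketch that needs neither OpenCV nor a webcam. It assumes the row layout the program relies on: index 1 is the class ID, index 2 is the confidence score, and indices 3 to 6 are the left, top, right, and bottom box edges as fractions of the frame size. The helper name detection_to_box and the sample row are made up for illustration; the IDs 48, 49, and 50 are the positions of fork, knife, and spoon in the classes list.

```python
# Utensil class IDs, matching the positions in the classes list of the program
UTENSIL_NAMES = {48: "fork", 49: "knife", 50: "spoon"}


def detection_to_box(detection, rows, cols, min_score=0.3):
    """Return (name, score, left, top, right, bottom) in pixels.

    Returns None when the score is too low or the class is not a utensil.
    """
    class_id = int(detection[1])
    score = float(detection[2])
    if score <= min_score:
        return None
    name = UTENSIL_NAMES.get(class_id)
    if name is None:
        return None
    # Scale the fractional box coordinates to pixel coordinates
    left = int(detection[3] * cols)
    top = int(detection[4] * rows)
    right = int(detection[5] * cols)
    bottom = int(detection[6] * rows)
    return (name, score, left, top, right, bottom)


if __name__ == "__main__":
    # Made-up detection row for a 640 x 480 frame
    sample = [0, 48, 0.5, 0.25, 0.5, 0.75, 0.875]
    print(detection_to_box(sample, rows=480, cols=640))
```

Raising min_score makes the detector stricter, while lowering it shows more (and less reliable) boxes.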

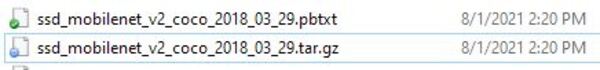

Here is what my working directory looks like.

Run the code.

    python utensil_detector.py

You should see object detection running in real time. If you place a fork, knife, or spoon in front of the camera, you will see it labeled accordingly.

That’s it! Keep building!