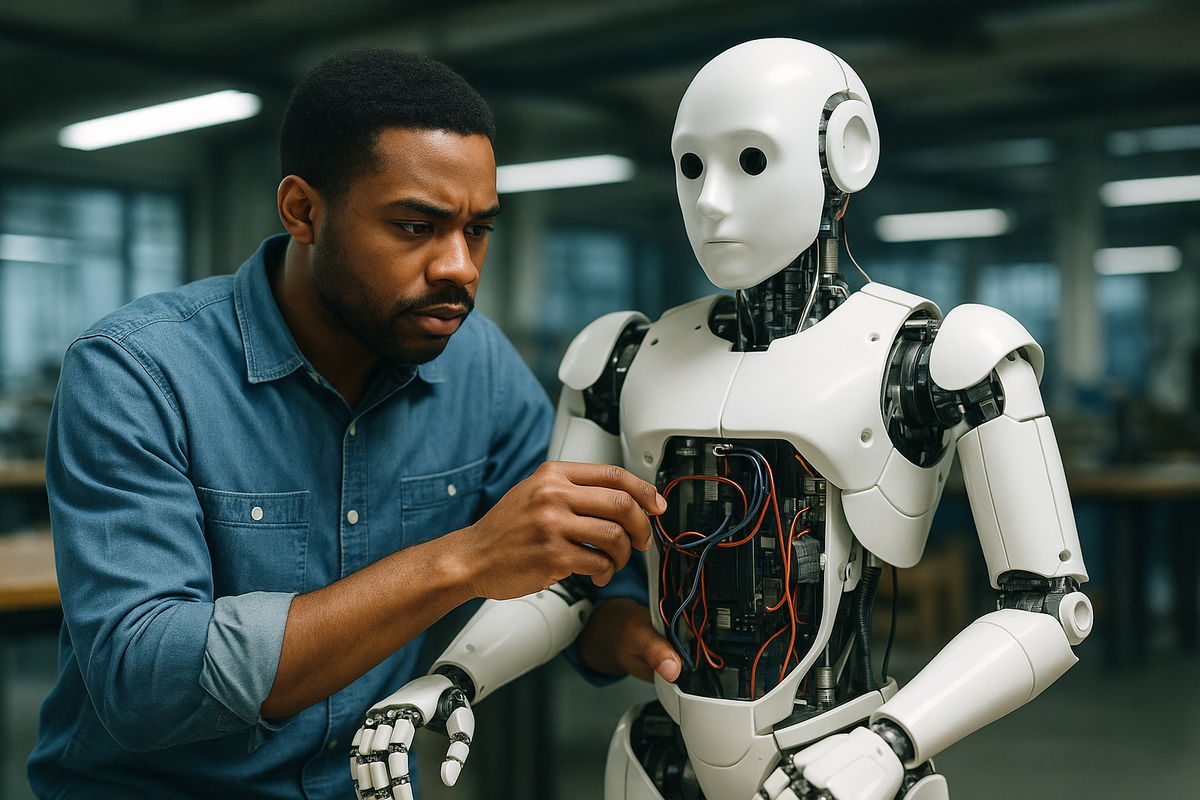

Some skills will make your robotics career. Others will quietly kill it.

You won’t hear this in a classroom. You won’t find it on a résumé checklist. But if you’re betting your future on the wrong skills, you’re not leveling up. You’re self-sabotaging.

Because while everyone’s out here flexing about how many programming languages they know or name-dropping AI like it’s seasoning, the real game-changers? They’re mastering the boring, unsexy, elite stuff.

In this video, we break down:

- The most overrated skills in robotics (yes, we’re naming names)

- The underrated ones that actually get robots working

- Why debugging and systems integration might matter more than your entire GitHub

- And how poor communication can ruin even the smartest engineer

If you’re serious about robotics, stop chasing clout.

Watch this and find out what actually moves the needle.