In this tutorial, we will explore templates and macros in C++.

Prerequisites

- You have completed this tutorial: How to Use Lambda Expressions and File I/O in C++.

- I am assuming you are using Visual Studio Code, but you can use any code editor.

Employing Macros

Let’s explore how to use macros in C++ and their application in robotics projects. Macros are defined with the #define preprocessor directive. Before compilation, the preprocessor replaces each use of a macro with its definition as plain text, which lets you define reusable pieces of code.

Open a terminal window, and type this:

    cd ~/Documents/cpp_tutorial && code .

Create a new C++ file and name it basic_macro.cpp.

Type the following code into the editor:

    #include <iostream>

    // Define a constant macro
    #define PI 3.14159

    // Define a function-like macro
    #define AREA_CIRCLE(radius) (PI * (radius) * (radius))

    int main() {
        double radius = 5.0;
        double area = AREA_CIRCLE(radius);

        std::cout << "The area of a circle with radius " << radius << " is: " << area << std::endl;

        return 0;
    }

In this code, we define two macros:

- PI is a constant macro that defines the value of pi.

- AREA_CIRCLE(radius) is a function-like macro that calculates the area of a circle given its radius.

In the main() function, we use the AREA_CIRCLE macro to calculate the area of a circle with a radius of 5.0 and store the result in the area variable. We then print the calculated area using std::cout.

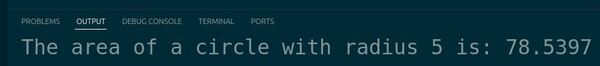

Run the code.

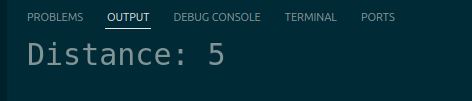

The output displays the calculated area of the circle using the AREA_CIRCLE macro.

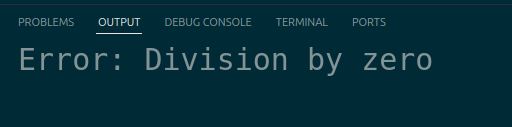

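It’s important to note that macros should be used sparingly and with caution. The preprocessor performs no type checking, ignores scopes and namespaces, and may evaluate the argument of a function-like macro more than once, all of which can lead to unexpected behavior. In modern C++, const or constexpr variables, inline functions, or templates are often preferred over macros when possible.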

Implementing Template Functions

Let’s explore template functions in C++, which allow us to write generic functions that can work with different data types. This is particularly useful in robotics when dealing with various sensor data types or mathematical operations.

Create a new C++ file and name it template_functions_example.cpp.

Type the following code into the editor:

    #include <iostream>

    template<typename T>
    T find_max(T a, T b) {
        return (a > b) ? a : b;
    }

    int main() {
        std::cout << "Max of 10 and 20 is: " << find_max<int>(10, 20) << std::endl;
        std::cout << "Max of 5.5 and 2.1 is: " << find_max<double>(5.5, 2.1) << std::endl;

        return 0;
    }

In this code, we define a template function find_max that takes two parameters of the same type and returns the greater of the two. The function uses the ternary operator to compare the two values. We then test this function with integers and doubles to show its versatility.

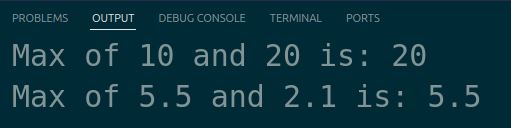

Run the code.

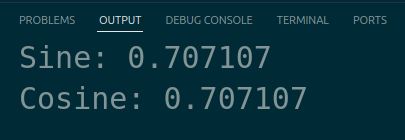

The output should display “Max of 10 and 20 is: 20” and “Max of 5.5 and 2.1 is: 5.5”, demonstrating how the template function adapts to different data types.

Defining Template Classes

Let’s explore template classes in C++, which allow us to create generic classes that can work with different data types. This is particularly useful for creating reusable data structures in robotics applications.

Create a new C++ file and name it template_class.cpp.

Type the following code into the editor:

    #include <iostream>

    // Template class for a point in 2D space
    template <typename T>
    class Point {
    private:
        T x;
        T y;

    public:
        Point(T x, T y) : x(x), y(y) {}

        T getX() const { return x; }
        T getY() const { return y; }

        void printPoint() const {
            std::cout << "(" << x << ", " << y << ")" << std::endl;
        }
    };

    int main() {
        Point<int> int_point(5, 10);
        Point<double> double_point(3.14, 2.71);

        std::cout << "Integer point: ";
        int_point.printPoint();

        std::cout << "Double point: ";
        double_point.printPoint();

        return 0;
    }

In this code, we define a template class called Point that represents a point in 2D space. The class has two private member variables, x and y, of type T. The typename keyword is used to specify that T is a type parameter.

The Point class has a constructor that takes x and y values and initializes the member variables. It also provides getter functions getX() and getY() to access the values of x and y, respectively. The printPoint() function is a member function that prints the point in the format (x, y).

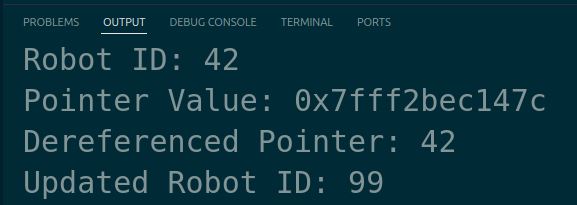

In the main() function, we create two instances of the Point class: int_point with integer values and double_point with double values.

We use the printPoint() function to print the points and verify that the template class works correctly with different data types.

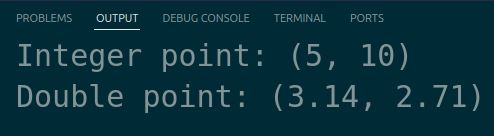

Run the code.

The output displays the points created with integer and double values.

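Template classes provide flexibility and help reduce code duplication, which makes the code easier to maintain. Because the compiler generates a separate class for each type you use, such as Point<int> and Point<double>, this flexibility adds no runtime overhead.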

Thanks, and I’ll see you in the next tutorial.

Keep building!